Assessing Patient Comprehension in Informed Consent: Strategies, Challenges, and Future Directions for Clinical Research

Abstract

This comprehensive review addresses the critical challenge of patient comprehension in the informed consent process, a fundamental ethical requirement in clinical research and drug development. Despite its importance, systematic evidence reveals significant gaps in patients' understanding of core consent elements such as randomization, risks, and therapeutic alternatives. This article synthesizes current empirical findings on comprehension barriers, evaluates methodological approaches for assessment, identifies systemic and communication-related challenges, and explores innovative solutions and validation frameworks. Targeting researchers, scientists, and drug development professionals, the content provides evidence-based strategies to enhance consent processes, improve patient understanding, and uphold ethical standards in clinical trials through technological integration and standardized assessment protocols.

The Comprehension Gap: Understanding the Current State of Patient Understanding in Informed Consent

The Ethical and Legal Imperative of Adequate Comprehension in Informed Consent

The legal doctrine of informed consent represents a cornerstone of ethical research and clinical practice, historically emphasizing information disclosure as its primary requirement. However, mounting evidence reveals that despite technically adequate disclosures, patients and research participants often struggle with substantive comprehension of risks, benefits, and alternatives [1]. This gap between disclosure and understanding undermines the ethical foundation of informed consent: the principle of respect for personal autonomy [2]. Within the context of a broader thesis on assessing patient comprehension, this application note establishes that moving beyond mere disclosure to ensure adequate comprehension is both an ethical imperative and an evolving legal standard. We present standardized protocols and analytical frameworks to systematically integrate comprehension assessment into informed consent processes, particularly addressing challenges posed by digital health research and diverse participant populations [3].

Quantitative Evidence: The Comprehension Gap and Intervention Efficacy

Readability Deficits in Current Materials

Recent studies consistently demonstrate that patient and research materials routinely exceed recommended readability levels, creating structural barriers to comprehension. The following table synthesizes key findings across healthcare and research contexts:

| Material Type | Recommended Reading Level | Actual Reading Level (Mean) | Primary Assessment Tool | Study Reference |
|---|---|---|---|---|
| Online Patient Education Materials (PEMs) from Major Health Associations | 6th grade | 9.6 - 10.7 | Flesch-Kincaid Grade Level (FKGL) | [4] |
| Institutional PEMs in Academic Health Systems | 5th-6th grade | Above 8th grade | Simple Measure of Gobbledygook (SMOG) Index | [5] |
| Digital Health Research Consent Forms | 6th-8th grade | Not specified | Character length, lexical density | [3] |
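The grade levels in this table come from formula-based indices. As a minimal sketch, the standard Flesch-Kincaid Grade Level and Flesch Reading Ease formulas can be computed from word, sentence, and syllable counts. The vowel-group syllable counter and the regular-expression tokenizers below are simplifying assumptions, not what any particular readability calculator does; validated tools use dictionaries and more careful rules, so their scores will differ somewhat.

```python
import re

def count_syllables(word: str) -> int:
    """Rough syllable estimate (assumption): count vowel groups, drop a silent final 'e'."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    # Treat a final 'e' as silent ("make"), but keep "-le" endings ("simple").
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)

def flesch_scores(words: int, sentences: int, syllables: int) -> dict:
    """Standard Flesch-Kincaid Grade Level and Flesch Reading Ease from raw counts."""
    wps = words / sentences  # average words per sentence
    spw = syllables / words  # average syllables per word
    return {
        "fkgl": 0.39 * wps + 11.8 * spw - 15.59,
        "reading_ease": 206.835 - 1.015 * wps - 84.6 * spw,
    }

def readability(text: str) -> dict:
    """Naive tokenization (assumption): words are letter runs, sentences end in . ! or ?"""
    tokens = re.findall(r"[A-Za-z']+", text)
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    syllables = sum(count_syllables(t) for t in tokens)
    counts = {"words": len(tokens), "sentences": sentences, "syllables": syllables}
    return {**counts, **flesch_scores(len(tokens), sentences, syllables)}

print(readability("You can stop at any time. Stopping will not change your care."))
```

Lower FKGL and higher Reading Ease both indicate easier text, which is why the two are usually reported together.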

Efficacy of Readability Interventions

Multiple interventions have demonstrated effectiveness in improving readability metrics. The following table quantifies the impact of Large Language Model (LLM) optimization on patient education materials:

| LLM Intervention | Pre-Intervention Grade Level | Post-Intervention Grade Level | Reduction in Word Count | Accuracy Rate |
|---|---|---|---|---|
| ChatGPT (GPT-4) | 10.1 | 7.6 | 51.8% (410.9 to 198.1 words) | 100% |
| Gemini (Gemini-1.5-flash) | 10.1 | 6.6 | 59.4% (410.9 to 166.7 words) | 96.7% |
| Claude (Claude 3.5 Sonnet) | 10.1 | 5.6 | 57.1% (410.9 to 176.2 words) | 96.7% |
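The word-count reductions follow directly from the mean lengths reported in the table. A short check in Python, using only the table's values, reproduces the 51.8%, 59.4%, and 57.1% figures and the corresponding drops in grade level:

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction, in percent, rounded to one decimal place."""
    return round((before - after) / before * 100, 1)

BASELINE_WORDS = 410.9  # mean source length from the table
BASELINE_GRADE = 10.1   # mean pre-intervention grade level from the table

# (mean post-intervention words, mean post-intervention grade level) per model
results = {
    "ChatGPT (GPT-4)": (198.1, 7.6),
    "Gemini (Gemini-1.5-flash)": (166.7, 6.6),
    "Claude (Claude 3.5 Sonnet)": (176.2, 5.6),
}

for model, (words, grade) in results.items():
    print(f"{model}: {percent_reduction(BASELINE_WORDS, words)}% fewer words, "
          f"{round(BASELINE_GRADE - grade, 1)} grade levels lower")
```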

Beyond readability metrics, studies evaluating participant preferences for consent communication formats reveal important demographic variations. In digital health research, when original consent text character length was longer, participants were 1.20 times more likely to prefer modified, more readable text (P=.04), with this preference being particularly strong for snippets explaining study risks (P=.03) [3]. Furthermore, older participants preferred original consent language 1.95 times more than younger participants (P=.004), highlighting how demographic factors influence communication preferences [3].

Experimental Protocols for Comprehension Assessment

Protocol 1: Text Snippet Preference Testing for Consent Form Optimization

This protocol evaluates participant preferences between original and readability-modified consent form sections, identifying optimal communication strategies for specific study populations [3].

Materials and Reagents:

- Institutional Review Board (IRB)-approved original consent form

- Readability analysis software (e.g., Readability Calculator)

- Digital survey platform with randomization capabilities

- Secure data storage system (HIPAA-compliant cloud storage)

Procedure:

- Text Preparation: Deconstruct the IRB-approved consent form into 25-35 logical paragraph-length "snippets" covering all consent domains (procedures, risks, benefits, data usage, etc.).

- Readability Modification: Have three research team members independently modify each snippet, using readability software to improve Flesch-Kincaid Reading Ease, reduce character length, and optimize lexical density.

- Expert Consensus: Convene the research team to compare modified versions against originals and reach consensus on the "most readable" version for each snippet.

- Survey Programming: Program a digital survey presenting participants with paired snippets (original vs. modified) in random order, requiring preference selection for each pair.

- Participant Recruitment: Recruit participants meeting eligibility criteria for the actual study (a sample of roughly 75-100 participants is suggested for most analyses; confirm with a power calculation for the planned regression models).

- Data Collection: Administer survey, collecting preference data alongside demographic characteristics (age, sex, ethnicity, education, technology familiarity).

- Statistical Analysis: Employ multivariate regression models to identify associations between participant characteristics and text preferences, with particular attention to risk-related sections.
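The survey programming step can be sketched as follows, assuming each snippet is stored as an (original, modified) pair under a hypothetical snippet ID. Seeding a per-participant random generator is one way to make the presentation order reproducible for audit; randomizing the order of snippets, in addition to the order within each pair, is an optional design choice rather than part of the published protocol.

```python
import random

def build_survey(snippets: dict, participant_id: str,
                 study_seed: str = "consent-snippets") -> list:
    """Return snippet pairs in a participant-specific random order.

    snippets maps a (hypothetical) snippet ID to (original_text, modified_text).
    Seeding with the participant ID makes each participant's order reproducible.
    """
    rng = random.Random(f"{study_seed}:{participant_id}")
    pairs = []
    for snippet_id, (original, modified) in snippets.items():
        options = [("original", original), ("modified", modified)]
        rng.shuffle(options)  # randomize which version is shown first
        pairs.append({"snippet": snippet_id, "first": options[0], "second": options[1]})
    rng.shuffle(pairs)  # optional: also randomize the order of snippets
    return pairs

example = {
    "risks-01": ("Original risk paragraph...", "Simplified risk paragraph..."),
    "data-use-01": ("Original data-use paragraph...", "Simplified data-use paragraph..."),
}
for pair in build_survey(example, "P001"):
    print(pair["snippet"], pair["first"][0], "vs", pair["second"][0])
```

Recording which version appeared first also lets the analysis check for an order effect alongside the demographic predictors.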

Validation Metrics:

- Quantitative preference ratios between original and modified texts

- Statistical significance of demographic variables on preferences (P<.05)

- Effect sizes for character length reduction on preference selection
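One simple way to express the first validation metric is, for each snippet, the proportion of participants choosing the modified text, the modified-to-original preference ratio, and an exact two-sided binomial test against no preference (p = 0.5). This is a minimal sketch; it does not replace the multivariate regression used to test demographic effects.

```python
from math import comb

def binomial_two_sided_p(k: int, n: int) -> float:
    """Exact two-sided binomial test of k successes in n trials against p = 0.5."""
    probs = [comb(n, i) / 2 ** n for i in range(n + 1)]
    observed = probs[k]
    # Sum every outcome no more likely than the observed one (small tolerance for float ties).
    return min(1.0, sum(p for p in probs if p <= observed * (1 + 1e-9)))

def summarize_snippet(choices: list) -> dict:
    """choices holds 'original' or 'modified', one entry per participant."""
    n_mod = choices.count("modified")
    n_orig = choices.count("original")
    n = n_mod + n_orig
    return {
        "prefer_modified": n_mod / n,
        "preference_ratio": n_mod / n_orig if n_orig else float("inf"),
        "p_value": binomial_two_sided_p(n_mod, n),
    }

# Hypothetical responses for one snippet
print(summarize_snippet(["modified"] * 8 + ["original"] * 2))
```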

Protocol 2: LLM-Assisted Readability Optimization with Human Validation

This protocol utilizes large language models to systematically improve consent form readability while maintaining accuracy through structured human oversight [4].

Materials and Reagents:

- Source patient education or consent materials (500-1000 word length ideal)

- Multiple LLM platforms (ChatGPT, Gemini, Claude) with API or web interface access

- Readability assessment tools (Flesch Reading Ease, FKGL, Gunning Fog, SMOG)

- Patient Education Materials Assessment Tool (PEMAT) for understandability scoring

Procedure:

- Baseline Assessment: Calculate baseline readability scores for all source materials using multiple validated indices.

- LLM Optimization: Prompt each LLM with: "Translate to a fifth-grade reading level" followed by the original text.

- Output Collection: Save all LLM-generated versions with timestamps and model version information.

- Readability Re-assessment: Calculate identical readability metrics for all LLM-generated versions.

- Accuracy Validation: Have two independent non-clinical team members review LLM outputs against originals for factual accuracy, flagging any discrepancies.

- Clinical Review: Have physician team members make final determinations on flagged inaccuracies.

- Understandability Assessment: Apply PEMAT scoring to original and optimized versions to ensure understandability is maintained or improved.

- Iterative Refinement: Use prompt engineering to address identified inaccuracies or understandability issues.
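The output-collection, accuracy-validation, and clinical-review steps imply a simple record per LLM output. The sketch below assumes hypothetical field names: any non-empty flag from either non-clinical reviewer routes the output to physician review, and the accuracy rate counts outputs with no physician-confirmed errors.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

PROMPT = "Translate to a fifth-grade reading level"  # prompt wording from the protocol

@dataclass
class LLMOutput:
    """One saved LLM rewrite of a source document (field names are hypothetical)."""
    source_id: str
    model: str
    model_version: str
    text: str
    prompt: str = PROMPT
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())
    reviewer_flags: dict = field(default_factory=dict)    # reviewer -> list of flagged discrepancies
    confirmed_errors: list = field(default_factory=list)  # filled in after physician review

def needs_clinical_review(output: LLMOutput) -> bool:
    """Route to a physician if either non-clinical reviewer flagged anything."""
    return any(output.reviewer_flags.values())

def accuracy_rate(outputs: list) -> float:
    """Share of outputs with no physician-confirmed inaccuracies."""
    return sum(1 for o in outputs if not o.confirmed_errors) / len(outputs)

# Hypothetical usage: two rewrites of the same source document.
a = LLMOutput("pem-001", "model-a", "hypothetical-v1", "simplified text A")
b = LLMOutput("pem-001", "model-b", "hypothetical-v2", "simplified text B")
a.reviewer_flags = {"reviewer_1": [], "reviewer_2": []}
b.reviewer_flags = {"reviewer_1": ["risk sentence omitted"], "reviewer_2": []}
if needs_clinical_review(b):
    b.confirmed_errors.append("risk sentence omitted")  # physician confirms the flag
print(accuracy_rate([a, b]))
```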

Validation Metrics:

- Statistical comparison of pre-post readability scores (Wilcoxon signed rank test)

- Accuracy preservation rates (target >95%)

- PEMAT understandability score maintenance or improvement

- Word count reduction percentage
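The pre-post comparison would normally be run with a statistics package (for example, `scipy.stats.wilcoxon`). To show the mechanics, the sketch below computes the Wilcoxon signed-rank statistic with average ranks for ties and a normal approximation for the two-sided p-value; the approximation is crude for small samples, where an exact test is preferable. The example scores are hypothetical.

```python
import math

def wilcoxon_signed_rank(pre: list, post: list) -> dict:
    """Wilcoxon signed-rank test with a normal approximation (two-sided).

    Zero differences are dropped; tied |differences| get average ranks,
    with the usual tie correction to the variance.
    """
    # Round differences so float noise does not break ties between equal changes.
    diffs = [round(b - a, 10) for a, b in zip(pre, post)]
    diffs = [d for d in diffs if d != 0]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of this tie group
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return {"n": n, "w_plus": w_plus, "w_minus": w_minus, "z": z, "p": p}

# Hypothetical grade levels for five documents before and after LLM rewriting
print(wilcoxon_signed_rank([10, 11, 12, 9, 10.5], [7, 8, 6, 7, 8]))
```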

Visualization Frameworks for Comprehension-Focused Consent

Comprehension Assessment Workflow

Multi-Stage Consent Protocol Development

| Tool/Resource | Function | Application Context |
|---|---|---|
| Readability Calculator | Analyzes text complexity using multiple validated metrics | Initial consent form assessment and modification tracking |
| Patient Education Materials Assessment Tool (PEMAT) | Assesses understandability and actionability of materials | Validating that simplified materials remain understandable and actionable |
| Large Language Models (GPT-4, Gemini, Claude) | Text simplification while (ideally) preserving meaning | Rapid generation of readability-optimized consent form variations |
| Flesch-Kincaid Grade Level | Estimates U.S. grade level required to understand text | Standardized readability metric recommended by NIH guidelines |
| Simple Measure of Gobbledygook (SMOG) Index | Assesses reading comprehension level needed | Highly effective readability predictor (r=0.79, sensitivity=0.89) [5] |
| Teach-back Method | Assesses understanding by asking patients to explain the information back in their own words | Direct evaluation of comprehension during consent process |
| Research Electronic Data Capture (REDCap) | Securely manages participant preference and comprehension data | Structured data collection for comprehension studies |
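The SMOG Index is a single formula driven by the count of polysyllabic words (three or more syllables). A minimal sketch, assuming the polysyllable and sentence counts have already been obtained, manually or with a syllable counter:

```python
import math

def smog_grade(polysyllables: int, sentences: int) -> float:
    """SMOG grade: 1.0430 * sqrt(polysyllables * 30 / sentences) + 3.1291.

    polysyllables counts words with three or more syllables. SMOG is normally
    applied to a 30-sentence sample (10 sentences each from the beginning,
    middle, and end of the text); the 30 / sentences factor rescales other sample sizes.
    """
    if sentences <= 0:
        raise ValueError("need at least one sentence")
    return 1.0430 * math.sqrt(polysyllables * 30 / sentences) + 3.1291

# Hypothetical counts: 30 polysyllabic words in a 30-sentence sample
print(round(smog_grade(30, 30), 2))
```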

Adequate comprehension represents both an ethical imperative and an emerging legal standard in informed consent. Quantitative evidence demonstrates significant gaps between disclosure and understanding, while validated protocols provide a roadmap for systematic comprehension assessment. The integration of demographic analysis, readability optimization, and direct comprehension measurement enables researchers to move beyond signature collection to meaningful understanding. For the broader thesis on assessing patient comprehension, these application notes provide methodological frameworks for operationalizing comprehension as a measurable construct rather than an assumed outcome, which is particularly crucial in complex domains like digital health research, where technological complexity introduces novel comprehension challenges [3]. Future directions include developing standardized comprehension metrics across diverse populations and validating brief but sensitive comprehension assessment tools for routine use in both clinical and research contexts.

Application Notes and Protocols

Quantitative Evidence of Comprehension Deficits

Empirical studies consistently demonstrate that patient comprehension of fundamental informed consent components is critically low, undermining the ethical principle of autonomy in contemporary clinical practice [6].

Table 1: Patient Comprehension Levels of Specific Informed Consent Components [6]

| Informed Consent Component | Level of Patient Comprehension | Key Findings from Empirical Studies |
|---|---|---|
| Freedom to Withdraw | High (76-100% across studies) | Participants demonstrated highest understanding regarding voluntary participation and right to withdraw [6]. |
| Blinding | Moderate to High (58-89.7%) | Understanding excluded knowledge about investigators' blinding; concepts of placebo and randomization poorly understood [6]. |
| Voluntary Participation | Moderate to High (53-96.2%) | Recognized by majority of participants across multiple study populations [6]. |
| Risks and Side Effects | Low (6.9-87%) | Wide variability; only small minority demonstrated comprehension in several studies [6]. |
| Placebo Concepts | Low (64-65%) | Among least understood concepts alongside randomization [6]. |
| Randomization | Low (49.8%) | Comprehension was particularly low for this fundamental research concept [6]. |

Table 2: Effectiveness of Interventions to Improve Patient Comprehension in Informed Consent [7]

| Intervention Type | Number of Effective Interventions/Total Tested | Success Rate | Key Characteristics |
|---|---|---|---|
| Verbal Discussion with Test/Feedback | 3/3 | 100% | Included teach-back components and comprehension assessment [7]. |
| Interactive Digital | 11/13 | 85% | Used computer, tablet, or phone applications with interactive features [7]. |
| Multicomponent | 2/3 | 67% | Combined elements from multiple intervention categories [7]. |
| Audiovisual | 15/27 | 56% | Included videos, 3D models, and non-interactive digital content [7]. |
| Written | 6/14 | 43% | Simplified documents or supplementary written materials [7]. |
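Table 2 uses simple vote counting: each success rate is the number of interventions with a statistically significant effect divided by the number tested. The sketch below recomputes the rates from the table's counts. Note that the 100% figure for verbal discussion rests on only three studies, and vote counting ignores effect sizes.

```python
# (effective, tested) counts per intervention type, taken from Table 2
interventions = {
    "Verbal discussion with test/feedback": (3, 3),
    "Interactive digital": (11, 13),
    "Multicomponent": (2, 3),
    "Audiovisual": (15, 27),
    "Written": (6, 14),
}

def success_rate(effective: int, tested: int) -> int:
    """Percent of tested interventions that were effective, rounded to a whole number."""
    return round(100 * effective / tested)

# Rank intervention types by the unrounded proportion of effective interventions
ranked = sorted(interventions.items(), key=lambda kv: kv[1][0] / kv[1][1], reverse=True)
for name, (eff, tested) in ranked:
    print(f"{name}: {eff}/{tested} = {success_rate(eff, tested)}%")
```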

Experimental Protocols for Assessing Comprehension Deficits

Protocol 1: Comprehensive Comprehension Assessment

Objective: To quantitatively measure patient understanding of all key informed consent elements following standard consent processes.

Materials:

- Standardized informed consent document

- Validated comprehension assessment questionnaire

- Demographic data collection form

Procedure:

- Participant Recruitment: Recruit clinical trial participants or patients undergoing medical procedures, sizing the sample to the study's requirements (reported sample sizes range from 29 to 1,835 participants) [6].

- Informed Consent Process: Conduct standard informed consent process as per institutional protocols.

- Comprehension Assessment: Administer comprehension questionnaire within 24 hours of consent process, assessing:

  - Understanding of risks, benefits, and alternatives

  - Comprehension of randomization procedures

  - Awareness of placebo concepts

  - Knowledge of freedom to withdraw

  - Understanding of voluntary participation

- Data Analysis: Calculate percentage of correct responses for each consent component. Categorize comprehension levels as high (>70%), moderate (50-70%), or low (<50%) [6].
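The data-analysis step reduces to a per-component percentage and a three-level label. In this sketch, responses are assumed to be coded True for a correct answer, and exactly 50% and exactly 70% are treated as moderate, which is one reading of the protocol's ranges.

```python
def comprehension_level(percent_correct: float) -> str:
    """Protocol cut-offs: high (>70%), moderate (50-70%), low (<50%)."""
    if percent_correct > 70:
        return "high"
    if percent_correct >= 50:
        return "moderate"
    return "low"

def score_components(responses: dict) -> dict:
    """responses maps a consent component to a list of True/False answers (True = correct)."""
    summary = {}
    for component, answers in responses.items():
        pct = 100 * sum(answers) / len(answers)
        summary[component] = (round(pct, 1), comprehension_level(pct))
    return summary

# Hypothetical answers from four participants
print(score_components({
    "randomization": [True, False, False, True],
    "freedom_to_withdraw": [True, True, True, True],
}))
```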

Protocol 2: Intervention Efficacy Testing

Objective: To evaluate the effectiveness of various interventions in improving patient comprehension in informed consent.

Materials:

- Randomization schedule

- Intervention materials (based on type: digital, written, audiovisual, etc.)

- Control materials (standard consent process)

- Validated comprehension assessment tool

Procedure:

- Study Design: Use a randomized controlled trial design where feasible; the evidence base also includes non-randomized controlled trials [7].

- Participant Allocation: Randomly assign participants to intervention or control groups.

- Intervention Implementation:

  - Interactive Digital Group: Utilize computer or tablet applications with interactive features allowing navigation through educational modules [7].

  - Verbal with Teach-Back: Conduct consent discussion with test/feedback components where comprehension is assessed and information repeated based on understanding [7].

  - Audiovisual Group: Implement video-based consent materials or 3-dimensional anatomical models [7].

  - Written Group: Provide simplified consent documents or supplementary written materials [7].

  - Control Group: Standard informed consent process.

- Outcome Measurement: Assess comprehension scores immediately after intervention and at delayed time points (>24 hours) [7].

- Statistical Analysis: Compare comprehension scores between intervention and control groups using appropriate statistical tests.
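The protocol calls for a randomization schedule but does not specify a method. Permuted-block randomization is one common choice that keeps arm sizes balanced as enrollment proceeds; the arm labels, block size, and seed below are hypothetical. A real trial would also need allocation concealment and, typically, a validated randomization system.

```python
import random

# Hypothetical arm labels mirroring the intervention groups above
ARMS = ["interactive_digital", "verbal_teach_back", "audiovisual", "written", "control"]

def block_randomization(n: int, arms: list, block_size: int, seed: int) -> list:
    """Permuted-block allocation: every full block contains each arm equally often."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    schedule = []
    while len(schedule) < n:
        block = list(arms) * (block_size // len(arms))
        rng.shuffle(block)  # random order within the block
        schedule.extend(block)
    return schedule[:n]  # the final block may be partially used

schedule = block_randomization(23, ARMS, block_size=10, seed=2024)
print(schedule[:10])
```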

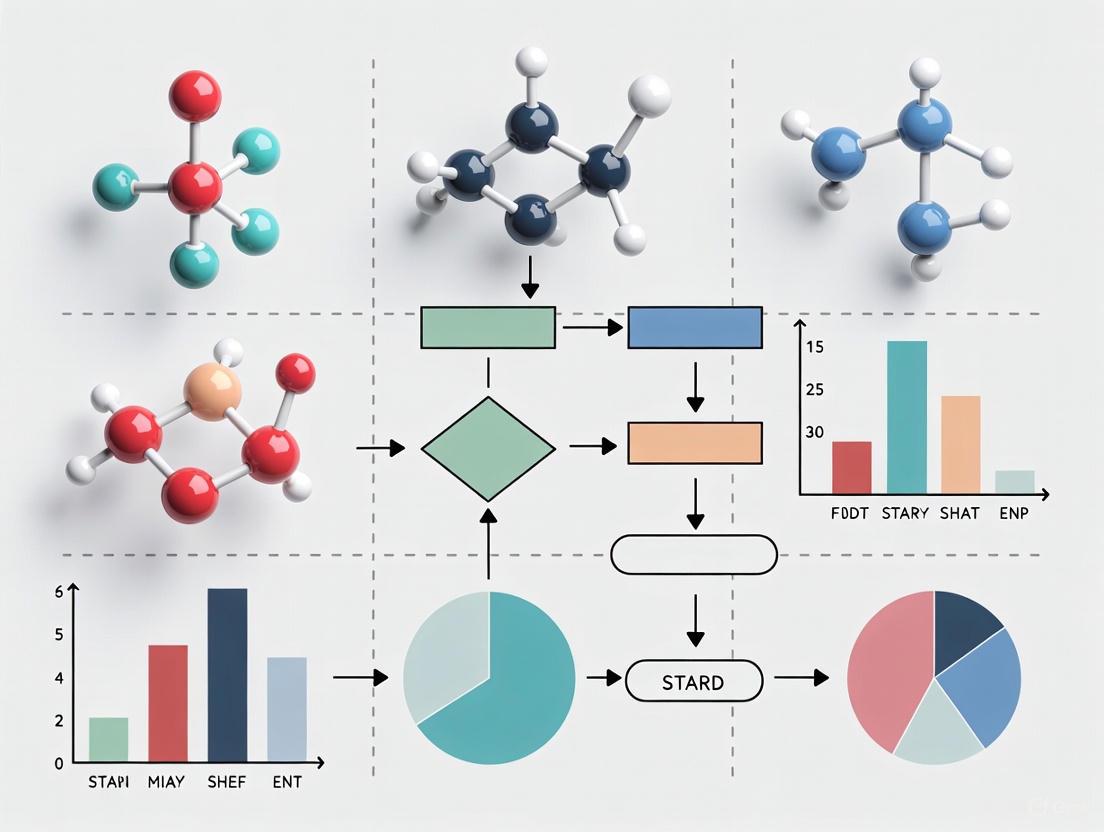

Research Workflow and Conceptual Framework

Research Workflow: This diagram illustrates the systematic approach to identifying, assessing, and addressing comprehension deficits in informed consent processes, moving from empirical observation to evidence-based protocols.

Conceptual Framework of Comprehension Deficits

Deficit Framework: This conceptual map illustrates the multifactorial nature of comprehension deficits in informed consent, showing contributing factors, specific manifestations, and evidence-based solutions.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Methods for Comprehension Deficit Research

| Research Tool | Function/Application | Protocol Specifications |
|---|---|---|
| Validated Comprehension Questionnaires | Quantitative assessment of understanding of specific consent components [6]. | Should cover risks, benefits, alternatives, randomization, placebo concepts, and voluntary participation. |
| Interactive Digital Platforms | Computer, tablet, or phone applications with interactive features to enhance engagement [7]. | Must include navigation controls, knowledge checks, and adaptive content delivery. |
| Teach-Back Protocol Guides | Standardized scripts for implementing teach-back methodology in consent discussions [7]. | Include specific prompts for assessing comprehension and structured feedback mechanisms. |
| Multi-Component Intervention Kits | Combined approaches using written, audiovisual, and interactive elements [7]. | Should be tailored to specific patient populations and clinical contexts. |
| Simplified Consent Documents | Consent forms written at appropriate literacy levels with enhanced visual design [7]. | Target reading level of 6th-8th grade; use clear headings and visual aids. |
| Audiovisual Consent Materials | Video recordings, 3D models, and visual aids to complement verbal explanations [7]. | Duration 5-15 minutes; include closed captioning; use realistic scenarios. |

Key Empirical Findings and Research Gaps

The evidence reveals significant disparities in comprehension across different elements of informed consent, with particularly poor understanding of fundamental research concepts like randomization and placebo effects [6]. Interactive interventions, especially those incorporating test/feedback or teach-back components, demonstrate superior efficacy in addressing these deficits [7]. Future research should prioritize vulnerable populations and explore the relative importance of different intervention components throughout development.

Application Note: The Scope of the Comprehension Gap

Informed consent is a cornerstone of ethical clinical research, based on the principle of patient autonomy. However, extensive empirical evidence reveals that participants' comprehension of key clinical trial concepts is critically low, creating significant ethical and practical challenges for researchers and drug development professionals.

Quantitative Assessment of Understanding Gaps

Table 1: Patient Comprehension Levels of Core Informed Consent Components

| Consent Component | Comprehension Range | Key Findings | Citations |
|---|---|---|---|
| Placebo Concepts | 4.8% - 65% | The lowest understanding among all components; one systematic review found only a small minority of patients demonstrated comprehension. | [8] [6] [9] |
| Randomization | 10% - 96% | Understanding is highly variable; a large meta-analysis found a pooled proportion of 39.4%; consistently identified as poorly understood. | [8] [6] [9] |
| Risks & Side Effects | 7% - 100% | Varies dramatically between studies; one review found only 20% of oncology patients could name a risk; understanding of uncertainty of benefits is particularly low. | [6] [7] [9] |
| Voluntary Participation | 21% - 96% | Generally higher understanding, though one study noted a significant disparity between urban (85%) and rural (21%) participants. | [8] [9] |
| Freedom to Withdraw | 63% - 100% | One of the best-understood components, though understanding of withdrawal consequences remains low (44%). | [8] [9] |

Implications for Drug Development

These comprehension gaps undermine the ethical validity of consent and pose significant challenges for clinical trial quality. Participants who do not understand randomization or placebos may become non-adherent or drop out if assigned to a control arm, potentially compromising trial integrity. Furthermore, the inability to comprehend risks challenges the fundamental principle of respect for persons in research ethics.

Experimental Protocol: Assessing Comprehension

Validated Assessment Methodology

Protocol Title: Quantitative Assessment of Informed Consent Comprehension in Clinical Trial Populations

Background: This protocol outlines a standardized method for evaluating patient understanding of randomization, risks, and placebo concepts during the informed consent process, based on empirically tested approaches.

Materials and Equipment:

- Informed Consent Comprehension Assessment Quiz (20-item true/false format)

- Data collection forms (electronic or paper)

- Multilingual versions as required by study population

- Visual aids for key concepts (optional, for enhanced understanding)

Procedure:

Quiz Administration Timing:

- Administer the comprehension quiz after the initial informed consent discussion but before trial enrollment.

- Implement repeat assessments at regular intervals (e.g., every 6 months) throughout long-term trials to evaluate knowledge retention.

Administration Conditions:

- Conduct in a quiet, private setting to minimize distractions.

- Offer the quiz in the participant's preferred language.

- Allow participants to refer to the consent form during assessment, if study design permits.

Scoring and Enrollment Criteria:

- Establish a predetermined passing score (e.g., ≥16/20 correct responses).

- Participants failing to meet the threshold should receive targeted education on misunderstood concepts and retake the quiz.

Data Analysis:

- Calculate pass rates for each quiz administration.

- Perform subgroup analyses based on education level, primary language, and clinical trial experience.

- Use multivariate regression to identify factors predictive of poor comprehension.

Validation Notes: This method was successfully implemented in a three-year HIV clinical trial in Botswana with 1,835 participants, demonstrating feasibility in large, international collaborations [10]. The re-administration of quizzes throughout the trial was found to reinforce key concepts and improve long-term understanding.
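The scoring and enrollment rules above can be sketched for a 20-item true/false quiz. The pass mark of 16 is the protocol's example threshold; the list of missed items is what would drive targeted re-education before a retake, and pass rates are computed per administration (for example, at enrollment and at each 6-month follow-up). The round names and scores below are hypothetical.

```python
PASS_MARK = 16  # example threshold from the protocol (>= 16 of 20 correct)

def score_quiz(answers: list, key: list) -> tuple:
    """Return (score, indices of missed items) for a true/false quiz."""
    if len(answers) != len(key):
        raise ValueError("answers and key must be the same length")
    missed = [i for i, (a, k) in enumerate(zip(answers, key)) if a != k]
    return len(key) - len(missed), missed

def pass_rates(scores_by_round: dict, pass_mark: int = PASS_MARK) -> dict:
    """Fraction of participants at or above the pass mark for each administration."""
    return {rnd: sum(s >= pass_mark for s in scores) / len(scores)
            for rnd, scores in scores_by_round.items()}

key = [True] * 20
answers = [i not in (2, 7, 11) for i in range(20)]  # three items answered incorrectly
score, missed = score_quiz(answers, key)
print(score, missed, score >= PASS_MARK)
print(pass_rates({"enrollment": [17, 15, 20, 16], "month_6": [18, 19, 14, 16]}))
```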

Conceptual Workflow for Comprehension Assessment

Diagram 1: Sequential workflow for assessing and improving patient comprehension throughout the clinical trial lifecycle. This cyclical process emphasizes ongoing education and protocol refinement based on participant understanding.

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Informed Consent Comprehension Research

| Tool Category | Specific Examples | Function & Application | Evidence Base |
|---|---|---|---|
| Assessment Metrics | Quality of Informed Consent (QuIC) survey; 20-item true/false quizzes; Multiple-choice questionnaires | Quantitatively measure understanding of specific consent components; Enable standardized evaluation across populations | [6] [10] [9] |
| Enhanced Consent Tools | Visual aids with simple graphics; Pictorial information sheets; Laminated visual timelines | Improve comprehension in low-literacy populations; Communicate complex concepts (randomization, placebo) visually | [11] [12] |
| Interactive Digital Platforms | Computer/tablet applications with interactive features; Navigable educational modules | Actively engage patients in learning process; Allow self-paced review of complex concepts | [7] |
| Low-Literacy Communication Aids | Consent forms at <8th grade reading level; Teach-back techniques; Simplified sentence structure | Ensure accessibility for participants with varying literacy levels; Confirm understanding through participant explanation | [11] [7] |
| Multilingual Resources | Translated consent forms; Bilingual data collectors; Culturally adapted visual aids | Address language barriers; Ensure accurate comprehension across diverse populations | [11] [10] |

Experimental Protocol: Implementing Enhanced Consent Processes

Multicomponent Intervention Strategy

Protocol Title: Enhanced Informed Consent Process for Low-Literacy and Vulnerable Populations

Background: This protocol implements a theory-based, multicomponent approach to improve understanding of randomization, risks, and placebo concepts, specifically designed for populations with limited health literacy.

Materials and Equipment:

- Simplified consent forms (8th grade reading level or lower)

- Visual aids developed with graphic design input

- Standardized explanation guide for consent administrators

- Training materials for data collectors

- Space with minimal distractions for consent discussions

Procedure:

Staff Training and Certification:

- Conduct 4-hour training sessions on low-health-literacy communication techniques.

- Include 4-6 hours of mock-consent practice sessions.

- Implement certification process with study coordinator before staff interact with participants.

Pre-Consent Preparation:

- Arrange environment to minimize distractions (turn off television, provide child supervision).

- Confirm participant's language preference and provide appropriate materials.

Enhanced Consent Process:

- Begin with open-ended question to assess initial understanding ("What interested you about this study?").

- Use visual aids to explain key concepts: timeline graphics for study duration, simple graphics for randomization, placebo, and risks.

- Employ low-literacy communication techniques: avoid jargon, speak slowly, maintain eye contact, periodically check for understanding.

- Utilize teach-back method: ask participants to explain concepts in their own words.

Ongoing Reinforcement:

- Reinforce key concepts at each study visit, particularly before procedures.

- Use visual aids consistently throughout study participation.

- Implement brief comprehension checks at regular intervals.

Implementation Notes: This multicomponent approach was successfully implemented in pediatric obesity trials (NET-Works and GROW) with underserved populations, demonstrating improved comprehension of complex trial concepts [11]. The combination of simplified text, visual aids, and interactive teach-back methods addresses multiple learning styles and literacy levels.

Intervention Efficacy Framework

Diagram 2: Evidence-based intervention strategies and their relative effectiveness in addressing comprehension gaps. Interactive methods with test/feedback components demonstrate superior efficacy compared to standard approaches.

The evidence consistently demonstrates critical gaps in patient understanding of randomization, risks, and placebo concepts in clinical trials. These deficiencies challenge the ethical foundation of informed consent and may impact trial integrity. However, validated assessment protocols and enhanced consent processes—particularly interactive, multimodal approaches—show significant promise in bridging these comprehension gaps.

Future research should prioritize standardized assessment metrics, explore culturally adapted interventions for diverse populations, and investigate the longitudinal impact of improved comprehension on trial retention and adherence. Integrating these evidence-based strategies into routine practice is essential for maintaining the ethical viability of contemporary clinical research.

Variability in Comprehension Across Medical Specialties and Patient Populations

Application Notes: The Current Evidence Base

The Fundamental Comprehension Deficit

Systematic reviews of patient comprehension in informed consent reveal a fundamental deficit in patient understanding. Research indicates that participants' comprehension of core informed consent components is consistently low across medical specialties, undermining the ethical principle of autonomy in clinical practice [13]. Understanding of voluntary participation and the right to withdraw is relatively high (often exceeding 50-63%), but comprehension of more complex concepts varies widely and can be remarkably poor: reported understanding ranges from 10% to 96% across studies for randomization and from 13% to 97% for placebo concepts, and some studies report that only 7% of patients understood the risks associated with clinical trial participation [13].

Variation Across Specialty Contexts

The clinical context and specialty type significantly influence comprehension levels. Available evidence suggests notable differences:

- Oncology: Some studies report >90% understanding of study purpose but variable understanding of risks and alternatives [13].

- Infectious Disease/Vaccine Trials: Comprise approximately 42% of informed consent comprehension research [13].

- Rheumatology vs. Ophthalmology: Demonstrated significant specialty-specific variation in understanding placebo concepts, with rheumatology participants showing higher comprehension (49%) compared to ophthalmology groups (13%) [13].

Effective Intervention Strategies

Recent evidence categorizes and evaluates intervention effectiveness for improving comprehension:

Table 1: Effectiveness of Comprehension Intervention Types

| Intervention Category | Statistically Significant Improvement | Key Characteristics |
|---|---|---|
| Verbal Discussion with Test/Feedback or Teach-Back | 100% (3/3 studies) | Includes explicit assessment of comprehension domains with repeated information based on understanding [7] |
| Interactive Digital Interventions | 85% (11/13 studies) | Computer, tablet, or phone applications with interactive features [7] |
| Multicomponent Interventions | 67% (2/3 studies) | Combines multiple delivery methods (written, audiovisual, verbal) [7] |
| Audiovisual Interventions | 56% (15/27 studies) | Videos, 3-dimensional models, audio/video recordings [7] |
| Written Interventions | 43% (6/14 studies) | Simplified documents, supplemental materials with limited graphics [7] |

Interactive interventions that incorporate test/feedback or teach-back components demonstrate particularly strong effects, suggesting that active assessment and correction of misunderstandings is crucial [7].

Document Readability and Comprehensibility

The technical presentation of consent materials directly affects comprehension. An analysis of cardiovascular disease patient education materials from leading national organizations found that most materials exceed recommended readability levels, with a mean Flesch-Kincaid Grade Level of 10.0 ± 1.3 (goal: grade 7) and a mean Flesch Reading Ease of 54.9 ± 6.8, which corresponds to "fairly difficult to read" (goal: >70) [14]. Comparative analysis showed significant differences between organizations, with one major heart association's materials being "significantly more difficult to read and comprehend, were longer, and had more complex words" than another cardiovascular organization's materials [14].

Experimental Protocols

Protocol 1: Comparative Comprehension Assessment Across Specialties

Purpose

To quantitatively assess and compare patient comprehension of informed consent elements across multiple medical specialties and identify specialty-specific comprehension patterns.

Materials and Reagents

Table 2: Research Reagent Solutions for Comprehension Assessment

| Item | Function | Specifications |
|---|---|---|
| Validated Comprehension Assessment Questionnaire | Measures understanding of core consent elements | 15-item multiple choice format; covers risks, benefits, alternatives, voluntary nature, procedures [15] |
| Standardized Consent Form Template | Ensures consistency in information presentation | Adjusted to specialty context; 4-5 page target length; Flesch-Kincaid Reading Level ≤8.0 [15] |
| Demographic and Health Literacy Assessment Tool | Characterizes participant population and moderating variables | Collects age, education, health literacy (e.g., REALM or NVS), prior research experience [7] |
| Secure Data Collection Platform | Maintains data integrity and confidentiality | Electronic survey system with encrypted data storage; REDCap or equivalent [15] |

Procedure

- Participant Recruitment: Recruit consecutive patients or research participants from at least three distinct clinical specialties (e.g., oncology, cardiology, rheumatology) who have completed the informed consent process for a procedure or clinical trial.

- Randomization: Randomize participants by visit date to receive either standard consent forms or experimental concise forms (if testing form length effects).

- Consent Process: Administer the standardized consent process specific to each clinical context.

- Comprehension Assessment: Immediately after consent discussion (within 1 hour), administer the comprehension assessment questionnaire without reference to consent documents.

- Data Collection: Record comprehension scores overall and by domain (risks, benefits, alternatives, procedures), plus demographic and health literacy data.

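- Analysis: Calculate total comprehension scores (0-15) and domain-specific scores. For two-group comparisons (e.g., standard vs. concise forms), use two-sample t-tests for continuous variables and Fisher's exact tests for categorical variables. For comparisons across three or more specialties, use one-way ANOVA. Perform multivariable regression to identify predictors of comprehension.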
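The analysis step calls for Fisher's exact test on categorical outcomes. Below is a minimal standard-library sketch for a 2×2 table. It assumes an illustrative layout that the protocol does not prescribe: rows are the two consent-form arms, and columns are correct versus incorrect answers on a single comprehension item. The helper name `fisher_exact_two_sided` is hypothetical. The two-sided p-value is the sum of the hypergeometric probabilities of every table with the same margins that is no more probable than the observed one.

```python
from math import comb


def fisher_exact_two_sided(table):
    """Two-sided Fisher's exact test for a 2x2 table [[a, b], [c, d]]."""
    (a, b), (c, d) = table
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability that the top-left cell equals x with all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    observed = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Include every table no more probable than the observed one (small tolerance for rounding).
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= observed * (1 + 1e-9))
    return min(p, 1.0)


# Hypothetical item: 3 of 4 correct with the concise form vs. 1 of 4 with the standard form.
print(fisher_exact_two_sided([[3, 1], [1, 3]]))
```

In practice a statistics package would normally run this test; the hand-rolled version just makes the calculation explicit. The tolerance in the comparison stops floating-point rounding from dropping tables whose probability equals the observed one.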

Specialized Considerations

- Timing: Assessment should occur within 1 hour of consent process to test immediate recall [7].

- Blinding: Research personnel administering assessments should be blinded to intervention group when testing different consent formats.

- Specialty Matching: Ensure comparable complexity of procedures across specialties when making cross-specialty comparisons.

Protocol 2: Intervention Efficacy Testing for Comprehension Improvement

Purpose

To develop and test the efficacy of targeted interventions for improving patient comprehension in informed consent across diverse patient populations.

Procedure

- Intervention Development: Create interventions across the five categories identified in Table 1 (written, audiovisual, interactive digital, multicomponent, and teach-back).

- Participant Stratification: Stratify participants by health literacy level, education, and preferred language to ensure diverse representation.

- Randomization: Randomize participants to receive either standard consent process or enhanced intervention.

- Baseline Assessment: Administer demographic and health literacy assessment.

- Intervention Delivery: Implement the assigned consent intervention with standardized timing and delivery method.

- Comprehension Measurement: Use the same validated comprehension assessment across all groups to enable direct comparison.

- Secondary Measures: Assess participant satisfaction, anxiety, and perceived understanding using Likert scales.

- Data Analysis: Compare comprehension scores between intervention and control groups using appropriate statistical tests (t-tests, ANOVA). Conduct subgroup analysis by health literacy level and education.
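The stratification and randomization steps can be combined in a stratified permuted-block scheme. The sketch below rests on several assumptions: 1:1 allocation, blocks of four, and hypothetical field names (`health_literacy`, `education`, `language`) for the stratification variables. The blocks keep the two arms balanced within each stratum as enrollment proceeds. The fixed seed only makes the example reproducible. A live trial would normally generate and conceal the schedule in a validated randomization system rather than in ad hoc code.

```python
import random
from collections import Counter, defaultdict


def stratified_block_randomize(participants, strata_keys, block_size=4, seed=2025):
    """Assign each participant to 'standard' or 'enhanced' consent within their stratum.

    participants: dicts with an "id" plus the stratification fields (hypothetical names).
    strata_keys: fields whose combined values define a stratum.
    """
    if block_size < 2 or block_size % 2:
        raise ValueError("block_size must be a positive even number for 1:1 allocation")
    rng = random.Random(seed)  # seeded so the allocation schedule can be reproduced
    pending = defaultdict(list)  # stratum -> assignments left in its current block
    allocation = {}
    for person in participants:
        stratum = tuple(person[k] for k in strata_keys)
        if not pending[stratum]:
            # Start a new permuted block with equal numbers of each arm.
            block = ["standard", "enhanced"] * (block_size // 2)
            rng.shuffle(block)
            pending[stratum] = block
        allocation[person["id"]] = pending[stratum].pop()
    return allocation


people = [
    {"id": f"P{i:02d}", "health_literacy": "limited" if i % 2 else "adequate",
     "education": "secondary", "language": "en"}
    for i in range(8)
]
arms = stratified_block_randomize(people, ["health_literacy", "education", "language"])
print(Counter(arms.values()))
```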

The experimental workflow for this protocol is illustrated below:

Protocol 3: Readability and Comprehensibility Optimization

Purpose

To systematically evaluate and optimize the readability and comprehensibility of informed consent documents and patient education materials.

Procedure

- Material Collection: Gather current informed consent documents and patient education materials from targeted clinical specialties.

- Readability Assessment: Calculate readability metrics using established formulas:
  - Flesch Kincaid Readability Ease (FKRE)
  - Flesch Kincaid Grade Level (FKGL)
  - Simple Measure of Gobbledygook (SMOG)
  - Gunning Fog Score (GFS)

- Content Analysis: Evaluate content for inclusion of all essential elements (risks, benefits, alternatives, procedures) and identify areas of excessive complexity or missing information.

- Material Revision: Revise materials to achieve target readability levels (FKRE >70, FKGL ≤7) while maintaining all essential content.

- Comprehension Testing: Test original and revised materials with representative patient populations using standardized comprehension assessments.

- Iterative Refinement: Use patient feedback to further refine materials until comprehension targets are achieved.
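The four readability formulas listed above can be computed directly from sentence, word, and syllable counts. The sketch below uses the standard published coefficients. Its tokenization and vowel-group syllable counter, however, are simplifying heuristics (assumptions, not part of the protocol), so results can differ slightly from dedicated tools such as Readability Studio. Two further caveats apply. SMOG was designed for samples of 30 or more sentences. This version also counts every word of three or more syllables as "complex" for the Gunning Fog score, without the usual exclusions for proper nouns and suffixes.

```python
import math
import re


def count_syllables(word):
    """Rough vowel-group heuristic; dictionary-based counters are more accurate."""
    w = word.lower().strip("'")
    n = len(re.findall(r"[aeiouy]+", w))
    # Treat a final silent 'e' as non-syllabic ("reduce") but keep "-le" ("simple") and "-ee".
    if w.endswith("e") and not w.endswith(("le", "ee")) and n > 1:
        n -= 1
    return max(n, 1)


def readability(text):
    """Return FKRE, FKGL, SMOG, and Gunning Fog scores for a block of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = [count_syllables(w) for w in words]
    n_sent, n_words = len(sentences), len(words)
    n_poly = sum(1 for s in syllables if s >= 3)  # polysyllabic ("complex") words
    wps = n_words / n_sent          # words per sentence
    spw = sum(syllables) / n_words  # syllables per word
    return {
        "FKRE": 206.835 - 1.015 * wps - 84.6 * spw,
        "FKGL": 0.39 * wps + 11.8 * spw - 15.59,
        "SMOG": 1.0430 * math.sqrt(n_poly * 30 / n_sent) + 3.1291,
        "GFS": 0.4 * (wps + 100 * n_poly / n_words),
    }


sample = "You may stop at any time. Your care will not change if you stop."
scores = readability(sample)
print({name: round(value, 1) for name, value in scores.items()})
print("Meets FKRE > 70 and FKGL <= 7:", scores["FKRE"] > 70 and scores["FKGL"] <= 7)
```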

The relationship between readability metrics and comprehension outcomes can be visualized as follows:

The Scientist's Toolkit: Essential Research Reagents

Table 3: Essential Research Reagents for Comprehension Studies

| Reagent/Tool | Function | Implementation Specifications |
|---|---|---|
| Validated Comprehension Questionnaire | Primary outcome measurement | Must cover all core consent elements: risks, benefits, alternatives, procedures, voluntary nature; 15-item multiple choice format recommended [15] |
| Health Literacy Assessment Tools | Characterizes participant capability | REALM (Rapid Estimate of Adult Literacy in Medicine) or NVS (Newest Vital Sign) for efficient screening [7] |
| Readability Analysis Software | Quantifies document complexity | Automated tools integrated with word processors; calculate FKRE, FKGL, and other metrics [14] |
| Standardized Consent Templates | Ensures consistency across groups | Target length 4-5 pages; reading level ≤8th grade; eliminates repetition and unnecessary detail [15] |
| Interactive Digital Platforms | Delivery of enhanced consent interventions | Tablet or computer-based systems with interactive features; allow navigation through educational modules [7] |
| Multidimensional Assessment Battery | Captures secondary outcomes | Measures satisfaction, anxiety, perceived understanding; uses Likert scales and standardized instruments [7] |

These protocols and tools provide a comprehensive framework for investigating the variability in comprehension across medical specialties and patient populations, with particular relevance to informed consent research in clinical trials and therapeutic interventions.

The Impact of Inadequate Comprehension on Research Integrity and Patient Autonomy

Application Notes: Quantifying the Comprehension Gap in Informed Consent

Informed consent (IC) serves as a foundational pillar of ethical clinical research, ensuring patient autonomy and protecting research integrity. However, empirical evidence consistently reveals significant gaps in participant comprehension, which can undermine these ethical objectives. The following data synthesizes key findings from recent studies investigating comprehension levels and the efficacy of interventions designed to address them.

| Participant Group | Sample Size (n) | Mean Objective Comprehension Score (%) | Comprehension Classification | Satisfaction Rate (%) |
|---|---|---|---|---|
| Minors (12-13 years) | 620 | 83.3 (SD 13.5) | Adequate | 97.4 |
| Pregnant Women | 312 | 82.2 (SD 11.0) | Adequate | 97.1 |
| Adults (Millennials & Gen X) | 825 | 84.8 (SD 10.8) | High | 97.5 |

Key Findings: A 2025 cross-sectional study demonstrated that electronic Informed Consent (eIC) materials developed following i-CONSENT guidelines—using co-creation and multi-format presentation (layered web content, narrative videos, infographics)—achieved high comprehension and satisfaction across diverse populations in Spain, the UK, and Romania [16]. This suggests that tailored, participant-centric approaches can effectively uphold patient autonomy. Furthermore, demographic factors influenced outcomes; women/girls consistently outperformed men/boys, and prior participation in a clinical trial was unexpectedly associated with lower comprehension scores, indicating a need for tailored engagement strategies for returning participants [16].

| Disease Site | Number of IC Forms Analyzed | Mean Reading Grade Level |
|---|---|---|
| All Gynecologic Cancers | 103 | 13.0 |
| Ovarian Cancer | 41 | 13.0 |
| Endometrial Cancer | 21 | 12.0 |
| Cervical Cancer | 14 | 12.9 |
| Vulvar/Vaginal Cancer | 3 | 12.8 |

Key Findings: A 2025 retrospective analysis revealed a critical barrier to comprehension and enrollment: informed consent forms for gynecologic oncology trials consistently required a mean 13th-grade (college-level) reading ability [17]. This far exceeds the American Medical Association and National Institutes of Health recommendations that patient materials should be written at a 6th- to 8th-grade level [17]. This complexity creates a significant disparity, as patients with limited English proficiency are significantly less likely to enroll in clinical trials, thereby threatening both the integrity of research through unrepresentative samples and the autonomy of underserved patients [17].

| Consent Form Type | Total Pages | Total Word Count | Flesch-Kincaid Reading Grade Level | Resulting Comprehension |
|---|---|---|---|---|
| Standard Form | 14 | 5,716 | 8.9 | No significant difference vs. concise form |
| Concise Form | 4 | 2,153 | 8.0 | No significant difference vs. standard form |

Key Findings: A comparison of standard and concise consent forms in a Phase I bioequivalence study found that reducing length and complexity did not significantly change comprehension scores among healthy volunteers [15]. The concise form was produced by eliminating repetition and unnecessary detail. This suggests that the shorter form did not compromise understanding. It also suggests that better readability alone may not be sufficient to guarantee understanding; other factors, such as presentation format and participant engagement, are critical.

Experimental Protocols

Objective: To develop and evaluate the effectiveness of electronic informed consent (eIC) materials in improving comprehension and satisfaction among minors, pregnant women, and adults in a multinational context.

Workflow Overview:

Detailed Methodology:

- Material Elaboration (Steps 1-3):
  - Guidelines: eIC materials are developed following the i-CONSENT guidelines to ensure comprehensibility and accessibility [16].
  - Co-Creation: A multidisciplinary team (physicians, epidemiologists, sociologists) collaborates with the target population (e.g., minors, pregnant women) through design thinking sessions and surveys to ensure materials are relevant and engaging [16].
  - Multimodal Formats: Materials are presented in multiple, accessible formats on a digital platform, including:
    - Layered web content for accessing additional details.
    - Narrative videos (e.g., storytelling for minors).
    - Printable documents with integrated images.
    - Customized infographics for complex topics [16].

- Cross-Cultural Implementation (Step 4): Materials are professionally translated (e.g., to English, Romanian) with attention to contextual appropriateness and local customs [16].

- Study Execution (Steps 5-7):
  - Recruitment: A cross-sectional study design is used to recruit participants from the target groups (e.g., 620 minors, 312 pregnant women, 825 adults) across multiple countries [16].
  - Delivery: Participants review the eIC materials via a digital platform, self-selecting their preferred format(s) [16].
  - Assessment: Comprehension is measured using an adapted Quality of the Informed Consent (QuIC) questionnaire, which includes:
    - Part A: Objective comprehension (multiple-choice, scored as low <70%, moderate 70-80%, adequate 80-90%, high ≥90%).
    - Part B: Subjective comprehension (5-point Likert scale).
  - Satisfaction & Usability: Measured via Likert scales and specific usability questions, with scores ≥80% deemed acceptable [16].

- Data Analysis: Multivariable regression models are applied to identify demographic and experiential predictors of comprehension (e.g., gender, age, prior trial experience) [16].
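The Part A bands can be applied mechanically once items are scored. The sketch below assumes that the score is percent correct. The published bands share endpoints (70-80%, 80-90%), so it also assumes those boundaries resolve upward: 80% counts as adequate and 90% as high. The answer key and function names are hypothetical.

```python
def percent_correct(answers, key):
    """Percentage of Part A items answered correctly."""
    if not key or len(answers) != len(key):
        raise ValueError("answers and key must be non-empty and of equal length")
    return 100 * sum(a == k for a, k in zip(answers, key)) / len(key)


def classify_objective_comprehension(score_pct):
    """Map a Part A percentage to the study's bands (boundary handling is an assumption)."""
    if not 0 <= score_pct <= 100:
        raise ValueError("score must be a percentage from 0 to 100")
    if score_pct < 70:
        return "low"
    if score_pct < 80:
        return "moderate"   # 70 <= score < 80
    if score_pct < 90:
        return "adequate"   # 80 <= score < 90
    return "high"           # score >= 90


answer_key = ["b", "a", "d", "c", "a"]  # hypothetical five-item key
score = percent_correct(["b", "a", "d", "a", "a"], answer_key)
print(score, classify_objective_comprehension(score))
```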

Objective: To quantitatively evaluate the readability of traditional Informed Consent (IC) forms for gynecologic cancer clinical trials against national recommended standards.

Workflow Overview:

Detailed Methodology:

- Sample Collection: Conduct a retrospective analysis of all informed consent forms from gynecologic cancer clinical trials opened at an institution over a defined period (e.g., 5 years). Data extracted includes cancer type and trial sponsor [17].

- Text Preparation: The text from the IC forms is prepared for analysis by removing all identifying information (e.g., institution names, principal investigator names) and non-prose elements (e.g., checkboxes, signatures) that could skew readability metrics [17].

- Readability Analysis: The prepared text is analyzed using specialized readability software (e.g., Readability Studio Professional Edition). The software runs multiple standardized readability tests [17].

- Benchmarking: The mean reading grade level from all tests is calculated for the entire cohort and stratified by disease site and sponsor. This result is compared against the recommended benchmark (6th-8th grade level) set by the AMA and NIH to quantify the gap [17].
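The benchmarking step reduces to grouped means. The sketch below takes each form's grade level to be the mean of the grade levels returned by the individual readability tests, so every form carries equal weight in the cohort mean. That weighting is an assumption. The data layout, field names, and sample values are hypothetical. The 8th-grade ceiling is the upper end of the AMA/NIH recommendation.

```python
from collections import defaultdict
from statistics import mean

TARGET_MAX_GRADE = 8.0  # upper end of the recommended 6th-8th grade range


def benchmark(forms, by="site"):
    """Cohort and grouped mean reading grade levels compared with the target.

    forms: dicts such as {"site": "ovarian", "sponsor": "sponsor_A", "grades": [12.4, 13.1]},
    where "grades" holds the grade level returned by each readability test for that form.
    """
    per_form = [(form[by], mean(form["grades"])) for form in forms]  # equal weight per form
    groups = defaultdict(list)
    for key, grade in per_form:
        groups[key].append(grade)
    cohort = mean(grade for _, grade in per_form)
    return {
        "cohort_mean": round(cohort, 1),
        "grades_above_target": round(cohort - TARGET_MAX_GRADE, 1),
        "by_" + by: {key: round(mean(g), 1) for key, g in groups.items()},
    }


forms = [  # hypothetical forms, not data from the cited study
    {"site": "ovarian", "sponsor": "sponsor_A", "grades": [12.0, 14.0]},
    {"site": "ovarian", "sponsor": "sponsor_B", "grades": [13.0, 13.0]},
    {"site": "cervical", "sponsor": "sponsor_A", "grades": [11.0, 13.0]},
]
print(benchmark(forms))
print(benchmark(forms, by="sponsor"))
```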

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Tools for Informed Consent Comprehension Research

| Tool / Reagent | Function / Application in IC Research |
|---|---|
| Adapted QuIC Questionnaire | A validated survey instrument tailored to specific trials and populations to quantitatively measure both objective and subjective comprehension [16]. |
| Readability Analysis Software | Specialized software (e.g., Readability Studio) that applies multiple standardized algorithms (e.g., Flesch-Kincaid) to calculate the grade level required to understand a text [17]. |
| Digital Consent Platform | A web-based system capable of delivering multi-format eIC materials (layered text, video, infographics) and capturing participant interaction data and responses [16]. |
| Co-Design Framework | A structured methodology (e.g., Design Thinking sessions) for involving patients, including vulnerable groups like minors and pregnant women, in the creation of IC materials to improve clarity and relevance [16]. |
| Color Contrast Checker | A tool (e.g., WebAIM's) to ensure that all text and graphical elements in digital and print materials meet WCAG minimum contrast ratios (4.5:1 for standard text), guaranteeing accessibility for users with low vision or color blindness [18] [19] [20]. |
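The contrast requirement in the last row can be checked with the WCAG relative-luminance formula. Below is a minimal sketch for `#RRGGBB` colors; the function names are illustrative. It covers only the 4.5:1 threshold for normal-size text named in the table. Large text and non-text elements have a lower WCAG threshold of 3:1.

```python
def _linear_channel(c8):
    """Convert an 8-bit sRGB channel to linear light as in the WCAG luminance definition."""
    c = c8 / 255
    # WCAG 2.2 uses 0.04045 (older text: 0.03928); no 8-bit value falls between them.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError("expected a #RRGGBB color")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear_channel(r) + 0.7152 * _linear_channel(g) + 0.0722 * _linear_channel(b)


def contrast_ratio(foreground, background):
    lighter, darker = sorted((relative_luminance(foreground), relative_luminance(background)),
                             reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background):
    """WCAG AA minimum for normal-size text is 4.5:1."""
    return contrast_ratio(foreground, background) >= 4.5


print(round(contrast_ratio("#767676", "#FFFFFF"), 2), passes_aa_normal_text("#767676", "#FFFFFF"))
```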

Assessment Tools and Techniques: Measuring and Improving Comprehension in Clinical Settings

Informed consent is a cornerstone of ethical clinical research and patient care: it is not merely a signature on a document but a process of understanding and autonomous decision-making. The quality of this process directly affects patient autonomy, research integrity, and ultimately, health outcomes. Within the broader thesis of assessing patient comprehension in informed consent research, the deployment of validated assessment instruments is critical for generating reliable, comparable data. This article provides a detailed overview of key quantitative tools, their application protocols, and the essential reagents that form the researcher's toolkit for rigorously evaluating the informed consent process.

Key Validated Assessment Instruments

The following table summarizes core instruments used to measure comprehension, decision-making, and contextual factors in informed consent research.

Table 1: Validated Instruments for Assessing Informed Consent Comprehension and Quality

| Instrument Name | Primary Construct Measured | Key Domains/Description | Example Context of Use |
|---|---|---|---|
| Quality of Informed Consent (QuIC) [21] [22] | Comprehension of Consent | Part A: Objective knowledge of study details (14 items). Part B: Perceived understanding (6 items). Maximum score: 80. | Used in a 2025 RCT to show equivalent comprehension between teleconsent and in-person consent (Mean scores not significantly different, P=0.29 for Part A and P=0.25 for Part B) [21]. |
| Decision-Making Control Instrument (DMCI) [21] [22] | Perceived Autonomy & Trust | Voluntariness, trust, decision self-efficacy (15 items). Maximum score: 30; higher scores indicate greater perceived autonomy. | Demonstrated no significant difference in perceived voluntariness between consent modalities (P=0.38) [21]. |
| Process & Quality of Informed Consent (P-QIC) [23] | Observed Consent Encounter Quality | Observational tool rating essential elements of information (e.g., risks, benefits) and communication (e.g., checking for understanding, using plain language). | Used in simulated and actual consent encounters to quantitatively identify strengths and weaknesses in the consent process [23]. |
| ComprehENotes [24] | Electronic Health Record (EHR) Note Comprehension | A 55-item test (with a 14-item short form) developed using Sentence Verification Technique (SVT) to assess a patient's ability to understand their own EHR clinical notes. | The first instrument specifically designed to measure EHR note comprehension, a key component of patient-facing research platforms and portals [24]. |
| Informed Consent Document Abstraction Tool [25] | Quality of Consent Documents | Checklist of 8 key elements defining a minimum standard for documents, including procedure-specific risks, benefits, and alternatives. | Developed for and used in a national cross-sectional study to evaluate the quality of consent forms for elective procedures [25]. |

Experimental Protocol: Comparing Consent Modalities

The following protocol is adapted from a recent randomized controlled trial (RCT) comparing telehealth and in-person informed consent [21] [22].

Objective

To evaluate the effectiveness of teleconsent versus traditional in-person consent by comparing participant comprehension and perceived decision-making quality.

Materials and Reagents

- Consent Documents: The study-specific informed consent form (ICF).

- Telehealth Platform: A secure, HIPAA-compliant video conferencing tool (e.g., Doxy.me) with screen-sharing and electronic signature capabilities [21].

- Assessment Instruments: QuIC, DMCI, and SAHL-E (Short Assessment of Health Literacy-English) surveys.

- Data Management System: A secure database (e.g., REDCap) for data entry and management.

Step-by-Step Methodology

- Participant Recruitment & Screening: Identify potential participants through institutional recruitment platforms or clinical registries. Contact individuals to assess eligibility and collect basic demographic information [21].

- Randomization: Randomly assign eligible participants to either the teleconsent or the in-person consent group.

- Consent Process:
  - Teleconsent Group: Conduct the consent session via the telehealth platform. The researcher shares the ICF on screen, reviews it collaboratively with the participant, and obtains a live, electronic signature. Verify identity by requiring video and using a timestamped screenshot feature [22].
  - In-Person Group: Conduct the consent session in a private office. Provide a physical copy of the ICF for review and signature.

- Baseline Data Collection: Immediately following the consent session, administer the QuIC, DMCI, and SAHL-E surveys to all participants.

- Follow-Up Data Collection: Re-administer the QuIC and DMCI surveys to all participants 30 days after the initial consent session to assess knowledge retention and longitudinal perceptions [22].

- Data Analysis:
  - Use appropriate statistical tests (e.g., t-tests, ANOVA) to compare mean scores on the QuIC and DMCI between the teleconsent and in-person groups at both baseline and follow-up.
  - Control for potential confounding variables, such as baseline health literacy (SAHL-E score), in the analysis.
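Controlling for baseline health literacy can be done with a covariate-adjusted linear model (QuIC score ~ consent modality + SAHL-E score). The sketch below solves the least-squares normal equations with the standard library, which keeps the adjustment visible. The data are constructed for illustration. The sketch reports only the point estimate; in a real analysis, standard errors and p-values would come from a statistics package.

```python
from statistics import mean


def ols(X, y):
    """Least-squares coefficients via Gauss-Jordan elimination on (X'X) b = X'y."""
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))  # partial pivoting
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular (collinear predictors)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * p for x, p in zip(a[r], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def adjusted_modality_effect(quic, teleconsent, sahl_e):
    """Teleconsent minus in-person QuIC difference, holding SAHL-E score constant."""
    X = [[1.0, float(t), float(s)] for t, s in zip(teleconsent, sahl_e)]
    _intercept, effect, _slope = ols(X, [float(v) for v in quic])
    return effect


# Constructed data: 1 = teleconsent, 0 = in-person; QuIC = 50 + 3 * modality + 2 * SAHL-E.
group = [0, 0, 0, 1, 1, 1]
sahl_e = [10, 12, 14, 11, 13, 15]
quic = [70, 74, 78, 75, 79, 83]
unadjusted = (mean(q for q, g in zip(quic, group) if g)
              - mean(q for q, g in zip(quic, group) if not g))
print(unadjusted, round(adjusted_modality_effect(quic, group, sahl_e), 3))
```

These scores follow the stated equation exactly. The unadjusted difference between arms is therefore 5 points, while the adjusted estimate recovers the 3-point modality effect. The gap arises because the teleconsent arm in this toy data simply had higher health literacy.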

Figure 1: Experimental workflow for comparing consent modalities, from recruitment to data analysis.

The Scientist's Toolkit: Essential Research Reagents

For researchers designing studies in this field, the following tools and resources are indispensable.

Table 2: Essential Reagents for Informed Consent Assessment Research

| Tool/Resource | Category | Function & Application |
|---|---|---|
| QuIC & DMCI Surveys [21] [22] | Validated Questionnaires | Quantify participant comprehension and perceived autonomy/trust. The core dependent variables for many study designs. |
| Health Literacy Tool Shed [26] [27] | Online Database | An online, curated database of health literacy measures to help researchers select the most appropriate instrument for their study population and goals. |
| P-QIC Tool [23] | Observational Checklist | Allows for the direct, quantitative assessment of the consent process (both information and communication quality) as it occurs, either live or via recording. |
| Readability Analyzer (e.g., SMOG, Readability Studio) [26] [17] | Software/Formula | Assesses the reading grade level of informed consent documents. Critical for ensuring materials meet the recommended 6th-8th grade level, which forms often exceed (one study found a mean 13th-grade reading level) [17]. |
| Sentence Verification Technique (SVT) [24] | Methodology | A procedure for generating reliable reading comprehension questions from a source text (e.g., an EHR note or consent form), used in developing instruments like ComprehENotes. |

Assessing the Quality of Informed Consent Documents

Beyond assessing the process with participants, evaluating the quality of the consent document itself is a critical step. The abstraction tool developed by Yale–New Haven Health Center for Outcomes Research and Evaluation provides a validated framework for this [25].

Protocol for Document Quality Assessment

- Document Collection: Obtain a representative sample of informed consent documents for elective procedures from the target institution(s).

- Rater Training: Train at least two independent raters using the tool's manual to ensure consistent application of the criteria.

- Abstraction Process: Raters review each document against the 8-item checklist, which captures the presence or absence of key elements. Key domains include [25]:
  - Content: Procedure-specific benefits, material risks, and alternatives to the procedure.
  - Presentation: Use of clear language and readability.
  - Timing: Evidence that the document was provided to the patient in advance of the procedure.

- Analysis: Calculate the percentage of documents that meet each of the 8 criteria. Inter-rater reliability can be calculated (e.g., item-level agreement of 92%-100% was achieved in the original study) [25].
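The reliability and analysis steps can be scripted per checklist item. The sketch below computes raw percent agreement, the statistic reported in the original study. It also computes Cohen's kappa as an optional chance-corrected companion that the source does not mention. Both assume two raters coding each element as present (1) or absent (0). The codings shown are hypothetical.

```python
def percent_agreement(rater1, rater2):
    """Share of documents on which two raters coded an item identically."""
    if not rater1 or len(rater1) != len(rater2):
        raise ValueError("ratings must be non-empty and paired")
    return sum(a == b for a, b in zip(rater1, rater2)) / len(rater1)


def cohens_kappa(rater1, rater2):
    """Cohen's kappa for present (1) / absent (0) codes on one checklist item."""
    po = percent_agreement(rater1, rater2)
    n = len(rater1)
    p1, p2 = sum(rater1) / n, sum(rater2) / n
    pe = p1 * p2 + (1 - p1) * (1 - p2)  # agreement expected by chance
    if pe == 1:
        return None  # both raters used a single category; kappa is undefined
    return (po - pe) / (1 - pe)


def share_meeting_criterion(consensus_codes):
    """Percentage of documents in which the element was judged present."""
    return 100 * sum(consensus_codes) / len(consensus_codes)


rater_a = [1, 1, 0, 0]  # hypothetical codes for "alternatives to the procedure" on 4 forms
rater_b = [1, 0, 0, 0]
print(percent_agreement(rater_a, rater_b), cohens_kappa(rater_a, rater_b))
```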

Figure 2: Logical framework for assessing informed consent document quality across three core domains.

The rigorous assessment of patient comprehension in informed consent is achievable through a suite of specialized, validated instruments. As evidenced by recent research, these tools are vital for evaluating emerging practices like teleconsent, demonstrating that digital solutions can maintain standards of understanding and ethical engagement while improving accessibility [21]. The consistent application of tools like the QuIC, DMCI, P-QIC, and document abstraction checklists enables the generation of high-quality, comparable data. This empirical approach is fundamental to refining the informed consent process, upholding the principle of patient autonomy, and ensuring that the conduct of clinical research remains both scientifically and ethically sound.

Within the domain of informed consent research, ensuring genuine patient comprehension of information presented in clinical trials remains a significant challenge. Empirical studies consistently reveal that a substantial proportion of clinical trial participants demonstrate limited understanding of core consent components, including concepts of randomisation, placebo, and potential risks [13]. Often, patients remain confused about their healthcare plans after discharge, and most do not recognize their own lack of comprehension [28]. The teach-back method emerges as a robust, evidence-based technique to verify understanding actively. It is a structured communication process where patients are asked to repeat in their own words the information and instructions just conveyed by their healthcare provider [29]. This method serves as a practical and verifiable tool for researchers and clinicians aiming to uphold the ethical principle of autonomy by ensuring that consent is not merely obtained, but truly understood.

Quantitative Evidence of Effectiveness

The effectiveness of the teach-back method is supported by a body of empirical research across diverse clinical settings. The tables below synthesize key quantitative findings, highlighting its impact on comprehension, recall, and clinical outcomes.

Table 1: Impact of Teach-Back on Patient Comprehension and Knowledge

| Outcome Measure | Study Design/Setting | Results | Citation |
|---|---|---|---|
| Immediate Recall & Comprehension | Prospective cohort study, Emergency Department (ED) | Patients receiving teach-back had significantly higher scores on knowledge of diagnosis (p<0.001) and follow-up instructions (p=0.03). The proportion with a comprehension deficit dropped from 49% to 11.9%. | [30] |
| Short-Term Knowledge Retention | Prospective cohort study, ED (2-4 day follow-up) | The teach-back group maintained higher comprehension scores on three out of four domains. The mean score increase was 6.3% versus 4.5% in the control group. | [30] |
| Disease-Specific Knowledge | Systematic Review | In multiple studies, participants answered most questions correctly after interventions that included teach-back. Knowledge improvement was not always statistically significant at long-term follow-up. | [28] |
| Medication Comprehension | Controlled Trial, ED | Patients with limited health literacy who received teach-back scored higher on medication comprehension compared to standard discharge. | [28] |

Table 2: Impact of Teach-Back on Health Services Outcomes

| Outcome Measure | Study Design/Setting | Results | Citation |
|---|---|---|---|
| Hospital Readmissions | Pre-post intervention study (Coronary Artery Bypass Grafting patients) | 30-day readmission rates decreased from 25% pre-intervention to 12% post-intervention (p=0.02) after implementing teach-back. | [28] [29] |
| Hospital Readmissions | Pre-post intervention study (Heart Failure patients) | Readmission rates at 12 months improved from 59% in the non-teach-back group to 44% in the teach-back group (p=0.005). | [28] |
| Patient Satisfaction | Systematic Review | Six out of ten studies examining patient satisfaction, including HCAHPS survey scores, indicated improved satisfaction with medication education, discharge information, and health management. | [28] |

Application Notes and Protocols for Research Settings

Integrating the teach-back method into informed consent processes and clinical trial protocols requires a structured approach to ensure fidelity and consistency. The following protocols provide a framework for implementation.

Core Protocol: Implementing the 5Ts of Teach-Back

The 5Ts framework (Triage, Tools, Take Responsibility, Tell Me, Try Again) offers a standardized protocol for executing teach-back effectively [31].

- Triage: Identify the one to three most critical concepts that the patient must understand and remember from the interaction. In an informed consent context, this could include the purpose of the research, the concept of randomisation, or key potential risks. Use a "chunk and check" approach, delivering one chunk of information before checking for understanding [31].

- Tools: Employ aids to assist in providing a clear explanation. This may include reader-friendly consent forms, simple diagrams illustrating the trial design, pill charts for medication schedules, or anatomical models. The choice of tool should be tailored to the individual's needs and the complexity of the information [31].

- Take Responsibility: Use a non-shaming approach to ask for the teach-back. The researcher assumes responsibility for the clarity of the explanation using phrases such as, "I want to be sure I explained that clearly. Could you please explain it back to me in your own words so I know I did a good job?" [32] [31].

- Tell Me: Ask the patient or research participant to state in their own words what they understood. The ask must be specific. For example, "In your own words, can you tell me what you would do if you experienced side effect X?" or "What will you tell your family about this clinical trial?" Avoid questions that can be answered with a simple "yes" or "no" [29] [31].

- Try Again: If the participant is unable to explain the concept correctly or completely, the researcher must re-explain the information using alternate, plain-language words or different tools. This cycle of re-explanation and re-checking continues until the participant demonstrates accurate understanding [31].
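The 5Ts describe a chunk-and-check loop that is straightforward to model, for instance when building a training simulator or a structured tracking log. In the sketch below, the participant's answer and the staff member's judgment of accuracy are supplied as callables, because in practice both are human steps. The `max_attempts` cap is an assumption; the protocol itself says to continue until understanding is demonstrated. A concept left unresolved at the cap is therefore flagged for follow-up rather than treated as understood. All names are hypothetical.

```python
from dataclasses import dataclass, field

TAKE_RESPONSIBILITY_PROMPT = (
    "I want to be sure I explained that clearly. "
    "Could you please explain it back to me in your own words?"
)


@dataclass
class TeachBackRecord:
    concept: str
    attempts: int = 0
    understood: bool = False
    explanations_used: list = field(default_factory=list)


def run_teach_back(concepts, explanations, participant_explains, is_accurate, max_attempts=3):
    """Chunk-and-check loop following the 5Ts.

    concepts: the one to three priority concepts chosen at Triage.
    explanations: concept -> alternative plain-language explanations (Tools, Try Again).
    participant_explains: callable(concept, prompt) -> answer in the participant's words (Tell Me).
    is_accurate: callable(concept, answer) -> bool, the staff member's judgment.
    """
    if not 1 <= len(concepts) <= 3:
        raise ValueError("Triage: focus on one to three key concepts")
    log = []
    for concept in concepts:
        record = TeachBackRecord(concept)
        for wording in explanations[concept][:max_attempts]:
            record.attempts += 1
            record.explanations_used.append(wording)  # explain this chunk, then check it
            answer = participant_explains(concept, TAKE_RESPONSIBILITY_PROMPT)
            if is_accurate(concept, answer):
                record.understood = True
                break  # move to the next chunk only once this one is understood
        log.append(record)
    return log


def needs_follow_up(log):
    """Concepts still not understood after the allowed attempts."""
    return [r.concept for r in log if not r.understood]
```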

Experimental Protocol: Assessing Comprehension in Informed Consent

This protocol is adapted from empirical studies on consent comprehension and teach-back efficacy [13] [30].

- Objective: To quantitatively assess the impact of the teach-back method on participants' immediate and short-term comprehension of key informed consent components.

- Study Design: Prospective cohort study or randomized controlled trial comparing standard consent process (control) versus consent process augmented with teach-back (intervention).

- Population: Adult participants (or guardians for pediatric studies) eligible for a clinical trial.

- Intervention Arm:
  - The researcher delivers the consent information using standard procedures.
  - The researcher then implements the 5Ts teach-back protocol for the pre-specified key concepts (e.g., purpose, randomisation, risks, right to withdraw).

- Control Arm: The researcher delivers the consent information using standard procedures without a structured teach-back.

- Outcome Measurement:
  - Immediate Assessment: Conducted immediately after the consent process. A researcher not involved in the consent session administers a standardized questionnaire.
  - Delayed Assessment: Conducted 2-7 days later via a telephone follow-up by a blinded researcher, using the same questionnaire.
  - Assessment Tool: A questionnaire designed with multiple-choice or true/false items targeting comprehension of specific elements:
    - Research purpose and nature.
    - Voluntary participation and freedom to withdraw.
    - Concept of randomisation.
    - Use of placebo (if applicable).
    - Potential risks and benefits.
    - Safety procedures and emergency contacts.

- Data Analysis: Compare mean comprehension scores and the proportion of participants with correct understanding for each component between the intervention and control groups at both time points, using appropriate statistical tests (e.g., t-tests, chi-square).
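For the per-component comparison of proportions, a Pearson chi-square test on a 2×2 table needs only the standard library. With one degree of freedom, the upper-tail probability equals `erfc(sqrt(chi2 / 2))`. The sketch below omits the continuity correction. The input layout and component names are hypothetical. When any expected cell count is small (commonly below 5), Fisher's exact test is the usual alternative.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square without continuity correction for [[a, b], [c, d]] (df = 1).

    Rows: teach-back vs. control; columns: correct vs. incorrect on one consent component.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / denom
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p


def compare_components(results):
    """results: component -> ((intervention_correct, intervention_n), (control_correct, control_n))."""
    out = {}
    for component, ((ic, i_n), (cc, c_n)) in results.items():
        chi2, p = chi_square_2x2([[ic, i_n - ic], [cc, c_n - cc]])
        out[component] = {
            "intervention_pct": round(100 * ic / i_n, 1),
            "control_pct": round(100 * cc / c_n, 1),
            "chi2": round(chi2, 2),
            "p": p,
        }
    return out


example = {  # hypothetical counts of participants answering each item correctly
    "randomisation": ((24, 30), (15, 30)),
    "right to withdraw": ((28, 30), (27, 30)),
}
for component, stats in compare_components(example).items():
    print(component, stats)
```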

Implementation Strategies for Research Teams

Sustained implementation requires more than individual training; it demands system-level support [33].

- Training and Education of Stakeholders: Conduct interactive, multimodal training sessions for all research staff (investigators, clinical trial coordinators, nurses) involved in the consent process. Training should include didactic instruction, demonstration videos, and role-playing scenarios specific to clinical trial contexts [34] [33].

- Support for Clinicians: Implement ongoing support mechanisms such as refresher courses, coaching, and access to health literacy experts. Anderson et al. found that a single training session was insufficient for lasting mastery, highlighting the need for reinforced learning [34] [33].

- Audit and Feedback: Integrate teach-back fidelity checks into routine monitoring activities. A designated team member can observe consent sessions (with participant permission) and provide constructive feedback to staff on their use of the technique. Tracking the use of teach-back through logs can also be beneficial [33].
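The audit-and-feedback step could summarize observation checklists as per-staff adherence to each of the 5Ts. The record format below is hypothetical. Each observed session marks a step as done (`True`), omitted (`False`), or not applicable (`None`), for example Try Again when the first teach-back was already accurate. Steps absent from a record are also treated as not applicable.

```python
from collections import defaultdict

FIVE_TS = ("triage", "tools", "take_responsibility", "tell_me", "try_again")


def fidelity_by_staff(observations):
    """Per-staff adherence (%) to each 5Ts step across observed consent sessions."""
    # staff -> step -> [times done, times applicable]
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for obs in observations:
        for step in FIVE_TS:
            done = obs["steps"].get(step)  # None or missing: step did not apply
            if done is None:
                continue
            tally = tallies[obs["staff"]][step]
            tally[0] += int(done)
            tally[1] += 1
    return {
        staff: {step: round(100 * d / n, 1) for step, (d, n) in steps.items()}
        for staff, steps in tallies.items()
    }


sessions = [  # hypothetical observation records
    {"staff": "coordinator_A", "steps": {"triage": True, "tools": True, "take_responsibility": False,
                                         "tell_me": True, "try_again": None}},
    {"staff": "coordinator_A", "steps": {"triage": True, "tools": False, "take_responsibility": True,
                                         "tell_me": True, "try_again": True}},
]
print(fidelity_by_staff(sessions))
```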

The Scientist's Toolkit: Essential Reagents for Research

Table 3: Essential Materials and Tools for Implementing Teach-Back in Research

| Item/Tool | Function/Description | Application in Research Context |
|---|---|---|
| Simplified Consent Form | A version of the informed consent form written at a 6th-8th grade reading level, using plain language and short sentences. | Serves as the primary "Tool" for explanation. Improves baseline understanding before teach-back is initiated. [35] |
| Visual Aids & Diagrams | Illustrations of the trial design, randomisation process, or schedule of procedures. | Helps explain complex concepts like randomisation and blinding visually, making abstract ideas more concrete. [31] |
| Standardized Comprehension Assessment | A validated questionnaire (e.g., Quality of Informed Consent - QuIC) or a study-specific quiz. | Provides quantitative data on understanding of key consent components for outcome measurement. [13] |
| Teach-Back Evaluation & Tracking Log | A structured form for self-assessment or peer observation of teach-back performance. | Allows for monitoring fidelity to the protocol and quality improvement of the consent process. [29] |
| Role-Play Scenarios | Scripted examples of consent conversations for common trial types, including challenging questions. | Used for training and competency assessment of research staff in practicing the 5Ts. [34] |
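
A teach-back tracking log can be kept as simple structured records. The Python sketch below shows one hypothetical data structure for this purpose. It assumes the log records which of the 5Ts an observer saw in each session, taking the 5Ts to be Triage, Tools, Take responsibility, Tell me, and Try again. The step labels and the per-session fidelity score (the share of the five steps observed) are illustrative choices, not a validated fidelity measure.

```python
from dataclasses import dataclass, field

# Assumed 5Ts labels (Triage, Tools, Take responsibility, Tell me, Try again);
# replace with the items on the study's own observation form.
FIVE_TS = ("triage", "tools", "take_responsibility", "tell_me", "try_again")


@dataclass
class TeachBackObservation:
    """One observed (or self-assessed) consent session in the tracking log."""
    session_id: str
    observer: str
    steps_done: set = field(default_factory=set)

    def fidelity(self):
        """Share of the 5Ts observed in this session (0.0 to 1.0)."""
        return len(self.steps_done & set(FIVE_TS)) / len(FIVE_TS)


def log_summary(observations):
    """Mean fidelity across sessions and how many sessions skipped each step."""
    missed = {step: sum(step not in obs.steps_done for obs in observations) for step in FIVE_TS}
    mean_fidelity = sum(obs.fidelity() for obs in observations) / len(observations)
    return mean_fidelity, missed


# Hypothetical log entries from peer observation.
log = [
    TeachBackObservation("S01", "coordinator-A", set(FIVE_TS)),
    TeachBackObservation("S02", "coordinator-A", {"triage", "tools", "tell_me"}),
]
mean_fidelity, missed = log_summary(log)
print(mean_fidelity, missed)
```

Counting missed steps per item, rather than only an overall score, points the audit-and-feedback conversation at the specific step staff tend to skip.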

Interactive Interventions and Multimedia Approaches to Enhance Patient Engagement

Within the critical framework of assessing patient comprehension in informed consent research, traditional paper-based consent forms are increasingly recognized as insufficient. Persistent comprehension gaps undermine the ethical principle of autonomy, potentially affecting both participant welfare and study validity. This document outlines evidence-based application notes and detailed protocols for implementing interactive and multimedia interventions designed to enhance patient engagement and understanding during the informed consent process. The strategies detailed herein are synthesized from current literature and empirical studies, providing researchers, scientists, and drug development professionals with practical methodologies to improve participant comprehension.

Current Evidence and Quantitative Data Synthesis

Recent systematic reviews and cross-sectional evaluations provide growing evidence that digital and multimedia tools can improve comprehension and satisfaction during the consent process for clinical research. Their effect on actual enrollment, however, appears limited.

Table 1: Summary of Evidence on Digital and Multimedia Consent Interventions

| Study / Review Focus | Intervention Type | Key Findings on Comprehension | Key Findings on Satisfaction & Engagement |
|---|---|---|---|
| Digital Informed Consent (eIC) Evaluation (Fons-Martinez et al., 2025) [36] | Multimodal eIC (layered web content, narrative videos, infographics) | Mean objective comprehension scores >80% across all groups (Minors: 83.3%; Pregnant Women: 82.2%; Adults: 84.8%) [36] | Satisfaction rates exceeded 90% across all participant groups; format preferences varied (minors & pregnant women preferred videos, adults preferred text) [36] |
| Interventions for Research Decision Making (Systematic Review) [37] | Decision aids and communication tools (digital and print) | Interventions generally increased participant knowledge; little to no effect on actual trial participation rates [37] | Tools were found to be acceptable and useful for supporting decision-making [37] |
| AI for Consent Material Simplification (Waters, 2025) [38] | AI (LLM/GPT-4) generated plain-language summaries | AI-generated summaries significantly improved readability of complex consent forms from ClinicalTrials.gov [38] | Over 80% of surveyed participants reported enhanced understanding of a clinical trial [38] |
| Impact of Digital Health on Patient-Provider Relationship (Systematic Review) [39] | Broad digital health technologies (telemedicine, apps) | Digital tools can empower patients and promote more equitable relationships, but poor implementation risks depersonalization [39] | Maintaining trust requires transparent implementation and reliable technology that supports, rather than replaces, the therapeutic relationship [39] |

Detailed Experimental Protocols

Protocol for Implementing a Multimodal Electronic Informed Consent (eIC) Platform

This protocol is adapted from the successful multicountry evaluation by Fons-Martinez et al. (2025) [36].

1. Objective: To enhance participant comprehension and satisfaction during the informed consent process for a clinical trial by implementing a co-designed, multimodal electronic consent platform.

2. Materials and Reagents:

- Dedicated Web Platform: A secure, HIPAA/GDPR-compliant website or portal to host consent materials.

- Content Authoring Tools: Video recording/editing software (e.g., Adobe Premiere Pro, Camtasia), graphic design software (e.g., Adobe Illustrator, Canva), and web development tools.

- Multimedia Assets: As outlined in Table 1, including:
  - Layered web pages with expandable sections.
  - Narrative or Q&A-style videos.
  - Customized infographics.
  - Printable, simplified PDF documents.

- Comprehension Assessment Tool: A validated questionnaire, such as an adapted version of the Quality of Informed Consent (QuIC) questionnaire [36].

3. Methodology:

Step 1: Co-Creation and Content Development

- Convene a Multidisciplinary Team: Include clinical trial physicians, study coordinators, epidemiologists, communication specialists, and a patient engagement lead.

- Conduct Participatory Design Sessions: Organize separate focus groups or design thinking sessions with representatives from the target participant population (e.g., minors, pregnant women, older adults) [36].
  - Activity: Use mock consent forms to gather feedback on confusing terminology, desired information hierarchy, and preferred media formats.

- Develop Multimedia Content: Based on feedback, create the suite of consent materials. Ensure all content is accurate and consistent.
  - Videos: For minors, use a storytelling format; for other groups, consider question-and-answer simulations with clinicians [36].
  - Infographics: Visually summarize key study procedures, risks, benefits, and participant rights.
  - Layered Web Content: Present information in tiers, allowing users to click on complex terms (e.g., "randomization") for simple, pop-up definitions.
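
Layered content can be modeled as a section with three parts: an always-visible summary, an expandable detail tier, and a glossary of terms that receive pop-up definitions. The Python sketch below is a hypothetical data model. The `LayeredSection` class and the `[[term]]` marker are our own conventions, not part of any eIC platform. It marks only the first use of each glossary term, so a front end could attach the definition there without cluttering later mentions.

```python
import re
from dataclasses import dataclass, field


@dataclass
class LayeredSection:
    """One consent topic split into tiers (hypothetical structure)."""
    title: str
    summary: str   # tier 1: always shown
    detail: str    # tier 2: shown when the reader expands the section
    glossary: dict = field(default_factory=dict)  # lowercase term -> plain-language definition

    def render(self, expanded=False):
        body = self.summary + ("\n" + self.detail if expanded else "")
        return mark_first_uses(body, self.glossary)

    def definition(self, term):
        """Pop-up text for a clicked term, or None if it is not in the glossary."""
        return self.glossary.get(term.lower())


def mark_first_uses(text, glossary):
    """Wrap the first use of each glossary term in [[...]] so a front end can attach a pop-up."""
    if not glossary:
        return text
    # Longest terms first, so "placebo group" wins over "placebo" when both are listed.
    terms = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, terms)) + r")\b", re.IGNORECASE)
    seen = set()

    def wrap(match):
        key = match.group(1).lower()
        if key in seen:
            return match.group(0)
        seen.add(key)
        return f"[[{match.group(1)}]]"

    return pattern.sub(wrap, text)


section = LayeredSection(
    title="How your group is chosen",
    summary="You will be placed in a group by randomization.",
    detail="Randomization means a computer picks your group by chance, like flipping a coin.",
    glossary={"randomization": "Chance, not your doctor, decides your group."},
)
print(section.render(expanded=True))
```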

Step 2: Platform Integration and Testing

- Host all consent materials on the dedicated web platform. Ensure the interface allows users to switch freely between formats (text, video, graphics).

- Conduct usability testing with a small group from the target population to identify navigational or technical issues.

- Perform an accessibility audit using tools like WAVE or axe DevTools to ensure compliance with WCAG 2.2 guidelines, particularly for color contrast and screen reader compatibility [40].
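
Automated checkers such as WAVE and axe DevTools report contrast failures. The underlying WCAG calculation is also simple enough to check a design palette before any page is built. The Python sketch below implements the WCAG relative-luminance and contrast-ratio formulas and applies the Level AA thresholds for text: 4.5:1 for normal text and 3:1 for large text. It assumes six-digit hex colors. It covers only text contrast (success criterion 1.4.3), not the many other checks an accessibility audit performs. The linearization threshold uses the sRGB value 0.04045. Older WCAG text cites 0.03928, but for 8-bit channel values the two thresholds give identical results.

```python
def _linearize(channel_8bit):
    """sRGB channel (0-255) -> linear value, per the WCAG relative-luminance definition."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a '#rrggbb' color."""
    h = hex_color.lstrip("#")
    r, g, b = (_linearize(int(h[i:i + 2], 16)) for i in (0, 2, 4))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter of the two colors."""
    lighter, darker = sorted((relative_luminance(color_a), relative_luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(text_color, background, large_text=False):
    """WCAG Level AA text contrast: 4.5:1, or 3:1 for large text."""
    return contrast_ratio(text_color, background) >= (3.0 if large_text else 4.5)


# Example: checking hypothetical palette choices for body text on a white page.
for color in ("#767676", "#777777"):
    print(color, round(contrast_ratio(color, "#ffffff"), 2), passes_aa(color, "#ffffff"))
```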

Step 3: Implementation and Data Collection

- During the consent process, provide participants with access to the eIC platform before the formal consent discussion.

- After participants have reviewed the materials, administer the comprehension assessment (QuIC).
  - Part A (Objective Comprehension): Multiple-choice or true/false questions covering key aspects of the trial (purpose, procedures, risks, benefits, alternatives, voluntary participation). Score and categorize as low (<70%), moderate (70-80%), adequate (80-90%), or high (≥90%) [36].
  - Part B (Subjective Comprehension): Use a 5-point Likert scale for participants to self-rate their understanding.

- Administer a satisfaction survey using Likert scales to gauge the acceptability and perceived usefulness of the different multimedia formats.
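
Part A scoring and banding can be expressed in a few lines. The Python sketch below assumes an answer key indexed by item identifier. Because the stated ranges share their endpoints, it reads the bands as half-open intervals: low below 70%, moderate from 70% to under 80%, adequate from 80% to under 90%, and high at 90% and above. The item names are hypothetical, and the sketch does not reproduce the published QuIC scoring rules.

```python
from statistics import mean


def objective_score(answers, key):
    """Percentage of Part A items answered correctly; unanswered items count as wrong."""
    correct = sum(1 for item, right in key.items() if answers.get(item) == right)
    return 100 * correct / len(key)


def comprehension_band(score):
    """Assumed half-open bands for the categories named in the protocol."""
    if score < 70:
        return "low"
    if score < 80:
        return "moderate"
    if score < 90:
        return "adequate"
    return "high"


def subjective_mean(ratings):
    """Mean Part B self-rating on a 1-5 Likert scale."""
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("Likert ratings must be integers from 1 to 5")
    return mean(ratings)


# Hypothetical five-item true/false key and one participant's answers.
key = {"purpose": True, "randomized": True, "can_withdraw": True,
       "guaranteed_benefit": False, "other_options": True}
answers = {"purpose": True, "randomized": False, "can_withdraw": True,
           "guaranteed_benefit": False, "other_options": True}
score = objective_score(answers, key)
print(score, comprehension_band(score))
```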

Step 4: Data Analysis

- Use descriptive statistics (means, standard deviations, percentages) to summarize comprehension scores and satisfaction rates.

- Apply multivariable regression models to identify demographic predictors (e.g., age, gender, education, prior trial experience) of comprehension scores [36].
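
For this analysis step, descriptive summaries need only the standard library, and a multivariable linear model can be fitted by ordinary least squares. To stay dependency-free, the Python sketch below solves the normal equations with Gauss-Jordan elimination. It returns point estimates only, with no standard errors, confidence intervals, or p-values. Categorical predictors such as gender would first need dummy coding. For a real analysis, a statistics package (for example statsmodels, or R's `lm`) is the better choice. The data are hypothetical.

```python
from statistics import mean, stdev


def describe(values):
    """n, mean, and sample standard deviation of a list of scores."""
    return {"n": len(values), "mean": mean(values), "sd": stdev(values)}


def ols(predictors, outcome):
    """Fit outcome = b0 + b1*x1 + ... by ordinary least squares.

    predictors: one row of predictor values per participant (an intercept column is added).
    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination.
    """
    rows = [[1.0] + [float(v) for v in row] for row in predictors]
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * y for r, y in zip(rows, outcome))]
           for i in range(k)]
    for col in range(k):
        # Partial pivoting: swap up the row with the largest entry in this column.
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        if abs(aug[col][col]) < 1e-12:
            raise ValueError("predictors are collinear; X'X is singular")
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Hypothetical data: [age in years, years of education] and objective score (%).
X = [[30, 12], [45, 16], [60, 10], [25, 14], [50, 18]]
y = [80, 91, 82, 83, 96]
print(describe(y))
print(ols(X, y))  # [intercept, age coefficient, education coefficient]
```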

Protocol for AI-Assisted Simplification of Informed Consent Forms

This protocol is based on research into the use of Large Language Models (LLMs) to enhance clinical trial education [38].

1. Objective: To improve the readability and patient understanding of complex informed consent forms (ICFs) using an LLM-driven summarization approach.

2. Materials and Reagents:

- Source Document: The original, technically complex ICF from a registry like ClinicalTrials.gov.

- Large Language Model: Access to a state-of-the-art LLM such as GPT-4 via an API.

- Prompt Engineering Framework: A standardized set of instructions for the LLM.

3. Methodology:

Step 1: Sequential Summarization Workflow

- Input: Feed the original ICF into the LLM.

- Stage 1 - Extraction: Prompt the LLM to identify and extract key sections: Study Objectives, Procedures, Risks & Discomforts, Potential Benefits, Costs & Compensation, Alternatives to Participation.

- Stage 2 - Restructuring: For each extracted section, prompt the LLM to restructure the information into a clear, logical flow using short sentences and paragraphs.

- Stage 3 - Simplification: Prompt the LLM to replace complex medical jargon with plain, everyday language. Instruct it to define unavoidable technical terms in simple language upon first use.

- Output: Generate a final, consolidated plain-language summary.
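
The three-pass workflow can be expressed as a small pipeline. In the Python sketch below, `llm` is a hypothetical caller-supplied function that sends one prompt to whichever model API the team uses and returns the text reply. No vendor SDK is assumed, and the prompt wording is illustrative only. Keeping the prompts as named constants makes them easier to version as part of a standardized prompt-engineering framework. The consolidated output still requires the human review in Step 2.

```python
from typing import Callable

SECTIONS = ["Study Objectives", "Procedures", "Risks & Discomforts",
            "Potential Benefits", "Costs & Compensation", "Alternatives to Participation"]

# Hypothetical prompt templates; refine and version-control them per study.
EXTRACT = "From the consent form below, copy only the text of the section '{section}'.\n\n{document}"
RESTRUCTURE = ("Rewrite this section as a clear, logical sequence of short sentences "
               "and short paragraphs:\n\n{text}")
SIMPLIFY = ("Replace medical jargon with plain, everyday words. If a technical term cannot be "
            "avoided, define it simply the first time it appears:\n\n{text}")


def summarize_icf(document: str, llm: Callable[[str], str]) -> str:
    """Run extraction -> restructuring -> simplification for each section, then consolidate."""
    parts = []
    for section in SECTIONS:
        extracted = llm(EXTRACT.format(section=section, document=document))
        restructured = llm(RESTRUCTURE.format(text=extracted))
        simplified = llm(SIMPLIFY.format(text=restructured))
        parts.append(f"{section}\n{simplified}")
    return "\n\n".join(parts)


# Stand-in model that returns the last line of each prompt (tests the plumbing only).
def echo_llm(prompt: str) -> str:
    return prompt.strip().splitlines()[-1]


print(summarize_icf("Consent form text.", echo_llm))
```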

Step 2: Quality Control and Validation

- Human Review: A clinical expert and a health literacy expert must review the AI-generated summary for factual accuracy and completeness.

- Readability Assessment: Calculate the readability score of both the original ICF and the AI-generated summary using validated indices (e.g., Flesch-Kincaid Grade Level). The target should be a 6th-8th grade reading level.

- Pilot Testing: Test the summary with a small group of potential participants, using the comprehension assessment described in Step 3 of the eIC protocol above, and iterate based on feedback.
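
As a rough illustration of the readability check, the Python sketch below applies the Flesch-Kincaid Grade Level formula: 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59. Two simplifications apply. The syllable counter is a vowel-group heuristic, and the sentence splitter treats every ".", "!" or "?" as a boundary, so abbreviations such as "e.g." inflate the sentence count. Validated tools, such as the textstat package or the readability statistics in word processors, will give somewhat different numbers. Whichever tool is chosen, use the same one for the original ICF and the summary.

```python
import re


def count_syllables(word):
    """Heuristic syllable count: groups of vowels, minus a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text):
    """Flesch-Kincaid Grade Level = 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Hypothetical before/after text.
original = ("Participants will be randomized to receive either the investigational product "
            "or a matching placebo administered intravenously.")
summary = ("A computer will pick your group by chance. One group gets the study drug. "
           "The other gets a dummy drug.")
print(round(flesch_kincaid_grade(original), 1), round(flesch_kincaid_grade(summary), 1))
```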

Visualization of Workflows

Multimedia Electronic Consent (eIC) Implementation Workflow

AI-Assisted Consent Simplification Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Materials for Interactive Consent Research

| Item / Solution | Function / Application in Consent Research |
|---|---|
| Validated Comprehension Questionnaire (e.g., QuIC) | Provides a standardized, quantitative metric to objectively assess participant understanding of key trial concepts before and after an intervention [36]. |
| Multimedia Authoring Software | Enables the creation of engaging consent materials such as explainer videos, interactive diagrams, and infographics that cater to diverse learning styles [36]. |
| Secure Web Portal/Hosting Platform | Serves as the delivery mechanism for electronic consent (eIC) materials, ensuring accessibility across devices while maintaining data security and privacy [36]. |
| Large Language Model (LLM) API (e.g., GPT-4) | Used to automate the simplification of complex trial information into plain language summaries, improving baseline readability and accessibility [38]. |
| Accessibility Testing Tools (e.g., WAVE, Colour Contrast Analyser) | Critical for verifying that digital consent materials meet WCAG guidelines, particularly for color contrast, ensuring they are usable by individuals with visual impairments [40] [41]. |
| Participatory Design Framework | A structured methodology for involving patients and the public in the design of consent materials, ensuring the end product is relevant, clear, and user-friendly [36]. |

Within the broader context of assessing patient comprehension in informed consent research, the development of health literacy-appropriate consent forms presents a critical challenge and opportunity. Despite regulatory requirements for informed consent, studies consistently reveal significant comprehension gaps among research participants. A meta-analysis of 103 studies indicated that between 25% and 47% of clinical trial participants did not fully understand the implications of their participation, including its voluntary nature [42]. Only approximately half of participants understood fundamental trial concepts such as randomization or the role of placebos [42]. These comprehension deficits undermine the ethical foundation of informed consent and can impact trial enrollment and retention.

The 2018 revision to the U.S. Federal Common Rule responded to these challenges by mandating that consent forms "begin with a concise and focused presentation of the key information" most likely to assist prospective participants in understanding reasons for or against participation [43]. This regulatory shift emphasizes comprehension as the central goal of informed consent, moving beyond mere regulatory compliance toward meaningful participant understanding. This Application Note provides evidence-based design principles and implementation strategies to operationalize this mandate through health literacy-appropriate consent forms, with a specific focus on assessing and enhancing participant comprehension.

Design Principles for Health Literacy-Appropriate Consent

Foundational Pillars of Effective Consent