Lab Data Ethics: A Complete Guide to Responsible Management for Research Integrity

Abstract

This comprehensive guide establishes essential ethical frameworks for managing laboratory data. Tailored for researchers, scientists, and drug development professionals, it explores foundational principles like data integrity and FAIR principles, provides actionable methodologies for implementation, addresses common challenges in complex environments like AI integration, and offers validation strategies against global standards like ALCOA+. The goal is to ensure scientific reproducibility, compliance, and public trust in biomedical and clinical research.

Why Data Ethics Matter: Building Trust and Integrity in Scientific Research

Within the context of a broader thesis on ethical guidelines for data management in laboratory settings, this guide establishes a technical foundation. Modern research, particularly in drug development, generates complex, high-volume data. Ethical management transcends mere regulatory compliance; it is a core component of scientific integrity, ensuring data quality, reproducibility, and public trust. This document outlines core principles, provides implementable protocols, and defines the toolkit necessary for ethical data stewardship.

Core Ethical Principles for Laboratory Data Management

The following principles form the pillars of an ethical data management framework, addressing the entire data lifecycle from conception to archival.

| Principle | Technical & Operational Definition | Key Risk if Neglected |
|---|---|---|
| Integrity & Accuracy | Implementing systematic procedures for data capture, transformation, and analysis to prevent errors or loss. Includes version control, audit trails, and anti-tampering measures. | Irreproducible results, scientific retractions, flawed clinical decisions. |
| Security & Confidentiality | Applying technical controls (encryption, access controls) and administrative policies to protect sensitive data (e.g., PHI, proprietary compound structures) from unauthorized access or breach. | Data breaches, loss of intellectual property, violation of subject privacy (GDPR/HIPAA). |
| Stewardship & Provenance | Maintaining a complete, immutable record of data lineage: origin, custodians, processing steps, and transformations. Essential for auditability and reuse. | Inability to trace errors, compromised data utility for secondary research. |
| Transparency & Disclosure | Clear documentation of methodologies, algorithms, and any data manipulation. Full reporting of all results, including negative or contradictory data. | Publication bias, "cherry-picking" of results, hidden conflicts of interest. |
| Fairness & Non-Exploitation | Ensuring data collection and use does not unfairly target or disadvantage groups. Obtaining proper informed consent for human-derived data and respecting data sovereignty. | Ethical violations in human subjects research, biased AI/ML models, community harm. |

Quantitative Landscape: Data Volume and Compliance Incidents

Current estimates illustrate the scale of laboratory data and the risks associated with managing it.

Table 1: Recent Data on Research Data Volume and Security Incidents

| Metric | Estimated Figure (2023-2024) | Source / Context |
|---|---|---|
| Global Volume of Health & Biotech Data | ~2,314 Exabytes (EB) | Projection from industry reports on genomic, imaging, and clinical trial data. |
| Average Cost of a Healthcare Data Breach | $10.93 Million USD | IBM Cost of a Data Breach Report 2023; highest of any sector for the 13th consecutive year. |
| Percentage of Labs Citing Data Management as a Major Challenge | >65% | Survey of biopharma R&D teams on digital transformation hurdles. |
| FDA Warning Letters Citing Data Integrity Issues (FY2023) | ~28% of all GxP letters | Analysis of FDA enforcement reports, highlighting persistent ALCOA+ failures. |

Experimental Protocol: Implementing a Data Integrity Audit

This detailed protocol provides a methodology for proactively assessing data integrity within a laboratory information management system (LIMS) or electronic lab notebook (ELN).

Title: Internal Audit for Data Integrity Compliance (ALCOA+ Framework)

Objective: To verify that data generated within a specified experiment or process is Attributable, Legible, Contemporaneous, Original, Accurate, Complete, Consistent, Enduring, and Available (ALCOA+).

Materials: See "Scientist's Toolkit" below.

Procedure:

- Pre-Audit Planning:
  - Define the scope (e.g., specific assay data from Q4).
  - Assemble an audit team with relevant technical and process knowledge.
  - Notify the data custodians of the audit window.
- Data Sampling:
  - Use a risk-based approach to select a statistically relevant sample of final results.
  - Trace each final result backward through all processing steps to its raw data source.
- Attributability & Contemporaneity Check:
  - Verify every data entry and modification is linked to a unique user ID (no shared accounts).
  - Confirm timestamps are logical and sequential, with no evidence of back-dating.
- Originality & Accuracy Check:
  - Compare electronic records against any printed/hard copy annotations for discrepancies.
  - Verify that data was recorded directly by the instrument or manually at the time of the activity.
  - Check for evidence of unauthorized alterations or deletions.
- Completeness & Consistency Check:
  - Ensure all protocol-defined data points are present.
  - Confirm metadata (e.g., instrument calibration status, reagent lot numbers) is linked.
  - Verify calculations and transformations are consistent and documented.
- Enduring & Available Check:
  - Confirm data is backed up in a secure, unalterable format (e.g., WORM storage).
  - Verify recovery procedures are in place and tested.
  - Check that data retention policies comply with relevant regulations (e.g., 21 CFR Part 11, GDPR).
- Reporting:
  - Document all findings, including non-conformances.
  - Assign severity levels and root causes.
  - Develop a corrective and preventive action plan (CAPA).
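Parts of the attributability and contemporaneity checks can be scripted against an exported audit trail. The sketch below is illustrative only: it assumes each exported record carries a user ID, the activity time entered by the user, and the system log time. The `AuditRecord` fields, the `SHARED_ACCOUNTS` list, and the 24-hour tolerance are hypothetical choices to be replaced by your LIMS/ELN export format and SOP.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical list of generic or shared account names; maintain locally.
SHARED_ACCOUNTS = {"admin", "lab", "instrument", "guest"}


@dataclass
class AuditRecord:
    record_id: str
    user_id: str
    activity_time: datetime  # when the work was performed, as entered
    logged_at: datetime      # when the system wrote the audit-trail entry


def check_audit_trail(records, max_delay=timedelta(hours=24)):
    """Return (record_id, finding) tuples for attributability/contemporaneity review.

    Records are expected in the order the audit trail stored them.
    """
    findings = []
    previous_logged = None
    for rec in records:
        # Attributable: each entry must map to one named individual
        if not rec.user_id or rec.user_id.lower() in SHARED_ACCOUNTS:
            findings.append((rec.record_id, "not attributable to a unique user"))
        # Sequential: system log times should never run backwards
        if previous_logged is not None and rec.logged_at < previous_logged:
            findings.append((rec.record_id, "log timestamp out of sequence"))
        # An activity dated after its own log entry suggests an edited date
        if rec.activity_time > rec.logged_at:
            findings.append((rec.record_id, "activity time later than log time"))
        # Contemporaneous: flag entries recorded long after the activity
        elif rec.logged_at - rec.activity_time > max_delay:
            findings.append((rec.record_id, "not recorded contemporaneously"))
        previous_logged = rec.logged_at
    return findings
```

Each finding is a prompt for manual review and root-cause analysis in the Reporting step, not an automatic verdict.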

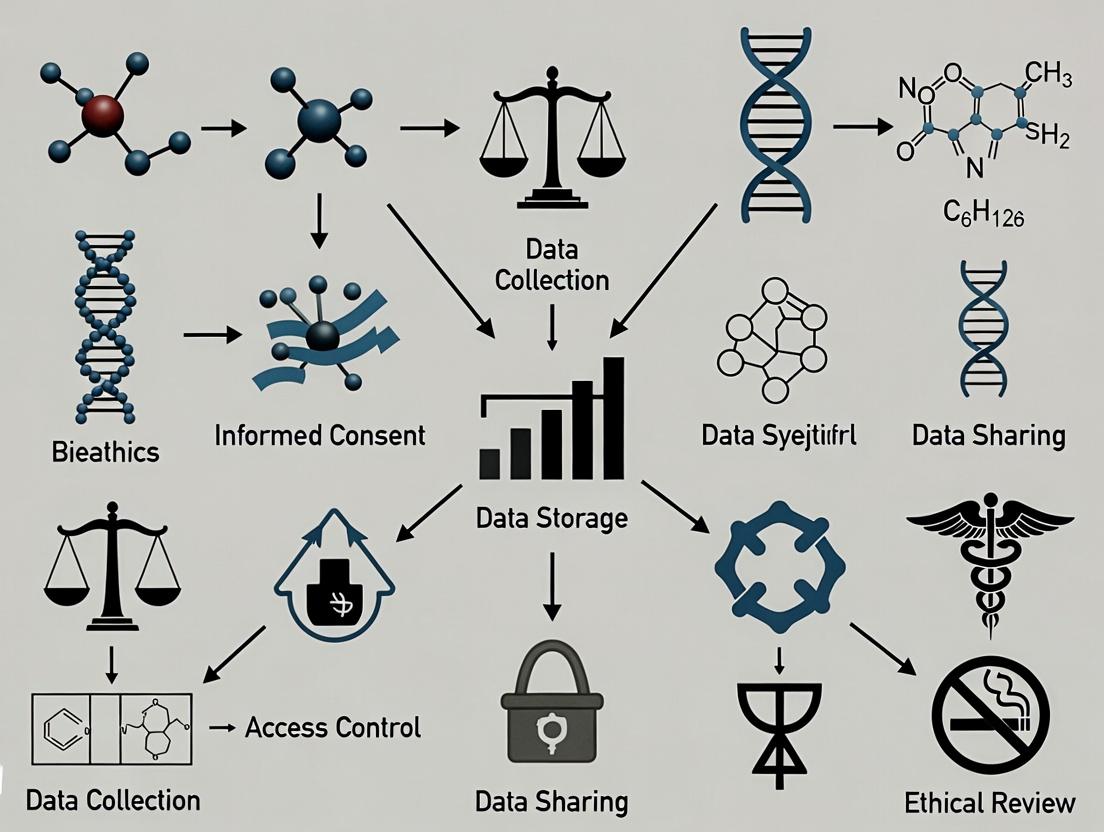

Diagram: Ethical Data Management Workflow

Title: Lifecycle of Ethically Managed Lab Data

The Scientist's Toolkit: Essential Reagents for Ethical Data Management

Table 2: Key Research Reagent Solutions for Data Integrity

| Item / Solution | Function in Ethical Data Management |
|---|---|
| Electronic Lab Notebook (ELN) | Primary system for recording experiments with user attribution, timestamps, and audit trails to ensure data originality and traceability. |
| Laboratory Information Management System (LIMS) | Tracks samples, associated data, and workflows, enforcing SOPs and maintaining complete data lineage (provenance). |
| 21 CFR Part 11 Compliant Software | Applications validated to meet FDA requirements for electronic records and signatures, ensuring legal acceptability. |
| Write-Once-Read-Many (WORM) Storage | Secures original data in an unalterable state, preserving integrity and meeting regulatory requirements for data endurance. |
| Data Encryption Tools (at-rest & in-transit) | Protects confidential data from unauthorized access, a core requirement for security and subject privacy. |
| Automated Data Backup & Recovery System | Ensures data availability and guards against loss due to system failure or catastrophe, a key stewardship duty. |
| Access Control & Identity Management | Manages user permissions based on role, enforcing the principle of least privilege and protecting data confidentiality. |
| Data Anonymization/Pseudonymization Tools | Enables the ethical reuse or sharing of human subject data by removing or masking personal identifiers. |

Defining and implementing these core principles is not a standalone IT exercise. Ethical data management must be integrated into the daily culture of the laboratory. It requires ongoing training, clear accountability, and leadership commitment. By adhering to these technical guidelines, researchers and drug development professionals uphold the highest standards of scientific integrity, accelerate discovery through reliable data, and fulfill their ethical obligation to research subjects, the scientific community, and society.

Within the broader thesis on ethical guidelines for data management in laboratory settings, this whitepaper examines the severe, tangible consequences of ethical lapses. For researchers, scientists, and drug development professionals, data integrity is not merely an abstract ideal but the bedrock of reproducible science, credible discovery, and sustained public trust. Failures in data ethics—spanning from poor record-keeping and p-hacking to outright fabrication—trigger a cascade of professional and institutional disasters, including manuscript retractions, loss of critical funding, and irreversible erosion of trust.

The following tables summarize recent data on the consequences of poor data practices.

Table 1: Primary Causes of Research Article Retractions (2018-2023)

| Retraction Cause | Approximate Percentage | Key Characteristics |
|---|---|---|
| Data Fabrication/Falsification | 43% | Invented or manipulated results, image duplication/manipulation. |
| Plagiarism | 14% | Duplicate text without attribution, self-plagiarism. |
| Error (Non-malicious) | 12% | Honest mistakes in data, analysis, or reporting. |
| Ethical Issues (e.g., lack of IRB approval) | 10% | Patient/animal subject violations, consent problems. |
| Authorship Disputes/Fraud | 8% | Unauthorized inclusion, fake peer reviews. |
| Other/Unspecified | 13% | Miscellaneous issues including legal concerns. |

Table 2: Consequences of Data Ethics Violations: Case Studies

| Consequence Type | Example Incident (Post-2020) | Outcome |
|---|---|---|
| Funding Loss | A prominent Alzheimer's disease research lab at a major U.S. university. | Federal funding agencies (NIH) suspended and clawed back millions in grants following findings of image manipulation in key papers. |
| Retraction Cluster | A cardiology research group. | Over 100 papers retracted due to data integrity concerns, invalidating clinical trial conclusions. |
| Legal & Career | A pharmaceutical development scientist. | Criminal conviction for falsifying preclinical trial data, leading to imprisonment and permanent career termination. |
| Institutional Reputation | Multiple oncology research centers. | Loss of public and commercial partnership trust, requiring years and stringent oversight reforms to rebuild. |

Experimental Protocols: Methodologies for Ensuring Data Integrity

To mitigate these high-stakes risks, laboratories must implement rigorous, standardized protocols. The following are detailed methodologies for key experiments and processes cited in data ethics literature.

Protocol 1: Systematic Image Data Acquisition and Analysis (Microscopy)

- Objective: To prevent selective reporting and manipulation of image data.
- Materials: Confocal/fluorescence microscope, standardized cell lines, automated image capture software, raw data storage server.
- Procedure:
  - Pre-Acquisition: Define all imaging parameters (exposure, gain, magnification) in the experimental plan. Use control samples to establish baseline settings.
  - Blinded Acquisition: Where feasible, the technician acquiring images should be blinded to sample identity/group.
  - Comprehensive Capture: Image entire wells/slides systematically using automated stage movement; avoid "cherry-picking" representative fields.
  - Raw Data Preservation: Save all original, unprocessed image files (e.g., .lif, .nd2, .czi) immediately to a secure, immutable server with audit trails.
  - Documented Processing: Any post-processing (background subtraction, thresholding) must be applied uniformly to all images within an experiment and detailed in the methods section.
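For the Raw Data Preservation step, one common way to make later alterations detectable is to record a cryptographic checksum of each original file when it is saved and to re-verify before analysis. The sketch below uses Python's standard hashlib to build a SHA-256 manifest for the vendor formats named above; the manifest layout (relative path to hex digest) and the function names are assumptions for illustration, not features of any microscope software.

```python
import hashlib
from pathlib import Path

RAW_EXTENSIONS = {".lif", ".nd2", ".czi"}  # vendor formats named in the protocol


def sha256_of(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large image stacks need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(raw_dir):
    """Map each raw image file (path relative to raw_dir) to its SHA-256 digest."""
    root = Path(raw_dir)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file() and p.suffix.lower() in RAW_EXTENSIONS
    }


def verify_manifest(raw_dir, manifest):
    """Report raw files that are missing, altered, or new since the manifest was built."""
    current = build_manifest(raw_dir)
    return {
        "missing": sorted(set(manifest) - set(current)),
        "altered": sorted(k for k in manifest if k in current and current[k] != manifest[k]),
        "added": sorted(set(current) - set(manifest)),
    }
```

Storing the manifest with the ELN entry, or on the same audited server, lets a reviewer confirm that the files analysed are the files acquired.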

Protocol 2: Principled Statistical Analysis and P-value Auditing

- Objective: To eliminate p-hacking and data dredging.
- Materials: Statistical software (R, Python, Prism), pre-registered analysis plan, version-controlled code repository.
- Procedure:
  - Pre-registration: Before data collection, deposit the experimental hypothesis, design, sample size calculation, and planned statistical tests in a public repository (e.g., OSF, ClinicalTrials.gov).
  - Data Lock: Once collection is complete, create a "locked" dataset version. All analyses must be performed on this version.
  - Scripted Analysis: Perform all analyses using executable scripts, not GUI-based point-and-click software, to ensure an auditable trail.
  - Audit Trail: Maintain code in a version control system (e.g., Git). All deviations from the pre-registered plan must be justified and documented in the manuscript.
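The Data Lock step is easier to audit if the locked version is identified by a content hash that every analysis script checks before running. This sketch assumes the dataset is held as a list of row dictionaries and hashes a canonical CSV serialization; recording the resulting hash in a pre-registration update or a Git tag message is one possible convention, not a requirement of any platform, and the function names are hypothetical.

```python
import csv
import hashlib
import io


def canonical_csv(rows):
    """Serialize rows deterministically: sorted column names, Unix line endings.

    Row order is kept, so reordering rows counts as a change.
    """
    columns = sorted({key for row in rows for key in row})
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow(row)  # absent columns are written as empty strings
    return buffer.getvalue()


def lock_dataset(rows):
    """SHA-256 of the canonical serialization: the identifier of the locked version."""
    return hashlib.sha256(canonical_csv(rows).encode("utf-8")).hexdigest()


def assert_locked(rows, expected_hash):
    """Return rows unchanged if they match the lock; raise ValueError otherwise."""
    actual = lock_dataset(rows)
    if actual != expected_hash:
        raise ValueError(
            f"dataset differs from locked version ({actual[:12]} vs {expected_hash[:12]})"
        )
    return rows
```

Calling `assert_locked` as the first line of each analysis script makes any silent edit to the data fail loudly instead of changing results.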

Protocol 3: Robust Data Management and Electronic Lab Notebook (ELN) Use

- Objective: To ensure traceability, prevent loss, and facilitate replication.
- Materials: Institution-approved ELN platform, standardized naming conventions, FAIR (Findable, Accessible, Interoperable, Reusable) data repositories.
- Procedure:
  - Daily Logging: Record all experimental procedures, observations, raw data file paths, and instrument outputs directly into the ELN on the day they are generated.
  - Metadata Standardization: Adopt community-standard metadata schemas and reporting guidelines (e.g., MIAME for microarray experiments, ARRIVE for animal research).
  - Linking: Link entries to specific project identifiers, funding sources, and protocol versions.
  - Regular Audits: Lab managers/PIs should conduct scheduled, random audits of ELN entries against physical lab books and raw data files.
  - Public Archiving: Upon publication, deposit de-identified raw data and analysis code in a public repository like GEO, PRIDE, or Figshare.
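The Daily Logging and naming-convention requirements can be spot-checked automatically. The sketch assumes a hypothetical convention, PROJECT_YYYYMMDD_INSTRUMENT_RUN.ext (for example PX01_20240110_PR01_003.csv), and flags raw file names in an ELN entry that break the convention, point to another project, or carry a generation date different from the entry date. The pattern and function name are illustrative; substitute the convention defined in your own SOP.

```python
import re
from datetime import date, datetime

# Hypothetical convention: <project>_<YYYYMMDD>_<instrument>_<run>.<ext>
# e.g. PX01_20240110_PR01_003.csv; replace with the pattern in your SOP.
FILENAME_PATTERN = re.compile(
    r"^(?P<project>[A-Z]{2}\d{2})_(?P<date>\d{8})_"
    r"(?P<instrument>[A-Z]{2}\d{2})_(?P<run>\d{3})\.[a-z0-9]+$"
)


def check_eln_entry(entry_date: date, project_id: str, raw_file_names):
    """Return problems found in one ELN entry's linked raw files."""
    problems = []
    for name in raw_file_names:
        match = FILENAME_PATTERN.match(name)
        if match is None:
            problems.append(f"{name}: does not follow naming convention")
            continue
        if match["project"] != project_id:
            problems.append(f"{name}: linked to wrong project {match['project']}")
        try:
            generated = datetime.strptime(match["date"], "%Y%m%d").date()
        except ValueError:
            problems.append(f"{name}: invalid date in file name")
            continue
        # Daily Logging: the entry should be made on the day the data were generated
        if generated != entry_date:
            problems.append(f"{name}: generated {generated}, logged {entry_date}")
    return problems
```

A check like this suits the scheduled random audits: it narrows manual review to the entries whose file links or dates look inconsistent.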

Visualizing the Pathways to Consequence and Integrity

Diagram 1: Pathway from Data Ethics Failure to Systemic Consequences

Diagram 2: Data Integrity Workflow for Laboratory Research

The Scientist's Toolkit: Essential Research Reagent Solutions for Data Integrity

Table 3: Key Tools for Ethical Data Management

| Tool Category | Specific Item/Software | Function in Promoting Data Ethics |
|---|---|---|
| Electronic Lab Notebooks (ELN) | LabArchives, Benchling, RSpace | Provides timestamped, immutable records; links data files to protocols; enables easy audit and sharing. |
| Data Analysis & Statistics | R with knitr/rmarkdown, Jupyter Notebooks, SPSS | Scripted, reproducible analyses generate audit trails. Prevents post-hoc manipulation of analytical choices. |
| Image Acquisition & Analysis | MetaMorph, ImageJ/Fiji with macro recording, ZEN (Zeiss) | Automated image capture reduces bias. Macro recording ensures uniform processing. |
| Raw Data Storage | Institutional SAN/NAS with versioning, LabFolder Drive, OneDrive/Box (configured) | Secure, centralized, and backed-up storage for original instrument files, preventing loss or alteration. |
| Public Data Repositories | GEO (genomics), PRIDE (proteomics), Figshare (general), OSF (projects) | FAIR-compliant archiving fulfills funder mandates, enables replication, and builds public trust. |
| Pre-registration Platforms | Open Science Framework (OSF), ClinicalTrials.gov, AsPredicted | Time-stamps research plans, distinguishing confirmatory from exploratory work. |
| Reference & Collaboration | Zotero, Mendeley, Overleaf | Manages literature, supports proper attribution, and helps prevent inadvertent plagiarism in collaborative writing. |

In the domain of laboratory research and drug development, data is the fundamental currency. Its management directly impacts scientific validity, public trust, regulatory approval, and patient safety. This technical guide delineates four core ethical frameworks—Integrity, Transparency, Accountability, and Stewardship—positioning them as operational necessities within the data lifecycle. Adherence to these frameworks is not merely aspirational but a critical component of robust, reproducible, and socially responsible science.

Ethical Frameworks: Technical Definitions and Laboratory Implementation

Integrity

- Definition: Upholding honesty, consistency, and accuracy in all aspects of data generation, recording, analysis, and reporting. It is the foundation of scientific validity.
- Laboratory Implementation: This requires rigorous protocols to prevent fabrication, falsification, and plagiarism (FFP), and to minimize unconscious bias.
- Key Experimental Protocol: Blind Data Analysis
  - Pre-processing Scripting: All raw data (e.g., from plate readers, sequencers, chromatographs) is processed using a pre-written, version-controlled script (e.g., in Python/R) that applies standard calibration and normalization.
  - Data Blinding: The script outputs a blinded dataset in which experimental group labels (e.g., Control, Drug A, Drug B) are replaced by random, non-informative codes (e.g., Group X, Y, Z).
  - Blinded Analysis: The researcher performs the primary statistical analyses and generates preliminary figures using only the coded data.
  - Unblinding: The code key is applied only after the analytical pipeline is finalized on the blinded data, preventing bias in the choice of statistical tests or data transformation methods.
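The blinding and unblinding steps can be expressed in a few lines. In this sketch (function names hypothetical), group labels are mapped to randomly assigned letter codes, the key is returned separately so it can be stored apart from the analyst's working data, and `unblind` is applied only once the analysis is frozen. Letter codes such as "Group A" stand in for the Group X, Y, Z examples above.

```python
import random
import string


def blind_groups(records, group_key="group", rng=None):
    """Replace group labels with randomly assigned, non-informative codes.

    Returns (blinded_records, key), where key maps code -> true label.
    """
    rng = rng or random.SystemRandom()
    labels = sorted({rec[group_key] for rec in records})
    if len(labels) > len(string.ascii_uppercase):
        raise ValueError("too many groups for single-letter codes")
    codes = [f"Group {letter}" for letter in string.ascii_uppercase[: len(labels)]]
    rng.shuffle(codes)  # random assignment: code order reveals nothing about labels
    label_to_code = dict(zip(labels, codes))
    # Copy each record so the original, labelled data stay untouched
    blinded = [{**rec, group_key: label_to_code[rec[group_key]]} for rec in records]
    key = {code: label for label, code in label_to_code.items()}
    return blinded, key


def unblind(results_by_code, key):
    """Relabel results with true group names once the pipeline is frozen."""
    return {key[code]: value for code, value in results_by_code.items()}
```

Passing a seeded `random.Random` instance makes the assignment reproducible for audit; the default `SystemRandom` makes it harder to guess.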

Transparency

- Definition: Providing clear, accessible, and complete disclosure of methods, materials, data, and analytical processes to enable evaluation and replication.

- Laboratory Implementation: This moves beyond final publication to encompass the entire research workflow via FAIR (Findable, Accessible, Interoperable, Reusable) data principles and detailed reporting.

Key Quantitative Data on Reporting Gaps:

Table 1: Prevalence of Inadequate Research Reporting in Life Sciences

| Reporting Deficiency | Estimated Prevalence in Published Papers | Impact on Replicability |
|---|---|---|
| Incomplete Material/Reagent Identification | 30-40% (e.g., missing catalog #, strain) | High: precludes exact replication. |
| Insufficient Statistical Methods Description | 50-60% | High: undermines analytical validity. |
| Unavailable Raw Data | ~70% (for cell biology studies) | Critical: precludes independent re-analysis. |
| Protocol Unavailable | ~65% | High: introduces procedural ambiguity. |

Key Experimental Protocol: Electronic Lab Notebook (ELN) for FAIR Data Capture

- Structured Entry Templates: Use ELN templates that mandate fields for critical information: reagent lot numbers, instrument calibration dates, software version, and deviation logs.

- Persistent Identifier Assignment: Upon experiment initiation, assign a unique, persistent Digital Object Identifier (DOI) to the project. All data files, protocols, and analyses are linked to this DOI.

- Machine-Readable Metadata: Auto-generate metadata files (e.g., in JSON-LD) describing the experiment's structure, variables, and units upon data export.

- Repository Deposition: Deposit finalized datasets, with metadata, in a public, trusted repository (e.g., Zenodo, GEO, PRIDE) at the time of manuscript submission, linking to the manuscript's preprint or publication ID.
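For the Machine-Readable Metadata step, a minimal JSON-LD export might use the schema.org Dataset type, with `variableMeasured` entries of type PropertyValue carrying each variable's name and unit. The choice of schema.org vocabulary, the fields included, and the function name are assumptions for illustration; community schemas such as ISA or domain ontologies may suit a given repository better.

```python
import json
from datetime import date


def experiment_jsonld(name, identifier, variables, technique, created: date):
    """Return a schema.org Dataset description as a JSON-LD string.

    variables: iterable of (variable_name, unit) pairs for the exported columns.
    """
    doc = {
        "@context": "https://schema.org/",
        "@type": "Dataset",
        "name": name,
        "identifier": identifier,  # e.g. the project's DOI URL
        "dateCreated": created.isoformat(),
        "measurementTechnique": technique,
        "variableMeasured": [
            {"@type": "PropertyValue", "name": var_name, "unitText": unit}
            for var_name, unit in variables
        ],
    }
    return json.dumps(doc, indent=2)
```

Generating this file from the ELN template fields at export time keeps the metadata consistent with what was actually recorded.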

Accountability

- Definition: Defining and accepting responsibility for data management actions, decisions, and outcomes throughout the data lifecycle.
- Laboratory Implementation: Clear role delineation and audit trails are essential. Accountability ensures that when errors are discovered, their source and correction pathway are clear.
- Experimental Protocol: Implementing a Data Audit Trail
  - Role-Based Access Control (RBAC): In the Laboratory Information Management System (LIMS) or data storage system, assign permissions (view, edit, approve) based on user roles (PI, postdoc, technician).
  - Immutable Logging: All actions on primary data files (access, modification, deletion) are automatically logged with a timestamp, user ID, and reason for change. Logs are stored in an immutable system (e.g., write-once-read-many drive).
  - Version Control for Analysis: Use Git for all analysis code and Markdown documents. Each commit requires a descriptive message of the change. The final analysis is linked to a specific, tagged commit hash.
  - Regular Audit Schedule: The lab's data manager or a designated senior researcher conducts quarterly audits on a randomly selected 5% of completed experiments, verifying the chain of custody from raw instrument file to reported result against the ELN and audit logs.
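The RBAC and Immutable Logging steps can be illustrated with a hash-chained, append-only log: each entry stores the hash of the previous entry, so editing, deleting, or reordering an earlier entry breaks verification. This is a sketch of tamper evidence only. The role names, permission map, and class name are hypothetical, and true immutability still depends on the WORM storage described above.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role-to-permission map; a real LIMS defines its own roles.
ROLE_PERMISSIONS = {
    "technician": {"view", "edit"},
    "postdoc": {"view", "edit"},
    "pi": {"view", "edit", "approve"},
}
GENESIS_HASH = "0" * 64


class AuditLog:
    """Append-only, hash-chained log of actions on primary data files."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user_id, role, action, target, reason, now=None):
        # RBAC: refuse actions the role is not permitted to perform
        if action not in ROLE_PERMISSIONS.get(role, set()):
            raise PermissionError(f"role {role!r} may not {action} {target}")
        entry = {
            "timestamp": (now or datetime.now(timezone.utc)).isoformat(),
            "user_id": user_id,
            "role": role,
            "action": action,
            "target": target,
            "reason": reason,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Detect edits, reordering, and deletions inside the chain."""
        prev = GENESIS_HASH
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

A chain cannot by itself detect removal of its newest entries, so the latest hash should also be copied to separate write-once storage, where the quarterly audit can compare against it.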

Stewardship

- Definition: The responsible management and curation of data as a valuable, long-term asset with obligations to the scientific community and society. It encompasses preservation, security, and ethical reuse.
- Laboratory Implementation: Stewardship plans for data's entire lifespan, from creation to eventual archiving or ethical disposal, considering confidentiality and future utility.
- Experimental Protocol: Developing a Data Stewardship Plan
  - Lifecycle Mapping: At project inception, document the anticipated data types, volumes, and sensitivity (e.g., contains human genomic data).
  - Retention Policy Alignment: Define retention periods (e.g., raw data: 10 years post-publication; lab notebooks: permanently) based on funder (e.g., NIH), institutional, and regulatory (e.g., FDA 21 CFR Part 58) requirements.
  - Security & Backup: Classify data based on risk. Implement encryption for data at rest and in transit. Establish a 3-2-1 backup rule: 3 total copies, on 2 different media, with 1 copy off-site/cloud.
  - De-identification for Sharing: For human subjects data, apply a formal de-identification protocol (e.g., HIPAA Safe Harbor method) and validate re-identification risk before public deposition. Document the process.
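Two parts of the stewardship plan reduce to simple checks: whether a set of backup copies meets the 3-2-1 rule, and when a retention period ends. The sketch below assumes each copy (including the primary) is described by a media type and an off-site flag, and that the governing retention period is the longest applicable requirement; the class and function names are illustrative.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class BackupCopy:
    location: str  # free-text description, e.g. "lab NAS"
    media: str     # media type, e.g. "disk", "tape", "object storage"
    offsite: bool  # True if stored away from the primary site


def satisfies_3_2_1(copies):
    """At least 3 copies, on at least 2 media types, with at least 1 off-site."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


def governing_retention_years(requirements):
    """The longest applicable requirement (funder, institution, regulator) governs."""
    return max(requirements.values())


def retention_end(publication_date: date, years: int) -> date:
    """Earliest date raw data could be reviewed for disposal."""
    try:
        return publication_date.replace(year=publication_date.year + years)
    except ValueError:  # 29 February landing in a non-leap year
        return publication_date.replace(year=publication_date.year + years, day=28)
```

Note that two copies on separate disk servers still count as one media type under this reading of the rule.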

Interrelationship and Workflow Visualization

The four frameworks operate synergistically throughout the research data lifecycle. The following diagram illustrates their logical relationships and primary points of application.

Diagram 1: Ethical Frameworks in the Data Lifecycle

The Scientist's Toolkit: Essential Research Reagent Solutions for Ethical Data Management

Table 2: Key Tools for Implementing Ethical Data Frameworks

| Tool Category | Specific Solution/Reagent | Primary Function in Ethical Management |
|---|---|---|
| Data Capture & Recording | Electronic Lab Notebook (ELN) (e.g., LabArchives, Benchling) | Ensures Integrity via tamper-evident logs and Transparency via structured, searchable records. |
| Sample & Data Tracking | Laboratory Information Management System (LIMS) (e.g., Quartzy, SampleManager) | Enforces Accountability via chain-of-custody tracking and Stewardship via sample lifecycle management. |
| Data Analysis & Versioning | Version Control System (e.g., Git, with GitHub/GitLab) | Guarantees Transparency and Accountability by tracking all changes to analysis code, enabling full audit trails. |
| Secure Data Storage | Institutional/Trusted Cloud Storage with RBAC (e.g., Box, OneDrive for Enterprise) | Fundamental for Stewardship (secure backup) and Accountability (controlled access and permissions). |
| Data Repository | Public, Trusted Repositories (e.g., Zenodo for general data, GEO for genomics, PRIDE for proteomics) | Primary tool for Transparency and long-term Stewardship, making data FAIR for the community. |
| Metadata Standards | Community-Specific Schemas (e.g., ISA-Tab, MINSEQE) | Enables Transparency and Stewardship by providing structured, machine-readable context for data, ensuring interoperability and future reuse. |

Integrity, Transparency, Accountability, and Stewardship are interdependent, technical frameworks essential for modern laboratory data management. Their systematic implementation through protocols like blind analysis, ELN use, audit trails, and stewardship plans transforms ethical principles into concrete, auditable practices. For researchers and drug developers, this is not just about compliance; it is the most effective strategy to bolster data reliability, accelerate discovery through shared resources, and maintain the societal license to conduct research. The tools and protocols outlined herein provide an actionable roadmap for integrating these frameworks into the daily fabric of laboratory science.

Understanding FAIR and CARE Principles for Scientific Data

Within the broader thesis on Ethical Guidelines for Data Management in Laboratory Settings Research, the adoption of structured data principles is paramount. The FAIR (Findable, Accessible, Interoperable, Reusable) and CARE (Collective Benefit, Authority to Control, Responsibility, Ethics) principles provide complementary frameworks for managing scientific data, particularly in sensitive fields like drug development. FAIR focuses on data mechanics to enhance discovery and reuse by machines and humans, while CARE centers on people and ethical governance, especially concerning Indigenous data sovereignty. This whitepaper provides an in-depth technical guide to implementing both sets of principles in a research context.

The FAIR Principles: A Technical Deep Dive

FAIR principles aim to make data maximally useful for both automated computational systems and human researchers.

Table 1: The Four Pillars of FAIR with Technical Requirements

| Pillar | Core Objective | Key Technical & Metadata Requirements |
|---|---|---|
| Findable | Data and metadata are easily located by humans and computers. | Persistent Unique Identifiers (e.g., DOI, ARK), rich metadata, indexed in a searchable resource. |
| Accessible | Data is retrievable using standard, open protocols. | Metadata remains accessible even if data is not; uses standardized, open, free communication protocols (e.g., HTTPS). |
| Interoperable | Data integrates with other data and applications. | Uses formal, accessible, shared, and broadly applicable languages (e.g., RDF, OWL) and FAIR-compliant vocabularies/ontologies. |
| Reusable | Data is sufficiently well-described to be replicated and combined. | Metadata includes detailed provenance (how data was generated) and meets domain-relevant community standards for data and metadata. |

Experimental Protocol: Implementing FAIR in a Genomic Sequencing Workflow

Objective: To generate, process, and publish genomic sequencing data according to FAIR principles.

Methodology:

- Sample Preparation & Data Generation:
  - Extract DNA/RNA using kits (see Scientist's Toolkit). Perform sequencing on a platform (e.g., Illumina NovaSeq).
  - Assign a unique, persistent internal lab ID (e.g., LabID:ProjectX_Sample123) linked to all raw data files.
- Data Processing & Metadata Creation:
  - Process raw reads (*.fastq) through a standardized pipeline (e.g., nf-core/rnaseq). Document all software versions and parameters in a README.yaml file.
  - Generate comprehensive metadata in ISA-Tab format or using the MIxS standards. Include experimental (sequencer, protocol), sample (organism, tissue), and data file descriptors.
- Repository Submission & FAIRification:
  - Deposit raw and processed data files, along with the structured metadata file, into a discipline-specific repository (e.g., European Nucleotide Archive, BioStudies).
  - The repository will assign a global persistent identifier (e.g., ENA accession: PRJEBXXXXX / DOI).
  - Link the dataset to related publications via the publication's DOI.
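Before submission, each per-sample metadata record can be checked for the descriptors listed above and for intact links between lab IDs and raw files. The required field names below are hypothetical simplifications that mirror the protocol's experimental, sample, and data-file descriptors; they are not the official MIxS or ISA-Tab field names, which a real workflow should use instead.

```python
import re

# Hypothetical required fields mirroring the descriptors listed above.
# These are NOT official MIxS or ISA-Tab field names.
REQUIRED_FIELDS = {
    "lab_id", "organism", "tissue",            # sample descriptors
    "sequencer", "protocol",                   # experimental descriptors
    "pipeline", "pipeline_version", "files",   # processing and data files
}
# Matches IDs shaped like LabID:ProjectX_Sample123
LAB_ID_PATTERN = re.compile(r"^LabID:[A-Za-z0-9]+_Sample\d+$")
RAW_SUFFIXES = (".fastq", ".fastq.gz")


def validate_sample_record(record):
    """Return problems that should block repository submission."""
    problems = [f"missing field: {name}" for name in sorted(REQUIRED_FIELDS - set(record))]
    lab_id = record.get("lab_id", "")
    if lab_id and not LAB_ID_PATTERN.match(lab_id):
        problems.append(f"lab_id not in expected format: {lab_id}")
    for path in record.get("files", []):
        if not path.endswith(RAW_SUFFIXES):
            problems.append(f"unexpected raw file type: {path}")
    return problems


def find_shared_files(records):
    """Flag raw files linked to more than one lab ID (ambiguous provenance)."""
    owner = {}
    conflicts = set()
    for rec in records:
        for path in rec.get("files", []):
            first = owner.setdefault(path, rec.get("lab_id"))
            if first != rec.get("lab_id"):
                conflicts.add(path)
    return sorted(conflicts)
```

Repositories run their own validators at submission; a local check like this simply catches missing provenance earlier, while it is still easy to fix.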

Diagram 1: FAIR Data Generation and Publication Workflow

The CARE Principles: Ethical Governance for Data

CARE principles shift the focus from data alone to data's impact on people and communities, emphasizing Indigenous rights and ethical stewardship.

Table 2: The CARE Principles for Indigenous Data Governance

| Principle | Core Tenet | Key Actions for Researchers |
|---|---|---|
| Collective Benefit | Data ecosystems must be designed to enable equitable, sustainable outcomes. | Support data for governance, innovation, and self-determination. Ensure data fosters well-being and future use. |
| Authority to Control | Indigenous peoples' rights and interests in Indigenous data must be recognized. | Acknowledge rights to govern data collection, ownership, and application. Co-develop protocols for data access and use. |
| Responsibility | Those working with data have a duty to share how data is used to support Indigenous self-determination. | Establish relationships for positive data outcomes. Report on data use and impact. Develop ethical data skills. |
| Ethics | Indigenous rights and well-being should be the primary concern at all stages of the data life cycle. | Minimize harm, maximize justice. Ensure ethical review includes Indigenous worldviews. Assess societal and environmental impacts. |

Protocol: Engaging CARE Principles in Community-Based Research

Objective: To ethically collect and manage health survey data in partnership with an Indigenous community.

Methodology:

- Pre-Research Engagement & Co-Design:
  - Establish a formal research agreement with governing community bodies (e.g., Tribal Council). This precedes any institutional ethics board review.
  - Co-design the research question, survey instrument, and data management plan (DMP). The DMP must explicitly address data sovereignty, ownership, access controls, and future use limitations.
- Data Collection & Informed Consent:
  - Conduct consent processes using community-preferred languages and formats, explicitly detailing data flow, potential users, and community oversight mechanisms.
  - Collect data using tools that allow for immediate localization and annotation with community-agreed tags (e.g., "For Community Health Use Only").
- Data Stewardship & Ongoing Responsibility:
  - Store data in a controlled-access environment as specified in the agreement. Community representatives hold access veto or gatekeeping roles.
  - Provide regular, understandable reports back to the community. All secondary use proposals require community review and approval.
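Agreement-defined access rules can be encoded so that they are applied consistently. The sketch below is one hypothetical encoding of the protocol above: access is denied while community representatives exercise their veto, limited to users named in the research agreement, restricted by the "For Community Health Use Only" tag, and, for other purposes, conditional on recorded community approval. The class names, purpose strings, and rule order are assumptions; the actual rules belong in the co-designed agreement and would need to be translated from it.

```python
from dataclasses import dataclass, field

COMMUNITY_USE_TAG = "For Community Health Use Only"


@dataclass
class DataRequest:
    requester: str
    purpose: str                      # e.g. "community health" or "secondary research"
    community_approved: bool = False  # recorded approval from community review


@dataclass
class GovernedDataset:
    tags: set = field(default_factory=set)            # community-agreed labels
    approved_users: set = field(default_factory=set)  # named in the research agreement
    community_veto: bool = False                      # representatives have paused access


def access_decision(dataset, request):
    """Return (allowed, reason) under one hypothetical reading of the agreement."""
    if dataset.community_veto:
        return False, "community representatives have paused access"
    if request.requester not in dataset.approved_users:
        return False, "requester not named in the research agreement"
    if COMMUNITY_USE_TAG in dataset.tags and request.purpose != "community health":
        return False, "tag restricts use to community health purposes"
    if request.purpose != "community health" and not request.community_approved:
        return False, "secondary use requires community review and approval"
    return True, "permitted under the agreement"
```

Returning a reason with every decision supports the Responsibility principle: denials and grants can be reported back to the community in plain language.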

Diagram 2: The Interconnected CARE Principles Cycle

Integrating FAIR and CARE in Laboratory Research

The synergistic application of FAIR and CARE creates ethical, robust, and reusable data ecosystems. FAIR ensures data is technically robust, while CARE ensures the process is socially and ethically robust.

Table 3: Integrated FAIR & CARE Implementation Framework

| Research Phase | FAIR-Aligned Action | CARE-Aligned Action | Integrated Outcome |
|---|---|---|---|
| Project Design | Plan data formats, metadata schemas, and target repositories. | Engage rightsholders/community partners. Co-design data protocols and ownership model. | An ethically grounded, technically sound DMP. |
| Data Collection | Use standardized instruments. Assign unique IDs. Record provenance. | Obtain contextual, granular consent. Apply community-agreed labels/tags to data. | Data is rich in both technical and cultural provenance. |
| Data Sharing | Deposit in repository with a PID. Use open, interoperable formats. | Implement tiered/controlled access per agreement. Respect moratoriums on sharing. | Data is accessible for approved purposes to approved users. |
| Long-term Stewardship | Ensure metadata remains accessible. Archive software/code. | Establish community-led governance for future use. Plan for data return/deletion. | Data lifespan is managed respecting both utility and rights. |

Table 4: Key Research Reagent Solutions for Data Generation and Management

| Item / Solution | Function & Relevance to FAIR/CARE |
|---|---|
| Standardized Assay Kits (e.g., Qiagen DNeasy, Illumina Nextera) | Ensure reproducibility (FAIR-R). Batch and lot numbers are critical provenance metadata. |
| Electronic Lab Notebook (ELN) (e.g., LabArchives, Benchling) | Digitally captures experimental context and provenance, forming the core of reusable metadata. |
| Metadata Schema Tools (e.g., ISA framework, OMERO) | Provide structured templates to create interoperable (FAIR-I) metadata for diverse data types. |
| Persistent ID Services (e.g., DataCite DOI, ePIC for handles) | Assign globally unique, permanent identifiers to datasets, making them findable (FAIR-F). |
| Controlled Vocabulary Services (e.g., EDAM Bioimaging, SNOMED CT) | Standardized terms enhance data interoperability (FAIR-I) and precise annotation. |
| Ethical Review & Engagement Protocols (e.g., OCAP principles, UNDRIP) | Frameworks to operationalize CARE principles, ensuring Authority and Responsibility. |
| Data Repository with Access Controls (e.g., ENA, Dryad, Dataverse) | Enables data accessibility (FAIR-A) while allowing for embargoes and permissions (CARE). |

The Role of Data Ethics in Reproducibility and Scientific Progress

1. Introduction

Within the framework of a broader thesis on ethical guidelines for data management in laboratory settings, this whitepaper examines the foundational role of data ethics in ensuring reproducibility and fostering genuine scientific progress. The reproducibility crisis, particularly acute in biomedical and drug development research, is not merely a technical failure but often an ethical one. Adherence to data ethics principles—encompassing integrity, transparency, fairness, and stewardship—directly mitigates reproducibility challenges by governing the entire data lifecycle: from collection and analysis to sharing and publication.

2. The Ethical-Reproducibility Nexus: Quantitative Impact

Recent studies quantify the cost of irreproducibility and the efficacy of ethical data practices. The data below, synthesized from published analyses, highlight the scale of the problem and the measurable benefits of ethical interventions.

Table 1: The Cost and Prevalence of Irreproducibility in Biomedical Research

| Metric | Estimated Value / Prevalence | Source Context |

|---|---|---|

| Percentage of researchers unable to reproduce others' work | ~70% | Cross-disciplinary survey meta-analysis |

| Percentage of researchers unable to reproduce their own work | >50% | Cross-disciplinary survey meta-analysis |

| Estimated annual cost of preclinical irreproducibility (US) | $28.2 Billion | Focus on basic and translational life sciences |

| Studies with publicly available raw data | < 30% | Analysis of high-impact life science journals |

| Papers with clearly described statistical methods | ~50% | Audit of oncology literature |

Table 2: Impact of Ethical Data Management Practices on Research Outcomes

| Ethical Practice | Correlation with Key Outcome | Measured Effect / Statistic |

|---|---|---|

| Public Data & Code Sharing | Increased citation rate | +25% to 50% citation advantage |

| Pre-registration of Protocols | Reduction in reporting bias | Effect sizes closer to null by ~0.2 SD |

| Use of Electronic Lab Notebooks (ELNs) | Audit trail completeness | ~90% reduction in data entry ambiguity |

| Adherence to FAIR Principles | Successful data reuse | 4x increase in independent validation studies |

3. Experimental Protocols for Ethical Data Validation

To operationalize data ethics, laboratories must implement concrete, auditable protocols. The following methodologies are essential for ensuring data integrity and enabling reproducibility.

Protocol 1: Blinded Image Analysis Workflow for Quantification

- Objective: To eliminate confirmation bias in quantitative image analysis (e.g., microscopy, Western blots, histology).

- Materials: Raw image files, image analysis software (e.g., ImageJ/Fiji, CellProfiler), randomization script.

- Procedure:

- Anonymization: Rename all raw image files using a random alphanumeric code generated by a script. Maintain a separate, encrypted key file.

- Blinded Analysis: The analyst receives only anonymized files. All analysis parameters (thresholds, regions of interest) are defined a priori in a written protocol and applied uniformly.

- Data Export: Quantitative results are exported with anonymized identifiers only.

- Unblinding: Results are linked to experimental conditions using the secure key file only after the final dataset is locked.

- Ethical Rationale: Prevents subjective manipulation of analysis to fit expected or desired outcomes, ensuring fairness and integrity in reporting.
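The anonymization and key-file steps above can be sketched in Python with only the standard library. This is a minimal illustration under stated assumptions, not a validated tool: the function name `blind_files`, the 8-character code length, the code alphabet, and the CSV key format are all hypothetical. The sketch copies files rather than renaming them so the raw originals stay untouched, and the key file it writes is plain text, so it must be encrypted or moved to restricted storage before the analyst receives the blinded folder.

```python
import csv
import secrets
import shutil
from pathlib import Path

# Hypothetical code alphabet: uppercase letters and digits without look-alikes (0/O, 1/I).
ALPHABET = "ABCDEFGHJKLMNPQRSTUVWXYZ23456789"


def blind_files(src_dir: Path, out_dir: Path, key_path: Path, n_chars: int = 8) -> dict:
    """Copy each raw file into out_dir under a random code and record the mapping.

    Returns {blinded_name: original_name}. Files in src_dir are not modified.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    mapping = {}
    for path in sorted(p for p in src_dir.iterdir() if p.is_file()):
        while True:  # draw codes until an unused one appears (collisions are very unlikely)
            code = "".join(secrets.choice(ALPHABET) for _ in range(n_chars))
            blinded_name = f"{code}{path.suffix}"
            if blinded_name not in mapping:
                break
        shutil.copy2(path, out_dir / blinded_name)  # copies content and timestamps
        mapping[blinded_name] = path.name
    # Key file: the only link between codes and conditions. Encrypt it after writing.
    with key_path.open("w", newline="") as fh:
        writer = csv.writer(fh)
        writer.writerow(["blinded_name", "original_name"])
        writer.writerows(sorted(mapping.items()))
    return mapping
```

The analyst then works only from `out_dir`; whoever holds the key file performs unblinding once the dataset is locked.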

Protocol 2: Computational Environment Reproducibility Pipeline

- Objective: To guarantee that computational analyses (bioinformatics, statistical modeling) can be exactly reproduced.

- Materials: Scripts (R/Python), raw data, containerization software (Docker/Singularity), version control (Git).

- Procedure:

- Version Control: All analysis code is managed in a Git repository, with meaningful commit messages documenting changes.

- Dependency Management: Explicitly list all package dependencies with version numbers (e.g., requirements.txt, sessionInfo()).

- Containerization: Create a Dockerfile that defines the exact operating system, software versions, and environment variables.

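- Build & Archive: Build a container image, tag it with a unique, versioned identifier, and archive it in a public registry (e.g., Docker Hub, BioContainers). For a citable DOI, deposit the Dockerfile or an exported image in a DOI-issuing archive such as Zenodo.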

- Ethical Rationale: Fulfills the ethical obligation of transparency and stewardship by providing peers with the exact tools to verify results.
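For Python-based analyses, the dependency-recording step can be partly automated. The sketch below assumes Python 3.8+ (for `importlib.metadata`) and captures the interpreter version, operating system, and installed package versions, roughly a Python counterpart to R's `sessionInfo()`, then writes pinned `name==version` lines. The helper names are hypothetical, and the output complements rather than replaces a Dockerfile: it records versions but does not rebuild the environment.

```python
import json
import platform
from importlib import metadata


def environment_manifest() -> dict:
    """Collect interpreter, OS, and installed-package versions for archiving with results."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            packages[name] = dist.version
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "packages": dict(sorted(packages.items(), key=lambda kv: kv[0].lower())),
    }


def write_requirements(manifest: dict, path: str) -> None:
    """Write pinned 'name==version' lines in the format `pip install -r` accepts."""
    lines = [f"{name}=={version}" for name, version in manifest["packages"].items()]
    with open(path, "w") as fh:
        fh.write("\n".join(lines) + "\n")


def save_manifest(manifest: dict, path: str) -> None:
    """Store the full manifest as JSON next to the analysis outputs."""
    with open(path, "w") as fh:
        json.dump(manifest, fh, indent=2)
```

Committing both files to the Git repository alongside the analysis scripts ties each result to the environment that produced it.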

4. Visualizing the Ethical Data Lifecycle & Failure Points

The following diagrams, generated with Graphviz, map the ideal ethical workflow and common points of ethical failure that compromise reproducibility.

Diagram 1: Ethical Data Lifecycle for Reproducible Science

Diagram 2: Pathway to Irreproducibility via Ethical Failures

5. The Scientist's Toolkit: Essential Research Reagent Solutions for Ethical Data Management

Table 3: Key Tools for Implementing Ethical Data Practices

| Tool Category | Specific Example(s) | Primary Function in Ethical Data Management |

|---|---|---|

| Electronic Lab Notebook (ELN) | LabArchives, Benchling, RSpace | Provides a secure, timestamped audit trail for all experimental records, ensuring data integrity and provenance. |

| Data Management Platform | OpenBIS, Labguru, DNAnexus | Centralizes and structures raw data, metadata, and analytical results, enabling FAIR (Findable, Accessible, Interoperable, Reusable) principles. |

| Version Control System | Git (GitHub, GitLab, Bitbucket) | Tracks all changes to code and scripts, allowing full transparency and reproducibility of computational analyses. |

| Containerization Software | Docker, Singularity | Encapsulates the complete computational environment (OS, code, dependencies), guaranteeing identical re-execution of analyses. |

| Data & Code Repositories | Zenodo, Figshare, OSF; GitHub, GitLab | Provide persistent, citable archives for shared datasets and code, fulfilling the ethical obligation of transparency and stewardship. |

| Metadata Standards | ISA-Tab, MIAME, AIRR | Structured frameworks for annotating data with critical experimental context, making data interpretable and reusable by others. |

6. Conclusion

Scientific progress is inextricably linked to the reproducibility of research findings. This whitepaper argues that reproducibility is not solely a statistical or methodological concern but a core ethical imperative. By adopting the outlined protocols, workflow visualizations, and tools within the proposed ethical framework for laboratory data management, researchers and drug development professionals can directly address the reproducibility crisis. Upholding rigorous data ethics through transparency, sound methodology, and responsible sharing is the most effective strategy for building a self-correcting, efficient, and trustworthy scientific enterprise.

Within the framework of ethical guidelines for data management in laboratory research, compliance with legal and regulatory standards is non-negotiable. For researchers, scientists, and drug development professionals, navigating the intersection of data privacy, security, and integrity is paramount. This whitepaper provides a technical guide to three pivotal regulations: the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and 21 CFR Part 11. Their implications dictate how personal and health data is collected, processed, and stored in laboratory and clinical research settings, ensuring ethical stewardship from bench to bedside.

Core Regulatory Analysis

General Data Protection Regulation (GDPR)

The GDPR (Regulation (EU) 2016/679) is a comprehensive data protection law governing the processing of personal data. It applies to controllers and processors established in the EU and also to those located outside the EU when they offer goods or services to, or monitor the behaviour of, individuals in the EU.

Key Principles for Laboratory Research:

- Lawful Basis for Processing: For research, this often includes 'public interest' or explicit 'consent,' which must be freely given, specific, informed, and unambiguous.

- Data Minimization: Only data adequate and relevant to the specific research purpose may be collected.

- Storage Limitation: Personal data must be stored in an identifiable form only as long as necessary for the research purpose. Pseudonymization is strongly encouraged.

- Integrity and Confidentiality: Requires implementing appropriate technical (e.g., encryption) and organizational measures (e.g., access controls) to ensure security.

- Data Subject Rights: Includes the right to access, rectification, erasure ("right to be forgotten"), and data portability, which must be facilitated unless exemptions for scientific research apply.

Health Insurance Portability and Accountability Act (HIPAA)

HIPAA's Privacy and Security Rules set national standards for the protection of individually identifiable health information (Protected Health Information - PHI) in the United States.

Key Rules for Research:

- Privacy Rule: Governs the use and disclosure of PHI. For research, PHI may be used/disclosed with individual authorization, or under limited circumstances without authorization (e.g., with a waiver from an Institutional Review Board or Privacy Board).

- Security Rule: Requires covered entities to implement safeguards to ensure the confidentiality, integrity, and availability of electronic PHI (ePHI). It specifies administrative, physical, and technical safeguards.

- Minimum Necessary Standard: Use or disclosure of PHI must be limited to the minimum necessary to accomplish the intended purpose.

21 CFR Part 11

This U.S. FDA regulation defines criteria under which electronic records and electronic signatures are considered trustworthy, reliable, and equivalent to paper records.

Core Requirements for Laboratory Systems:

- Validation: Systems must be validated to ensure accuracy, reliability, consistent intended performance, and the ability to discern invalid or altered records.

- Audit Trails: Secure, computer-generated, time-stamped audit trails to independently record operator actions for creation, modification, or deletion of electronic records.

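- System Security: Operational system checks enforce the permitted sequencing of steps and events; authority checks ensure that only authorized individuals can use the system, sign records, or alter them; device checks verify the validity of the source of data input.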

- Electronic Signatures: Must be unique to one individual and administered/executed to ensure they cannot be reused by, or reassigned to, anyone else.
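The audit-trail requirement can be illustrated with a hash-chained, append-only log. This is a sketch of one tamper-evidence technique, not something Part 11 prescribes, and it does not by itself make a system compliant: validation, access control, and secure storage are still required. The class and field names are assumptions. Each entry stores the SHA-256 hash of its predecessor and keeps the prior value of a modified record, so editing or reordering an earlier entry breaks the chain; detecting deletion of the newest entry would additionally require storing the latest hash where the log's writer cannot change it.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditTrail:
    """Append-only, hash-chained log (illustrative; not a validated Part 11 system)."""

    def __init__(self):
        self.entries = []

    def record(self, user_id, action, record_id, old_value=None, new_value=None, reason=""):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),  # computer-generated, UTC
            "user_id": user_id,
            "action": action,  # e.g. "create", "modify", "delete"
            "record_id": record_id,
            "old_value": old_value,  # prior value is retained, never overwritten
            "new_value": new_value,
            "reason": reason,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry):
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True, default=str).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def verify(self):
        """False if any entry's content or chain position no longer matches its hash."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```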

Quantitative Data Comparison

Table 1: Core Scope and Applicability

| Regulation | Jurisdiction | Primary Scope | Key Data Type | Enforcement Body |

|---|---|---|---|---|

| GDPR | European Union / EEA | Any entity processing personal data of individuals in the EU | Personal Data (broadly defined) | National Data Protection Authorities (e.g., CNIL in France, AEPD in Spain) |

| HIPAA | United States | Covered Entities (CEs) & Business Associates (BAs) | Protected Health Information (PHI) | U.S. Dept. of Health & Human Services (OCR) |

| 21 CFR Part 11 | United States (FDA-regulated) | FDA-regulated industries (e.g., pharma, biotech, medical devices) | Electronic Records / Signatures | U.S. Food and Drug Administration (FDA) |

Table 2: Key Technical & Organizational Requirements

| Requirement Category | GDPR | HIPAA Security Rule | 21 CFR Part 11 |

|---|---|---|---|

| Risk Assessment | Data Protection Impact Assessment (DPIA) | Required Risk Analysis | Implied via System Validation |

| Access Controls | Required (e.g., role-based) | Required (Unique User Identification, Emergency Access) | Required (Authority Checks) |

| Audit Trails | Recommended for accountability | Required for Information System Activity Review | Explicitly Required (Secure, time-stamped) |

| Data Integrity | Principle of integrity and confidentiality (pseudonymization, encryption) | Mechanism to authenticate ePHI (e.g., checksums) | Explicit requirement for record protection & accuracy |

| Training | Required for personnel handling data | Required for all workforce members | Required for personnel using systems |

Experimental Protocol: Implementing a Cross-Compliant Data Management Workflow

This protocol outlines a methodology for establishing a data pipeline for a clinical biomarker study that aims to comply with GDPR, HIPAA, and 21 CFR Part 11 principles.

1. Protocol Design & Pre-Processing:

- Ethical & Legal Review: Secure IRB/ethics committee approval. For GDPR, define lawful basis (e.g., consent). For HIPAA, obtain patient authorization or an IRB waiver.

- Data Minimization by Design: Pre-define the exact data fields required. Use electronic case report forms (eCRFs) that collect only necessary pseudonymous identifiers and biomarker data.

2. Data Collection & Entry:

- System: Use a validated Electronic Data Capture (EDC) system compliant with 21 CFR Part 11.

- Procedure: Authorized study personnel enter data from source documents. The system enforces:

- Unique login credentials (HIPAA/Part 11).

- Automatic, secure audit trails for all data entries and changes (Part 11).

- Electronic signature for confirming data entry (Part 11).

3. Data Processing & Analysis:

- Pseudonymization: Replace direct identifiers (name, patient ID) with a study code. The key linking code to identity is stored separately per GDPR.

- Secure Analysis Environment: Perform statistical analysis on data within a secure, access-controlled virtual environment. All access is logged (GDPR/HIPAA/Part 11).

- Integrity Checks: Use version control (e.g., Git) for analysis scripts and checksums for datasets to ensure integrity (Part 11/GDPR).
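Pseudonymization can be sketched with a keyed hash (HMAC-SHA-256) from Python's standard library. This swaps in a technique the protocol does not name: rather than a randomly assigned code, each identifier maps deterministically to a code derived from a secret key, and that key is what must be stored separately. If the study needs to re-contact participants, a code-to-identity lookup table still has to be kept under the same separation. The function names, the `STUDY-` prefix, the 12-character truncation, and the normalization rule are all assumptions.

```python
import hashlib
import hmac
import secrets


def make_pseudonymizer(secret_key: bytes, prefix: str = "STUDY"):
    """Return a function mapping a direct identifier to a stable study code.

    Without secret_key the codes cannot be recomputed from identifiers, so the key
    must be stored apart from the pseudonymized dataset.
    """
    def pseudonymize(identifier: str) -> str:
        normalized = identifier.strip().upper()  # assumed rule: ignore case and padding
        digest = hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
        return f"{prefix}-{digest[:12].upper()}"  # keeps 48 bits of the digest
    return pseudonymize


def pseudonymize_records(records, id_field, direct_identifiers, pseudonymize):
    """Replace id_field with a study code and drop the other direct identifiers."""
    cleaned = []
    for rec in records:
        out = {k: v for k, v in rec.items() if k != id_field and k not in direct_identifiers}
        out["study_code"] = pseudonymize(rec[id_field])
        cleaned.append(out)
    return cleaned


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # generate once; keep in a separate, restricted key store
    pseudo = make_pseudonymizer(key)
    rows = [{"patient_id": "MRN-0042", "name": "Jane Doe", "crp_mg_l": 3.1}]
    print(pseudonymize_records(rows, "patient_id", {"name"}, pseudo))
```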

4. Data Storage & Archival:

- Encryption: Encrypt all datasets at rest and in transit using strong encryption (e.g., AES-256) (GDPR/HIPAA).

- Access Logs: Maintain logs of all accesses to the data archive.

- Retention Policy: Archive data for the sponsor-defined retention period in the validated system. Afterward, data is securely deleted according to a predefined schedule.

Visualizing the Compliance Framework

Diagram 1: Regulatory Interaction in Lab Data Management

The Scientist's Toolkit: Key Research Reagent Solutions

Table 3: Essential Tools for Compliant Data Management

| Item / Solution | Primary Function in Compliance Context |

|---|---|

| Validated Electronic Lab Notebook (ELN) | Provides a 21 CFR Part 11-compliant environment for recording experimental data with audit trails, electronic signatures, and version control. |

| IRB/Ethics Committee-Approved Consent Forms | Essential documents to establish lawful basis (GDPR consent) and HIPAA authorization for using personal/health data in research. |

| Pseudonymization/Coding Tool | Software or procedure to replace direct identifiers with a study code, separating identity from data to support GDPR and HIPAA privacy principles. |

| Part 11-Compliant EDC System | A validated electronic data capture system for clinical trials that enforces data integrity, audit trails, and secure data entry per FDA requirements. |

| Enterprise Encryption Software | Tools to encrypt data at rest (on servers) and in transit (over networks), a key safeguard for GDPR integrity/confidentiality and HIPAA security. |

| Identity & Access Management (IAM) System | Manages user credentials, roles, and permissions, enforcing least-privilege access as required by HIPAA, GDPR, and Part 11. |

| Centralized Log Management System | Aggregates and secures audit logs from various systems (ELN, EDC, servers) for monitoring and demonstrating compliance accountability. |

| Standard Operating Procedures (SOPs) | Documented protocols for data handling, security incidents, and system validation, providing the organizational framework for all compliance efforts. |

Adhering to GDPR, HIPAA, and 21 CFR Part 11 is not merely a legal obligation but a concrete manifestation of ethical data management in laboratory research. These regulations provide the structural framework for achieving the ethical principles of respect for persons, beneficence, and justice. By implementing robust, layered technical and organizational controls—such as validated systems, pseudonymization, encryption, and comprehensive audit trails—researchers can ensure data integrity, protect subject privacy, and foster trust in the scientific process, ultimately advancing drug development and biomedical science responsibly.

Implementing Ethical Data Practices: A Step-by-Step Lab Protocol

Within the broader thesis on ethical guidelines for data management in laboratory settings, an Ethical Data Management Plan (DMP) is a prerequisite for scientific integrity. For researchers in drug development, an ethical DMP goes beyond data organization. It is a binding framework ensuring that data lifecycle management, from generation in assays and clinical trials to sharing and disposal, adheres to core ethical principles: respect for persons (and the data derived from them), beneficence, justice, and stewardship. This guide details the technical implementation of such a plan.

Foundational Ethical Principles & Regulatory Mapping

An ethical DMP operationalizes abstract principles into actionable protocols. The following table maps principles to specific data management requirements.

Table 1: Mapping Ethical Principles to DMP Requirements

| Ethical Principle | DMP Requirement | Technical/Procedural Manifestation |

|---|---|---|

| Respect for Persons/Autonomy | Informed Consent Management | Digital consent records linked to data; dynamic consent platforms for longitudinal studies; explicit data use boundaries. |

| Beneficence & Non-Maleficence | Risk-Benefit Analysis for Data | Anonymization/Pseudonymization protocols; data security risk assessments; controlled data access to prevent misuse. |

| Justice | Equitable Data Access & Benefits | FAIR (Findable, Accessible, Interoperable, Reusable) data implementation; clear data sharing policies that aid underrepresented communities. |

| Stewardship & Integrity | Data Quality & Traceability | Robust metadata standards (e.g., ISA-Tab); audit trails for all data modifications; detailed provenance tracking. |

| Accountability | Compliance & Oversight | Regular compliance audits (GDPR, HIPAA, GLP); defined roles (Data Custodian, PI); documentation of all decisions. |

Core Components of an Ethical DMP for Labs

3.1. Data Collection & Informed Consent Protocols

- Protocol: For human-derived samples (e.g., biopsies for biomarker research), implement a tiered consent model. Participants must consent separately for: 1) primary research, 2) long-term storage, and 3) future use in related studies. Consent forms must use clear language, specifying data types (genomic, proteomic, clinical) and sharing scope (open, collaborative, restricted).

- Protocol for Lab-Generated Data: For non-human data (e.g., high-throughput screening), document all experimental conditions rigorously using standardized templates (e.g., MIAME for microarray, ARRIVE for animal studies) to ensure reproducibility and prevent selective reporting.
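Tiered consent is easier to enforce when it is stored as structured data that analysis code checks before a sample is used. The sketch below is a hypothetical model: the tier labels mirror the three tiers described above, and it assumes that consent to a broader sharing scope also covers narrower ones (open covers collaborative, which covers restricted). A real implementation would read these records from the consent-management system rather than construct them in code.

```python
from dataclasses import dataclass

# Hypothetical labels for the three consent tiers described above.
TIERS = ("primary_research", "long_term_storage", "future_related_use")
# Assumption: a broader scope includes every narrower one.
SCOPE_RANK = {"restricted": 0, "collaborative": 1, "open": 2}


@dataclass(frozen=True)
class Consent:
    participant_id: str
    granted: frozenset      # subset of TIERS the participant agreed to
    data_types: frozenset   # e.g. {"genomic", "proteomic", "clinical"}
    sharing_scope: str      # "restricted", "collaborative", or "open"


def eligible(consent: Consent, use: str, data_type: str, scope: str) -> bool:
    """True only if the intended use, data type, and sharing scope all fall within consent."""
    if use not in TIERS:
        raise ValueError(f"unknown use tier: {use}")
    if scope not in SCOPE_RANK:
        raise ValueError(f"unknown sharing scope: {scope}")
    return (
        use in consent.granted
        and data_type in consent.data_types
        and SCOPE_RANK[scope] <= SCOPE_RANK[consent.sharing_scope]
    )
```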

3.2. Data Storage, Security, and Anonymization

- Security Protocol: Classify data based on sensitivity (Public, Internal, Confidential, Restricted). Implement encryption (AES-256 for data at rest, TLS 1.3+ for data in transit). Access must be role-based (PI, Post-doc, Technician) and logged. Regular penetration testing is mandatory.

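- Anonymization Protocol: For the clinical and demographic attributes accompanying genomic data, use tools such as ARX or Amnesia to apply k-anonymization; genomic sequences themselves are inherently identifying and generally require controlled access rather than anonymization. Direct identifiers must be removed and replaced with a persistent, coded ID. A separate, highly secured key file links codes to identities, accessible only to authorized personnel.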
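Before sharing, the k-anonymity of a table can be measured directly: k is the size of the smallest group of records that share the same combination of quasi-identifier values. The sketch below only measures k and lists the groups below a target (for example the k ≥ 5 benchmark in Table 3); it does not perform the generalization or suppression that tools such as ARX apply, and the column names are hypothetical. Note that k-anonymity alone does not prevent attribute disclosure when every record in a group shares the same sensitive value.

```python
from collections import Counter


def _class_sizes(records, quasi_identifiers):
    """Count records per combination of quasi-identifier values."""
    return Counter(tuple(rec[q] for q in quasi_identifiers) for rec in records)


def k_anonymity(records, quasi_identifiers) -> int:
    """Smallest equivalence-class size over the quasi-identifier columns."""
    if not records:
        raise ValueError("no records")
    return min(_class_sizes(records, quasi_identifiers).values())


def violating_classes(records, quasi_identifiers, k):
    """Quasi-identifier combinations shared by fewer than k records."""
    sizes = _class_sizes(records, quasi_identifiers)
    return {combo: n for combo, n in sizes.items() if n < k}
```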

3.3. Data Sharing, Publication, and Reuse Ethics

- Protocol: Prior to public deposition (e.g., in GEO or PDB), data must be de-identified and checked for inadvertent re-identification risk. A Data Use Agreement (DUA) must accompany shared data, stipulating allowable uses, prohibiting attempts to re-identify individuals, and requiring citation of the source.

3.4. Data Retention and Disposal

- Protocol: Define retention periods per data type (e.g., 25 years for clinical trial data, 7 years for instrumental raw data post-project end). Secure disposal: for electronic data, sanitize media following NIST SP 800-88 (e.g., cryptographic erase, secure overwrite, or physical destruction of media); the legacy multi-pass DoD 5220.22-M overwrite is unreliable on solid-state drives. For paper records, use cross-cut shredding followed by incineration.
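Retention periods translate into concrete disposal dates that a records system can flag automatically. This sketch assumes both example periods are counted from the project end date (the protocol states this explicitly only for instrument raw data), uses hypothetical data-type keys, and maps 29 February to 28 February in non-leap years, which is one reasonable convention rather than a regulatory one.

```python
from datetime import date

# Years of retention per data type, from the examples in the protocol above.
# Keys are hypothetical labels; both periods are assumed to run from project end.
RETENTION_YEARS = {"clinical_trial": 25, "instrument_raw": 7}


def add_years(d: date, years: int) -> date:
    """Add calendar years; 29 February becomes 28 February in non-leap target years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def earliest_disposal_date(data_type: str, project_end: date) -> date:
    return add_years(project_end, RETENTION_YEARS[data_type])


def due_for_disposal(data_type: str, project_end: date, today: date) -> bool:
    """True once the retention period has fully elapsed."""
    return today >= earliest_disposal_date(data_type, project_end)
```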

Implementation Workflow & Accountability

The following diagram outlines the continuous lifecycle and oversight of an ethical DMP.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 2: Key Reagents & Tools for Ethical Data Management in Lab Research

| Item/Category | Function in Ethical DMP Context |

|---|---|

| Electronic Lab Notebook (ELN) | Ensures data integrity, timestamping, and non-repudiation. Provides a secure, version-controlled record of experimental protocols and raw data. |

| Metadata Standards (ISA-Tab, MIAME) | Enable reproducibility and FAIR data principles by structuring experimental metadata (sample characteristics, protocols) in a machine-readable format. |

| Data Anonymization Software (ARX, Amnesia) | Mitigates risk of participant re-identification in shared datasets, upholding beneficence and confidentiality obligations. |

| Secure Biobank/LIMS | Manages sample and derived data linkage with strict access controls, ensuring chain of custody and compliance with consent terms. |

| Data Use Agreement (DUA) Templates | Legal instruments that operationalize ethical sharing by binding secondary users to specific, approved research purposes. |

| Audit Trail Software | Automatically logs all data accesses, modifications, and exports, providing accountability and a verifiable record for compliance audits. |

Quantitative Benchmarks for Ethical Compliance

Current best practices and regulations impose specific quantitative requirements for data management.

Table 3: Key Quantitative Benchmarks for an Ethical DMP

| Metric | Benchmark / Requirement | Rationale |

|---|---|---|

| Data Encryption Strength | AES-256 for data at rest; TLS 1.3 for data in transit. | Industry standard for protecting sensitive health and research data from breach. |

| Access Review Frequency | Semi-annual (twice-yearly) review of all user access permissions. | Prevents privilege creep and ensures only authorized personnel have data access. |

| Audit Trail Retention | Minimum 6 years (cf. HIPAA's six-year documentation retention); under 21 CFR Part 11, audit trails must be retained at least as long as the records they cover. | Enables reconstruction of data events for investigations and regulatory reviews. |

| Data Breach Response Time | Notification to supervisory authority within 72 hours (GDPR). | Legal requirement to mitigate harm from potential privacy violations. |

| Minimum Anonymization Standard | k-anonymity with k ≥ 5 for shared clinical data. | Statistically robust threshold to reduce re-identification risk in datasets. |

An Ethical DMP is the operational backbone of responsible research. It transforms ethical mandates into definitive technical specifications, ensuring that the immense value of laboratory data is realized without compromising the trust of participants, the integrity of science, or the legal and moral obligations of the research institution. For drug development professionals, a robust ethical DMP is not an administrative burden but a critical component of credible, reproducible, and socially beneficial science.

Standard Operating Procedures (SOPs) for Data Collection, Entry, and Storage

This document establishes Standard Operating Procedures (SOPs) for data lifecycle management within laboratory research settings. These procedures are a foundational component of a broader ethical framework for research data management, ensuring data integrity, reproducibility, and participant confidentiality in alignment with principles outlined in guidelines from the NIH, FDA, and international bodies like the OECD. Adherence to these SOPs is mandatory for all research personnel to maintain scientific rigor and public trust.

SOP for Data Collection

Pre-Collection Planning and Ethical Approval

- Protocol Registration: All experimental protocols must be pre-registered in a recognized repository (e.g., ClinicalTrials.gov, OSF) before data collection commences.

- Informed Consent: For human subjects research, documented informed consent must be obtained and stored separately from research data.

- Data Collection Plan (DCP): A DCP must be finalized, detailing:

- Variables to be measured (with operational definitions).

- Measurement instruments and their calibration schedules.

- Sample identification and anonymization/pseudonymization protocols.

- Primary and secondary endpoints.

Collection Methodology

- Instrument Calibration: Log all calibration activities using a controlled form.

- Source Data Capture: Collect data directly into electronic formats (e.g., Electronic Lab Notebooks - ELNs, LIMS) whenever possible to minimize transcription error.

- Metadata Capture: Collect critical metadata (e.g., date, time, operator, instrument ID, software version, environmental conditions) concurrently with primary data.

Quality Control During Collection

- Implement routine positive/negative controls within experimental runs.

- For observational studies, ensure inter-rater reliability is calculated and maintained above a pre-defined threshold (e.g., Cohen's κ > 0.8).
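Cohen's κ compares the observed agreement p_o between two raters with the agreement p_e expected by chance from each rater's category frequencies: κ = (p_o - p_e) / (1 - p_e). A minimal standard-library implementation for two raters scoring the same items follows; the function name is illustrative.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b) -> float:
    """Cohen's kappa for two raters assigning categories to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same category.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)
```

For example, two raters who agree on 8 of 10 binary calls, each using each category 5 times, have p_o = 0.8 and p_e = 0.5, so κ = 0.6, which falls below the κ > 0.8 threshold.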

Table 1: Minimum Required Metadata for Experimental Data Collection

| Metadata Category | Specific Fields | Format/Standard |

|---|---|---|

| Project Identification | Protocol ID, Principal Investigator | Text |

| Sample Information | Sample ID, Group Assignment, Date of Collection | Text, ISO 8601 (YYYY-MM-DD) |

| Experimental Conditions | Temperature, Humidity, Passage Number, Reagent Lot # | Numeric, Text |

| Personnel & Instrument | Operator Initials, Instrument ID, Software Version | Text |

| Data File Info | File Name, Creation Date, Path in Repository | Text, ISO 8601 |

SOP for Data Entry and Validation

Data Entry Protocol

- Double-Data Entry: For manual entry from analog sources, a two-person independent entry system with reconciliation is required for critical data.

- Validation Rules: Implement field-level validation in data entry forms (e.g., range checks, data type checks, mandatory fields).

- Audit Trail: All data entry and modifications must be recorded in an immutable audit trail that captures who, what, when, and why.
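The reconciliation step of double-data entry amounts to a field-by-field comparison of two independent passes. The sketch below assumes each pass is a dictionary of `record_id -> {field: value}` (a hypothetical layout) and compares values exactly as entered; it reports every mismatch, including records or fields present in only one pass, so a third person can resolve each discrepancy against the source document.

```python
_MISSING = object()  # sentinel distinguishing "field absent" from a stored None


def reconcile(entry_1: dict, entry_2: dict) -> list:
    """Return (record_id, field, value_1, value_2) for every disagreement.

    Values absent from one pass are reported as None.
    """
    discrepancies = []
    for record_id in sorted(set(entry_1) | set(entry_2)):
        rec_1 = entry_1.get(record_id, {})
        rec_2 = entry_2.get(record_id, {})
        for field in sorted(set(rec_1) | set(rec_2)):
            v1 = rec_1.get(field, _MISSING)
            v2 = rec_2.get(field, _MISSING)
            if v1 != v2:
                discrepancies.append((
                    record_id,
                    field,
                    None if v1 is _MISSING else v1,
                    None if v2 is _MISSING else v2,
                ))
    return discrepancies
```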

Data Cleaning and Transformation

- Document all data cleaning steps (e.g., handling of outliers, imputation of missing data) in a reproducible script (e.g., R, Python).

- Maintain the raw data set in a read-only format. All transformations create derived data sets.

Table 2: Data Validation Checks and Acceptance Criteria

| Check Type | Description | Example Acceptance Criteria |

|---|---|---|

| Range Check | Value falls within plausible limits. | pH value between 0 and 14. |

| Format Check | Data matches required pattern. | Sample ID matches 'PROJ-XXX-####'. |

| Consistency Check | Logical relationship between fields holds. | 'Sacrifice Date' is not before 'Birth Date'. |

| Completeness Check | Required field is not empty. | No null values in 'Primary Outcome' column. |
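The four check types in Table 2 can be expressed as a single row-level validator. This is a sketch with hypothetical field names; it also assumes that 'PROJ-XXX-####' means three uppercase letters followed by four digits, which should be adjusted to the project's actual ID scheme. Dates are expected as `datetime.date` objects so that the consistency check compares them chronologically.

```python
import re

# Assumption: "XXX" = three uppercase letters, "####" = four digits.
SAMPLE_ID = re.compile(r"PROJ-[A-Z]{3}-[0-9]{4}")


def validate_row(row: dict) -> list:
    """Apply the Table 2 checks to one record; return a list of error messages."""
    errors = []
    # Completeness check: required field must not be empty.
    if row.get("primary_outcome") in (None, ""):
        errors.append("completeness: primary_outcome is empty")
    # Range check: pH must lie within 0-14.
    ph = row.get("ph")
    if ph is not None and not 0 <= ph <= 14:
        errors.append(f"range: pH {ph} outside 0-14")
    # Format check: sample ID must match the required pattern exactly.
    sample_id = str(row.get("sample_id") or "")
    if not SAMPLE_ID.fullmatch(sample_id):
        errors.append(f"format: sample_id {sample_id!r} does not match PROJ-XXX-####")
    # Consistency check: sacrifice date cannot precede birth date.
    birth, sacrifice = row.get("birth_date"), row.get("sacrifice_date")
    if birth and sacrifice and sacrifice < birth:
        errors.append("consistency: sacrifice_date precedes birth_date")
    return errors
```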

SOP for Data Storage, Backup, and Security

Storage Architecture and Naming

- File Naming Convention: Use the structured convention ProjectID_ExperimentID_YYYYMMDD_Operator_FileVersion.ext.

- Directory Structure: Implement a standard, documented folder hierarchy separating Raw, Processed, Analysis, and Documentation data.
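A naming convention is only useful if it is enforced, and a small builder/parser pair lets scripts and ingestion tools reject non-conforming names. The sketch assumes that no component contains an underscore, that Operator is letters only (e.g., initials), and that FileVersion is written as `v` plus a zero-padded number; these are illustrative choices, not part of the SOP text.

```python
import re
from datetime import date, datetime

# Assumptions: no underscores inside components; Operator = letters; FileVersion = "v" + digits.
PATTERN = re.compile(
    r"(?P<project>[A-Za-z0-9-]+)_(?P<experiment>[A-Za-z0-9-]+)_"
    r"(?P<date>[0-9]{8})_(?P<operator>[A-Za-z]+)_(?P<version>v[0-9]+)\.(?P<ext>[A-Za-z0-9]+)"
)


def parse_name(name: str):
    """Return the name's components as a dict, or None if it breaks the convention."""
    m = PATTERN.fullmatch(name)
    if m is None:
        return None
    try:
        datetime.strptime(m["date"], "%Y%m%d")  # rejects impossible dates such as 20240231
    except ValueError:
        return None
    return m.groupdict()


def build_name(project: str, experiment: str, day: date, operator: str,
               version: int, ext: str) -> str:
    """Assemble a conforming file name, raising if the components cannot produce one."""
    name = f"{project}_{experiment}_{day:%Y%m%d}_{operator}_v{version:02d}.{ext}"
    if parse_name(name) is None:
        raise ValueError(f"components do not produce a valid name: {name}")
    return name
```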

Backup and Preservation

- 3-2-1 Backup Rule: Maintain 3 total copies of data, on 2 different media, with 1 copy offsite/cloud.

- Backup Schedule: Incremental backups daily, full backups weekly. Verify backup integrity quarterly.

- Long-Term Archiving: On project completion, archive data in a FAIR-aligned institutional or public repository (e.g., Zenodo, Figshare, dbGaP).
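The quarterly backup-integrity verification can be implemented by comparing SHA-256 checksum manifests of the primary copy and a backup copy. The sketch below is a minimal standard-library version with hypothetical function names; it reports files missing from the backup, files present only in the backup, and files whose contents differ. In practice the primary manifest would be stored at backup time so that corruption of the primary copy is also detectable.

```python
import hashlib
from pathlib import Path


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large raw files need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's path relative to root (POSIX style) to its SHA-256 digest."""
    return {p.relative_to(root).as_posix(): sha256_file(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_copy(primary: Path, backup: Path) -> dict:
    """Compare a backup with the primary copy file by file."""
    a, b = build_manifest(primary), build_manifest(backup)
    return {
        "missing_in_backup": sorted(set(a) - set(b)),
        "extra_in_backup": sorted(set(b) - set(a)),
        "checksum_mismatch": sorted(k for k in set(a) & set(b) if a[k] != b[k]),
    }
```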

Security and Access Control

- Data Classification: Classify data based on sensitivity (e.g., Public, Internal, Confidential, Restricted).

- Access Management: Implement role-based access control (RBAC). Access to personal health information (PHI) requires additional authentication and audit logging.

- Encryption: Encrypt all data at rest and in transit for Confidential and Restricted levels.

Diagram 1: Ethical Data Management Lifecycle

Experimental Protocols for Data Quality Assurance

Protocol: Assessment of Intra-Assay Precision (Repeatability)

- Objective: To determine the variability in data generated by a single operator using the same instrument and reagents in one session.

- Methodology:

- Prepare a homogeneous sample aliquot.

- Perform the measurement of interest (e.g., concentration, absorbance) in 10-20 technical replicates within a single experimental run.

- Record all results with associated metadata.

- Analysis: Calculate the mean, standard deviation (SD), and coefficient of variation (CV%). SOP acceptance criteria: CV% < [protocol-specific threshold, e.g., 5%].
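The repeatability analysis reduces to CV% = 100 × SD / mean over the technical replicates. The sketch below assumes the sample standard deviation (n - 1 denominator), which the protocol does not specify, and uses a hypothetical function name with the 5% example threshold as its default.

```python
import statistics


def precision_summary(replicates, cv_threshold_pct=5.0):
    """Mean, sample SD, and CV% of technical replicates, with a pass flag."""
    if len(replicates) < 2:
        raise ValueError("need at least two replicates")
    mean = statistics.mean(replicates)
    if mean == 0:
        raise ValueError("CV% is undefined when the mean is zero")
    sd = statistics.stdev(replicates)  # sample SD, n - 1 denominator
    cv_pct = 100.0 * sd / abs(mean)
    return {"n": len(replicates), "mean": mean, "sd": sd,
            "cv_pct": cv_pct, "passes": cv_pct < cv_threshold_pct}
```

For instance, replicates of 9, 10, and 11 give a mean of 10, an SD of 1, and a CV of 10%, which fails a 5% acceptance criterion.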

Protocol: Audit Trail Verification

- Objective: To verify the integrity and completeness of the electronic audit trail.

- Methodology:

- Quarterly, select a random sample of 5% of data transactions from the previous period.

- Manually verify that the audit log entry contains: User ID, Date/Time Stamp, Action (Create, Modify, Delete), and Justification (if required).

- For modified entries, verify the prior value is recoverable.

- Analysis: Report the percentage of entries passing verification. Acceptance: 100% compliance.
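The sampling and checklist steps can be scripted so that the quarterly review is repeatable. This sketch assumes audit entries are exported as dictionaries with hypothetical keys (`user_id`, `timestamp`, `action`, `old_value`, `reason`) and that local SOPs require a justification for modifications and deletions; passing a fixed `seed` lets an auditor reproduce the same sample.

```python
import random

REQUIRED_FIELDS = ("user_id", "timestamp", "action")
VALID_ACTIONS = {"create", "modify", "delete"}
REASON_REQUIRED = {"modify", "delete"}  # assumption: local SOP requires justification here


def sample_transactions(log, fraction=0.05, seed=None):
    """Simple random sample of audit entries (at least one if the log is non-empty)."""
    if not log:
        return []
    n = max(1, round(len(log) * fraction))
    return random.Random(seed).sample(log, n)


def entry_passes(entry) -> bool:
    """Checklist: required fields, valid action, justification, recoverable prior value."""
    if any(not entry.get(f) for f in REQUIRED_FIELDS):
        return False
    if entry["action"] not in VALID_ACTIONS:
        return False
    if entry["action"] in REASON_REQUIRED and not entry.get("reason"):
        return False
    if entry["action"] == "modify" and entry.get("old_value") is None:
        return False  # the prior value must be recoverable
    return True


def pass_rate(sample) -> float:
    """Percentage of sampled entries passing; the acceptance criterion is 100.0."""
    if not sample:
        raise ValueError("empty sample")
    return 100.0 * sum(entry_passes(e) for e in sample) / len(sample)
```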

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Robust Data Management

| Item | Function in Data Management |

|---|---|

| Electronic Lab Notebook (ELN) | Digital system for recording protocols, observations, and raw data in a time-stamped, attributable manner. Essential for audit trails. |

| Laboratory Information Management System (LIMS) | Software for tracking samples, associated data, and workflows. Automates data capture from instruments and manages metadata. |

| Version Control System (e.g., Git) | Tracks changes to code and scripts used for data transformation/analysis, enabling reproducibility and collaboration. |

| Reference Management Software (e.g., Zotero) | Organizes literature and can link citations to specific data sets, supporting provenance. |

| Data Repository (e.g., Zenodo, Institutional) | Provides a stable, citable platform for long-term data archiving and sharing, fulfilling grant requirements. |

| Standardized Reference Materials | Certified materials used to calibrate instruments and validate assays, ensuring data accuracy across time and labs. |

Diagram 2: Data Validation and Cleaning Workflow

Within the broader ethical framework for data management in laboratory research, ensuring data integrity is a foundational pillar. It encompasses the maintenance and assurance of data accuracy and consistency throughout its lifecycle, from initial acquisition in an Electronic Lab Notebook (ELN) to long-term storage in secure databases. Ethical research mandates that data be attributable, legible, contemporaneous, original, and accurate (the ALCOA principles); ALCOA+ further requires that data be complete, consistent, enduring, and available. Failures in data integrity compromise scientific validity, erode public trust, and in regulated industries like drug development, can lead to severe regulatory and legal repercussions.

The Data Integrity Pipeline: From Capture to Curation

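A robust data integrity strategy requires a traceable pipeline in which every handoff, from instrument to ELN to long-term database, is authenticated, logged, and verifiable.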

The Role of the Electronic Lab Notebook (ELN)

The ELN serves as the primary point of data capture. Its ethical and technical configuration is critical.

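- Attributability & Contemporaneous Entry: ELNs enforce user authentication (e.g., via LDAP or SSO) and stamp every entry with a server-generated time that users cannot edit, which is what prevents back-dating. Configuring sessions to auto-save and to lock after inactivity further ensures that entries are captured as work happens and cannot be made under another user's open session.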

- Original Data Capture: Modern ELNs integrate directly with instruments (e.g., plate readers, microscopes) via APIs or instrument drivers, ingesting raw data files (.csv, .tiff) to prevent manual transcription errors.

- Protocol Management: ELNs store version-controlled experimental protocols (SOPs) and link them directly to the data they generate, ensuring methodological reproducibility.
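The attributability and contemporaneity requirements above can be made concrete with a small data model. The sketch below is a hypothetical, in-memory illustration (the `ElnEntry` and `EntryVersion` classes and their fields are assumptions, not any ELN vendor's API): each amendment appends a new version carrying the author, a UTC timestamp from the clock of the machine running the code (assumed to be the server), and a reason, so prior values stay recoverable, and signing locks the record.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class EntryVersion:
    """One immutable version of a (hypothetical) ELN entry."""
    author: str          # authenticated user ID (e.g., taken from the SSO session)
    timestamp: datetime  # assigned by the server clock, never supplied by the client
    content: str
    reason: str          # why the entry was created or amended


@dataclass
class ElnEntry:
    """Hypothetical ELN record: amendments append a new version and never overwrite."""
    entry_id: str
    versions: list[EntryVersion] = field(default_factory=list)
    locked: bool = False

    def record(self, author: str, content: str, reason: str) -> EntryVersion:
        if self.locked:
            raise PermissionError(f"{self.entry_id} is signed and locked")
        version = EntryVersion(author, datetime.now(timezone.utc), content, reason)
        self.versions.append(version)
        return version

    def sign_and_lock(self) -> None:
        """Stand-in for an electronic signature: no further versions are accepted."""
        self.locked = True

    @property
    def current(self) -> str:
        return self.versions[-1].content


if __name__ == "__main__":
    entry = ElnEntry("EXP-001")
    entry.record("jdoe", "A562 = 0.812", "initial entry")
    entry.record("jdoe", "A562 = 0.821", "transcription error corrected")
    entry.sign_and_lock()
    print(entry.current)              # A562 = 0.821
    print(entry.versions[0].content)  # A562 = 0.812 (prior value still recoverable)
```

In a real system, immutability comes from the ELN's storage and audit-trail layer; a Python list can still be mutated by other code, so this only illustrates the record structure.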

Table 1: Comparison of Common ELN Features for Data Integrity

| Feature | Basic ELN | Advanced/Regulated ELN | Function for Integrity |
|---|---|---|---|
| Audit Trail | Manual version history | Immutable, FDA 21 CFR Part 11 compliant | Tracks all create, modify, and delete actions |
| Electronic Signatures | Username only | Biometric or two-factor authentication (2FA) | Ensures attributability and non-repudiation |
| Direct Instrument Integration | Manual file upload | API-based, automated metadata capture | Prevents transcription errors, preserves original data |
| Data Export Format | Proprietary, PDF | Standardized (CDISC, ISA-TAB), machine-readable | Facilitates secure archiving and sharing |

Diagram Title: ELN Data Capture Workflows

Secure Transfer and Database Archiving

Data must be securely transferred from the ELN to a dedicated, managed database (e.g., LIMS, SDMS, or institutional repository).

Experimental Protocol: Validated Data Export and Transfer

- Objective: To ensure data files are transferred from the ELN to a secure database without corruption or alteration.

- Materials: ELN with API access, a secure file transfer protocol (SFTP) server or REST API endpoint, and a checksum utility implementing SHA-256 (e.g., sha256sum).

- Methodology:

- Within the ELN, the researcher finalizes the experiment and selects data for export.

- The ELN system generates a package containing all raw data, metadata, and protocol links.

- Before transfer, the system computes a cryptographic hash (SHA-256) of the data package.

- The package and its hash are transferred via a secure, encrypted channel (e.g., SFTP, HTTPS) to the pre-configured database ingestion endpoint.

- The receiving database computes the hash of the incoming package.

- A validation script compares the source and destination hashes. A match confirms that the package arrived unaltered; a mismatch triggers an alert, and the transfer is logged as failed.

- Upon successful validation, the data is written to the immutable storage layer of the database, and its location is indexed.
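The hash comparison in the methodology above can be sketched with Python's standard `hashlib` module. This is a minimal illustration under stated assumptions: the package is a single file, a local file copy stands in for the SFTP/HTTPS transfer, `sha256_of` and `ingest` are hypothetical helper names, and an in-memory list stands in for the system audit trail.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file through SHA-256 so large packages need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def ingest(received: Path, source_hash: str, audit_log: list[str]) -> bool:
    """Receiving side: recompute the hash and accept the package only on a match."""
    actual = sha256_of(received)
    if actual != source_hash:
        audit_log.append(f"FAILED {received.name}: expected {source_hash}, got {actual}")
        return False
    audit_log.append(f"OK {received.name}: {actual}")
    # A real endpoint would now write to immutable storage and index the location.
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        package = Path(tmp, "EXP-001.pkg")
        package.write_bytes(b"raw plate-reader data")
        sent_hash = sha256_of(package)                  # computed before transfer
        received = Path(tmp, "received.pkg")
        shutil.copyfile(package, received)              # stands in for SFTP/HTTPS
        log: list[str] = []
        print(ingest(received, sent_hash, log))         # True
        received.write_bytes(b"raw plate-reader dat4")  # simulate corruption in transit
        print(ingest(received, sent_hash, log))         # False
```

Hashing in fixed-size chunks keeps memory use constant regardless of package size, which matters for imaging or sequencing outputs.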

The Secure Database: Immutability and Access Control

The final repository must enforce long-term integrity.

- Immutable Storage: Utilizes Write-Once-Read-Many (WORM) or append-only storage to prevent deletion or overwriting.

- Redundancy: Implements geographically distributed replication (e.g., following the 3-2-1 backup rule: at least three copies, on two different storage media, with one copy off-site) to protect against data loss.

- Access Logs: Maintains detailed, immutable logs of all data access attempts, queries, and user actions, crucial for auditability.
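One common way to make access logs tamper-evident, offered here as a swapped-in illustration rather than a feature of any particular database, is hash chaining: each record stores the hash of the previous record, so altering or deleting an earlier entry breaks every later link. The `HashChainedLog` class below is hypothetical; it detects tampering but does not prevent it, so in practice it would complement, not replace, WORM or append-only storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class HashChainedLog:
    """Append-only access log in which each record commits to its predecessor's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first record

    def __init__(self) -> None:
        self.records: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash a canonical JSON form of every field except the stored hash itself.
        payload = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, user: str, action: str, target: str) -> dict:
        prev = self.records[-1]["hash"] if self.records else self.GENESIS
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "target": target,
            "prev": prev,
        }
        record["hash"] = self._digest(record)
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every link; any edited, reordered, or deleted record fails."""
        prev = self.GENESIS
        for record in self.records:
            if record["prev"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True


if __name__ == "__main__":
    log = HashChainedLog()
    log.append("jdoe", "READ", "EXP-001/raw.csv")
    log.append("asmith", "QUERY", "sample_id=S-17")
    print(log.verify())                 # True
    log.records[0]["user"] = "mallory"  # simulate an after-the-fact edit
    print(log.verify())                 # False
```

Because the last record's hash summarizes the whole history, periodically copying it to separate write-once storage lets an auditor detect even a wholesale rewrite of the log.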

Diagram Title: Secure Database Architecture & Audit

The Scientist's Toolkit: Research Reagent Solutions for Data Integrity

Table 2: Essential Digital "Reagents" for Data Integrity

| Item | Function in Data Integrity Pipeline |
|---|---|
| Cryptographic Hash Function (SHA-256) | Digital fingerprint for a file; verifies that data has not been altered during transfer or storage. |
| API Keys & Tokens | Secure credentials allowing automated, permissioned communication between instruments, ELNs, and databases. |
| Electronic Signature (Compliant) | An electronic signature linked to its record that captures the signer, date/time, and meaning of the signature (e.g., review, approval), as required by regulations such as 21 CFR Part 11. |
| Audit Trail Software Module | System component that automatically records the who, what, when, and why of any data-related action. |
| Standardized Data Format (e.g., ISA-TAB) | A structured, metadata-rich file format that ensures data is self-describing and interoperable between systems. |
| Immutable Storage Medium | Hardware/software configuration (e.g., WORM drive) that prevents data deletion or modification after writing. |

Experimental Protocol: Validating a Complete Integrity Workflow

- Objective: To demonstrate end-to-end data integrity from instrument to database for a plate reader assay.

- Materials: Microplate reader with API, ELN (e.g., LabArchives, Benchling), SFTP server, secure database with ingestion API, Python scripting environment.

- Methodology:

- Assay Execution: Run a standard protein quantification assay (e.g., BCA) in the plate reader. Configure the instrument to push the raw data file (.csv) and run metadata to a watched directory.

- Automated ELN Capture: A directory monitoring script (e.g., Python Watchdog) triggers upon file creation. It authenticates with the ELN API, creates a new experiment entry with the current user and timestamp, and attaches the raw file. The script records the ELN-generated unique ID for the entry.

- ELN Curation: The researcher adds contextual metadata (sample IDs, reagent lots) in the ELN, then applies an electronic signature to lock the record.

- Scheduled Export: A nightly job queries the ELN API for signed experiments, packages the raw data, metadata, and a PDF audit report, and computes a SHA-256 hash of the package.

- Secure Transfer & Validation: The job transmits the package and its hash to the database's HTTPS endpoint. The database's ingestion service recalculates the hash, compares it with the transmitted value, and, on a match, writes the package to its immutable object store (e.g., Amazon S3 with Object Lock enabled). Success or failure is logged to the system audit trail.

- Verification Test: Manually query the database index by sample ID and compare the retrieved raw data against the original file on the plate reader (e.g., by comparing SHA-256 hashes) to confirm that the archived copy is identical.
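The scheduled export and the final verification test can be sketched together. The sketch assumes the export is a ZIP archive containing the raw files, a `metadata.json`, and a per-file SHA-256 manifest (a layout chosen for illustration, loosely similar in spirit to the BagIt packaging format); the function names, metadata keys, and file names are hypothetical, and the PDF audit report is omitted.

```python
import hashlib
import json
import tempfile
import zipfile
from pathlib import Path


def file_sha256(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_package(files: list[Path], metadata: dict, out_zip: Path) -> str:
    """Bundle raw files, metadata, and a per-file SHA-256 manifest; return the package hash."""
    manifest = {f.name: file_sha256(f) for f in files}
    with zipfile.ZipFile(out_zip, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            zf.write(f, arcname=f"data/{f.name}")
        zf.writestr("metadata.json", json.dumps(metadata, indent=2, sort_keys=True))
        zf.writestr("manifest-sha256.json", json.dumps(manifest, indent=2, sort_keys=True))
    return file_sha256(out_zip)  # transmitted alongside the package


def matches_original(package: Path, original: Path) -> bool:
    """Verification test: archived copy, manifest entry, and instrument file must agree."""
    with zipfile.ZipFile(package) as zf:
        manifest = json.loads(zf.read("manifest-sha256.json"))
        archived_hash = hashlib.sha256(zf.read(f"data/{original.name}")).hexdigest()
    return archived_hash == manifest[original.name] == file_sha256(original)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp, "bca_plate1.csv")
        raw.write_text("well,A562\nA1,0.812\n")
        meta = {"eln_entry_id": "EXP-001", "sample_ids": ["S-17"], "reagent_lot": "LOT-42"}
        package = Path(tmp, "EXP-001.zip")
        print(build_package([raw], meta, package))
        print(matches_original(package, raw))  # True
```

Per-file hashes let a verifier check a single retrieved file without trusting the whole archive; reading each file fully into memory keeps the sketch short, whereas large instrument files would call for chunked hashing.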

By implementing these technical and procedural controls within an ethical framework, laboratories can create a demonstrably trustworthy environment for research data throughout its lifecycle.

Within the framework of ethical guidelines for data management in laboratory research, formalized agreements are critical for ensuring responsible stewardship. Collaboration Agreements (CAs) and Material Transfer Agreements (MTAs) serve as the legal and ethical bedrock for sharing data and proprietary materials. They operationalize principles of fairness, transparency, and reciprocity, protecting intellectual property while fostering scientific advancement.

Quantitative Landscape of Data & Material Sharing Agreements

Recent surveys illustrate the prevalence and challenges associated with data and material sharing in research.

Table 1: Key Metrics in Academic Data & Material Sharing (2023-2024)

| Metric | Value | Primary Challenge Cited |
|---|---|---|
| Researchers involved in sharing data | 78% | Unclear ownership/IP terms (45%) |
| Projects utilizing MTAs | 65% | Administrative delays >60 days (55%) |
| CAs with explicit data management plans | 52% | Defining "background" vs. "foreground" IP (38%) |
| Instances of sharing denied due to MTA issues | 31% | Publication restrictions (40%) |
| Agreements with ethical use clauses | 68% | Compliance monitoring (50%) |

Core Components of Ethical Agreements

Collaboration Agreements (CAs)

A CA defines the terms of a joint research project. Key ethical and technical clauses include:

- Purpose & Scope: A precisely defined research plan.

- Contributions: Detailed list of data, materials, and resources each party provides.

- Data Management Plan (DMP): Specifies formats, metadata standards (e.g., ISA-Tab), storage, security (encryption at rest/in-transit), and sharing timelines.

- Intellectual Property (IP): Clear definitions of background IP (pre-existing) and foreground IP (arising from the project), including invention disclosure procedures.

- Publication & Authorship: Adherence to ICMJE guidelines, with a defined review period (typically 30-60 days) to protect IP.

- Termination & Data Disposition: Protocols for archiving or destroying data upon project conclusion, aligned with FAIR principles where applicable.

Material Transfer Agreements (MTAs)

MTAs govern the transfer of tangible research materials (e.g., cell lines, plasmids, chemical compounds). Key provisions include:

- Defining the Material: Unique identifier, version, and any relevant genomic or chemical metadata.

- Restrictions on Use: Limited to the specific research outlined in the agreement. Prohibitions on human/clinical use, commercial use, or reverse engineering unless explicitly permitted.

- Safety & Compliance: Requiring adherence to biosafety guidance (e.g., the CDC/NIH Biosafety in Microbiological and Biomedical Laboratories, BMBL), chemical safety, and animal welfare regulations.

- Results & IP: Stipulations regarding ownership of modifications or new inventions created using the material.

- Liability & Warranty: Typically, materials are provided "as-is" with no warranty.

Protocol for Implementing an Ethical Data Sharing Framework

This methodology outlines steps for establishing a compliant data sharing process under a CA or MTA.

Experimental Protocol: Ethical Data Sharing Workflow

Title: Protocol for Secure, Agreement-Compliant Data Transfer and Use.

Objective: To ensure the secure, ethically compliant, and traceable transfer of research data between institutions under a governing CA/MTA.

Materials: See "The Scientist's Toolkit" (Table 2) below.

Procedure:

- Pre-Transfer Compliance Check:

- Verify that the intended data use is within the scope defined in the executed CA/MTA.

- Confirm that all necessary IRB/IACUC approvals and consent forms permit the proposed sharing.

- Data De-identification & Anonymization:

- For human subject data, apply a validated de-identification protocol (e.g., the HIPAA Safe Harbor method, which requires removing all 18 designated identifiers).

- If re-identification must remain possible (e.g., for participant follow-up), use pseudonymization, keeping the linking key in a secure file stored separately from the shared data.

- Data Packaging & Documentation:

- Format data according to agreed standards (e.g., genomic data in FASTQ/FASTA; phenotypic data in CSV with controlled vocabulary).

- Generate a README file detailing structure, column definitions, units, and any processing steps.

- Create a comprehensive metadata file using an agreed schema (e.g., JSON-LD following schema.org standards).

- Secure Transfer:

- Encrypt the data package (data, README, metadata) using AES-256 encryption.

- Transmit the encrypted package via a secure, auditable file transfer platform (e.g., SFTP, Globus).

- Transmit the decryption key via a separate communication channel (e.g., institutional encrypted email).

- Accession & Audit Trail:

- The recipient institution logs the data receipt, checks for integrity (e.g., via checksum), and stores it in a secure, access-controlled environment.

- Both parties update their internal data catalogs with the transfer record, including DOI if assigned.
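The pseudonymization step in the procedure above can be sketched as follows. The sketch assumes tabular records held as dictionaries keyed by hypothetical column names (`mrn`, `name`, `dob`, `email`, `il6_pg_ml`); it strips only an illustrative subset of direct identifiers and is not, by itself, a HIPAA Safe Harbor implementation. Random codes from Python's `secrets` module are used instead of hashes of the identifier, because a hash is derived from the identifier and can be reversed by hashing every plausible value.

```python
import secrets

# Illustrative subset only; the HIPAA Safe Harbor method lists 18 identifier types.
DIRECT_IDENTIFIERS = {"mrn", "name", "dob", "email"}


def pseudonymize(rows: list[dict], id_field: str = "mrn") -> tuple[list[dict], dict[str, str]]:
    """Return (shareable rows, key map). The key map must be stored apart and never shared."""
    key_map: dict[str, str] = {}
    used: set[str] = set()
    shared: list[dict] = []
    for row in rows:
        subject = row[id_field]
        if subject not in key_map:
            code = f"SUBJ-{secrets.token_hex(4)}"
            while code in used:  # guard against the (very unlikely) collision
                code = f"SUBJ-{secrets.token_hex(4)}"
            used.add(code)
            key_map[subject] = code
        # Drop direct identifiers and the linking field; keep the measurements.
        clean = {k: v for k, v in row.items() if k not in DIRECT_IDENTIFIERS and k != id_field}
        clean["subject_code"] = key_map[subject]
        shared.append(clean)
    return shared, key_map


if __name__ == "__main__":
    rows = [
        {"mrn": "123", "name": "A. Doe", "dob": "1970-01-01", "il6_pg_ml": "4.2"},
        {"mrn": "123", "name": "A. Doe", "dob": "1970-01-01", "il6_pg_ml": "5.0"},
        {"mrn": "456", "name": "B. Roe", "dob": "1982-05-09", "il6_pg_ml": "3.1"},
    ]
    shared, key_map = pseudonymize(rows)
    print(shared)
```

The returned `key_map` plays the role of the separate key file: it would be written to access-controlled storage apart from the shared data, and only the `shared` rows would enter the encrypted transfer package.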

Visualizing Key Workflows and Relationships

Title: Governance of Data & Materials in Research Agreements

Title: Ethical Data Sharing Protocol Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Tools for Managing Data & Material Transfers

| Tool Category | Specific Item/Software | Function in Ethical Sharing |
|---|---|---|
| Data Anonymization | ARX Data Anonymization Tool, sdcMicro | De-identifies sensitive human subject data with risk assessment metrics. |
| Secure Transfer | Globus, SFTP Server (e.g., OpenSSH), Box | Provides encrypted, logged, and reliable large-scale data transfer. |
| Metadata Management | ISAcreator (ISA-Tab), OMOP Common Data Model | Standardizes experimental metadata (ISA-Tab) or observational clinical data (OMOP CDM) to support reproducibility and FAIR compliance. |
| Encryption | GNU Privacy Guard (GPG), 7-Zip (AES-256) | Encrypts data packages at rest and prepares them for secure transfer. |
| Agreement Templates | UBMTA, NIH SRA, AUTM Model Agreements | Standardized MTA/CA templates that accelerate negotiations. |
| Data Catalogs | openBIS, DKAN, Custom REDCap Catalog | Tracks data lineage, access permissions, and links to governing agreements. |