From Principles to Practice: A Complete Guide to the EthicsGuide Six-Step Method for Clinical Guidelines

Abstract

This article provides a comprehensive guide for researchers, scientists, and drug development professionals on implementing the EthicsGuide six-step method for developing clinical practice guidelines (CPGs). We explore the foundational ethical principles underpinning the framework, detail the practical application of each methodological step, address common challenges and optimization strategies, and validate the approach through comparison with other major CPG development standards. The goal is to equip stakeholders with a robust, ethically grounded, and practical roadmap for creating trustworthy and implementable clinical guidance.

Why Ethics Is Non-Negotiable: The Core Principles Behind the EthicsGuide Framework

Application Notes: An EthicsGuide Framework for Guideline Remediation

These notes apply the six-step EthicsGuide method to diagnose and address trust deficits in existing clinical guidelines. The protocols use cardiovascular disease (CVD) and depression management guidelines as exemplars.

Table 1: Quantitative Analysis of Guideline Trust Crisis (2020-2024)

| Metric | Data Source | Finding | Implication for Trust |
|---|---|---|---|
| Financial Conflict Prevalence | Analysis of 200 US guidelines (JAMA, 2022) | 52% of chairs had financial COIs; 78% of panels had ≥1 member with COI. | Undermines perceived objectivity. |
| Gender & Racial Bias in Evidence Base | Review of 100 CVD trial cohorts (NEJM, 2023) | Women represented <35% of participants; racial breakdown reported in only 41% of trials. | Limits generalizability and perpetuates care disparities. |
| Implementation Gap | CDC survey on hypertension guideline adherence (2024) | Only 43.7% of eligible adults had blood pressure under control per latest guidelines. | Highlights systemic failure in translating evidence to practice. |
| Methodological Rigor Score | AGREE II appraisal of 50 recent guidelines (BMJ Open, 2023) | Average "Rigor of Development" domain score: 58%. Significant variability noted. | Raises concerns about evidence synthesis and recommendation strength. |

Protocols for Empirical Assessment of Guideline Integrity

Protocol 1: Quantifying Commercial Influence in Guideline Development Panels

Objective: To measure the scope and magnitude of financial conflicts of interest (fCOIs) within a guideline development group (GDG).

- Panel Composition Audit: Identify all GDG members (chair, co-chairs, full panel) for the target guideline.

- COI Disclosure Scraping: Systematically extract all disclosed fCOIs from the guideline publication, supplementary appendices, and relevant medical society archives for the 36 months prior to guideline commencement.

- Data Codification: Code payments into categories (consulting, speaking, research funding, equity) and aggregate annual sums per company. Use Open Payments (US) and similar international databases for validation.

- Network Analysis: Create a bipartite network mapping GDG members to pharmaceutical/device companies. Calculate metrics: % of panel with any fCOI, average payment value, and network density.

- Correlation Analysis: For voting-based recommendations, assess correlation between an individual's fCOI status and their stance on recommendations involving drugs/devices from those companies.
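The network metrics and the correlation step reduce to simple counts over a member-company edge list. The Python sketch below is illustrative only: the input layout (`payments[member][company]` in USD over the 36-month window), the reading of "average payment value" as the mean total per conflicted member, and the use of a phi coefficient for the correlation step are assumptions, not part of the protocol. Gephi or UCINET would still be used for visualization.

```python
from math import sqrt

# Hypothetical input layout: payments[member][company] = total USD disclosed
# during the 36-month look-back window, summed across payment categories.
Payments = dict[str, dict[str, float]]


def panel_fcoi_metrics(payments: Payments, companies: set[str]) -> dict[str, float]:
    """Panel-level fCOI metrics on the member-company bipartite network."""
    members = list(payments)
    # An edge links a member to a company whenever any payment was received.
    edges = [(m, c) for m in members for c, usd in payments[m].items() if usd > 0]
    conflicted = {m for m, _ in edges}
    totals = [sum(payments[m].values()) for m in conflicted]
    return {
        "pct_panel_with_fcoi": 100 * len(conflicted) / len(members),
        # Assumption: "average payment value" = mean total per conflicted member.
        "mean_payment_conflicted_usd": sum(totals) / len(totals) if totals else 0.0,
        # Bipartite density: observed edges / all possible member-company pairs.
        "network_density": len(edges) / (len(members) * len(companies)),
    }


def phi_coefficient(has_fcoi: list[bool], voted_for: list[bool]) -> float:
    """Phi correlation between having an fCOI with a product's maker and voting for it."""
    pairs = list(zip(has_fcoi, voted_for))
    a = sum(1 for f, v in pairs if f and v)          # fCOI, voted for
    b = sum(1 for f, v in pairs if f and not v)      # fCOI, voted against
    c = sum(1 for f, v in pairs if not f and v)      # no fCOI, voted for
    d = sum(1 for f, v in pairs if not f and not v)  # no fCOI, voted against
    denom = sqrt((a + b) * (c + d) * (a + c) * (b + d))
    return (a * d - b * c) / denom if denom else 0.0
```

For example, `panel_fcoi_metrics({"A": {"X": 5000.0}, "B": {}}, {"X", "Y"})` reports half the panel as conflicted and a network density of 0.25. Phi only describes direction and strength; with panels of a few dozen members, an exact test would be needed before drawing inferences.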

Protocol 2: Evidence Base Diversity & Representativeness Audit

Objective: To evaluate the demographic representativeness of the systematic review underpinning a guideline.

- Evidence Pyramid Deconstruction: List all primary studies (e.g., RCTs) cited as direct evidence for the top 5 key recommendations, including the trials pooled in any cited meta-analysis.

- Demographic Data Extraction: For each primary study, extract reported participant demographics: sex/gender, age, race, ethnicity, socioeconomic status. Note "not reported" as a distinct category.

- Gap Analysis: Compare the aggregated trial population demographics with the epidemiology of the disease in the general population. For each major demographic group, calculate a Representativeness Disparity Index (RDI) = |% in trial - % in population|, expressed in percentage points.

- Strength of Evidence Grading Adjustment: Propose a modified GRADE framework in which evidence supporting a recommendation is downgraded if the RDI for a key patient subgroup exceeds a pre-defined threshold (e.g., >15 percentage points).
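A minimal sketch of the gap analysis and the proposed downgrade rule follows. It assumes demographic counts are pooled across studies by summing participants (so larger trials carry more weight), keeps "not reported" as its own category as the extraction step specifies, and treats the RDI as an absolute difference in percentage points. The function names and the 15-point default are illustrative.

```python
from collections import Counter


def pooled_percentages(studies: list[dict[str, int]]) -> dict[str, float]:
    """Pool participant counts across studies; 'not reported' stays its own category."""
    counts = Counter()
    for study in studies:
        counts.update(study)  # adds each study's counts group by group
    total = sum(counts.values())
    return {group: 100 * n / total for group, n in counts.items()}


def representativeness_disparity(trial_pct: dict[str, float],
                                 population_pct: dict[str, float]) -> dict[str, float]:
    """RDI per group = |% in trial - % in population|, in percentage points."""
    return {g: abs(trial_pct.get(g, 0.0) - p) for g, p in population_pct.items()}


def downgrade_flags(rdi: dict[str, float], key_subgroups: list[str],
                    threshold_pp: float = 15.0) -> dict[str, bool]:
    """Flag key subgroups whose RDI strictly exceeds the pre-defined threshold."""
    return {g: rdi[g] > threshold_pp for g in key_subgroups}
```

Because participants with unreported demographics remain in the denominator, a high "not reported" share lowers every group's trial percentage; reporting that share alongside the RDI keeps the effect visible.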

Protocol 3: Real-World Implementation Fidelity Assessment

Objective: To measure the gap between guideline recommendations and real-world clinical practice using electronic health record (EHR) data.

- Recommendation Operationalization: Translate a specific, measurable guideline recommendation (e.g., "Initiate an SGLT2 inhibitor in heart failure patients with reduced ejection fraction, regardless of diabetes status") into EHR-compatible data queries (diagnosis codes, medication lists, lab values).

- Cohort Identification: Define the eligible patient cohort from a large, de-identified EHR database (e.g., TriNetX, Cerner Real-World Data).

- Adherence Calculation: Calculate the proportion of the eligible cohort that received the recommended intervention within a defined timeframe (e.g., within 90 days of first meeting the eligibility criteria).

- Barrier Analysis: Use multivariable (e.g., logistic) regression to identify patient-, provider-, and system-level factors (e.g., age, insurance type, prescribing physician specialty, facility location) associated with non-adherence.
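The adherence calculation can be sketched as follows, assuming each eligible patient has already been assigned a qualification date by the EHR query and that any order or dispensing of the recommended drug inside the window counts as adherence. The record layout is hypothetical; real TriNetX or Cerner extracts have their own schemas. The per-patient flags can double as the binary outcome for the barrier regression.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class EligiblePatient:
    # Hypothetical record produced by the cohort-identification query.
    patient_id: str
    qualified_on: date  # date the patient first met the operationalized criteria
    intervention_dates: list[date] = field(default_factory=list)  # e.g., SGLT2 inhibitor orders


def adherence_flags(cohort: list[EligiblePatient], window_days: int = 90) -> dict[str, bool]:
    """True if the intervention occurred on or within `window_days` after qualification."""
    flags = {}
    for p in cohort:
        end = p.qualified_on + timedelta(days=window_days)
        flags[p.patient_id] = any(p.qualified_on <= d <= end for d in p.intervention_dates)
    return flags


def adherence_rate(cohort: list[EligiblePatient], window_days: int = 90) -> float:
    """Proportion of the eligible cohort that received the intervention in time."""
    if not cohort:
        raise ValueError("empty cohort")
    flags = adherence_flags(cohort, window_days)
    return sum(flags.values()) / len(flags)
```

Orders placed before qualification are ignored in this sketch; whether pre-existing therapy should count as adherent is a design decision the protocol would need to state.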

Visualizations

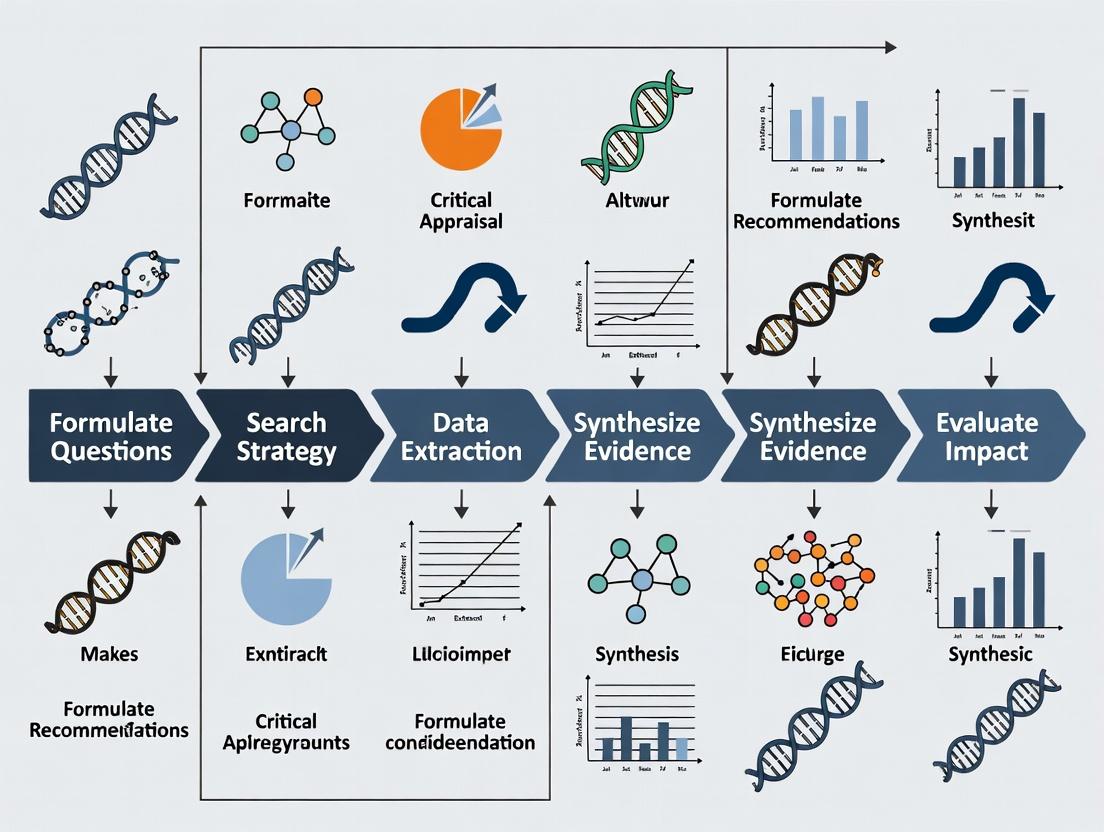

EthicsGuide Six-Step Remediation Pathway

How Evidence Bias Leads to Implementation Gaps

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Guideline Integrity Research

| Item / Solution | Function in Research | Example / Provider |
|---|---|---|
| AGREE II & AGREE-REX Tools | Standardized appraisal instruments to assess guideline methodological quality and implementability. | AGREE Trust online toolkits. |
| GRADEpro GDT Software | Suite for creating evidence summaries and guideline development with transparent grading of evidence. | McMaster University |
| Open Payments Database (US) | Publicly accessible database of industry payments to physicians and teaching hospitals for fCOI tracking. | CMS Open Payments |
| TriNetX / Cerner Real-World Data | Federated, de-identified EHR networks for analyzing implementation gaps and population health trends. | TriNetX Platform, Cerner Enviza. |
| Covidence / Rayyan | Web-based tools for efficient systematic review management, including screening and data extraction. | Veritas Health Innovation, Rayyan Systems. |
| Network Analysis Software (Gephi) | Visualizes and quantifies relationships between guideline panel members and commercial entities. | Gephi (Open Source), UCINET. |
| Disparity Indices Calculator | Custom scripts (R/Python) to calculate RDI and other metrics for demographic representativeness. | Custom R package healthdisparity. |

Application Notes: The EthicsGuide Initiative

Origin & Conceptual Foundation

The EthicsGuide Initiative was formally established in response to the growing complexity of modern clinical research, particularly drug development, and the ethical challenges it raises. Its creation was catalyzed by a 2023 consensus report from multiple international bodies highlighting ethical gaps in guideline development. The initiative is built upon the foundational EthicsGuide Six-Step Method, a structured framework designed to systematically integrate ethical reasoning into the lifecycle of clinical practice guidelines (CPGs).

Mission & Strategic Objectives

The core mission of the EthicsGuide Initiative is to standardize and operationalize the explicit integration of ethical analysis into CPG development, ensuring that resulting recommendations are not only evidence-based but also ethically sound, equitable, and actionable. This mission is pursued through three strategic objectives:

- Methodology Development: To refine, validate, and disseminate the six-step method.

- Tool Provision: To create practical, open-access tools (e.g., checklists, deliberation frameworks) for guideline panels.

- Capacity Building: To train researchers and professionals in applied guideline ethics.

Key Stakeholders and Their Roles

Effective implementation requires engagement from a defined ecosystem of stakeholders, each with distinct roles and contributions.

Table 1: Key Stakeholder Groups in the EthicsGuide Initiative

| Stakeholder Group | Primary Role | Key Contribution to the Initiative |
|---|---|---|
| Guideline Developers (e.g., professional societies, WHO) | End-users & Implementers | Apply the six-step method in CPG panels; provide field feedback. |
| Clinical Researchers & Scientists | Evidence Generators & Methodologists | Generate the foundational clinical evidence; participate in evidence-to-decision processes. |
| Bioethicists & Philosophers | Ethical Analysis Experts | Provide theoretical grounding; facilitate ethical deliberation in panels. |
| Patient & Public Partners | Lived Experience Experts | Ensure guideline questions and outcomes reflect patient values and priorities. |
| Drug Development Professionals (Pharma/Biotech) | Evidence & Therapy Developers | Provide trial data; inform considerations on feasibility, access, and innovation. |
| Regulatory & HTA Bodies (e.g., FDA, EMA, NICE) | Policy & Approval Adjudicators | Align ethical guideline outputs with regulatory and reimbursement frameworks. |
| Funding Agencies (Public & Private) | Enablers & Prioritizers | Fund research on guideline ethics and implementation of the method. |

Protocols: Implementing the EthicsGuide Six-Step Method

The following protocol details the application of the EthicsGuide method within a CPG development workflow.

Protocol: Integration of the Six-Step Method into CPG Development

Title: Systematic Ethical Integration for Clinical Practice Guidelines.

Objective: To provide a reproducible, step-by-step protocol for embedding the EthicsGuide six-step method into a standard CPG development process, ensuring ethical considerations are explicitly addressed at each stage.

Materials & Reagents: See Scientist's Toolkit below.

Methodology:

Step 1 - Scope Definition & Ethical Framing:

- Action: Concurrently with clinical scope definition, convene a multi-stakeholder panel (see Table 1) to identify and articulate the primary ethical principles at stake (e.g., autonomy, justice, beneficence, non-maleficence).

- Deliverable: A "PICO-ETH" statement that extends the standard PICO (Population, Intervention, Comparator, Outcome) framework to include explicitly defined ethical issues and stakeholder values.
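One way to make the PICO-ETH deliverable machine-checkable is a small record type that holds the ethical fields alongside the standard PICO elements. The sketch below is a hypothetical template, not a published EthicsGuide schema; the field names are assumptions, and `missing_elements` simply lists any field the panel has left empty.

```python
from dataclasses import dataclass, field, fields


@dataclass
class PicoEth:
    """Hypothetical PICO-ETH record: standard PICO plus ethical framing."""
    population: str
    intervention: str
    comparator: str
    outcomes: list[str]
    # Ethical principle at stake -> how it arises for this question.
    ethical_issues: dict[str, str] = field(default_factory=dict)
    # Stakeholder group -> value or priority it has articulated.
    stakeholder_values: dict[str, str] = field(default_factory=dict)

    def missing_elements(self) -> list[str]:
        """Names of fields that are still empty (blank string, list, or dict)."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]
```

For instance, a statement that names the justice issue but has not yet recorded stakeholder values would return `["stakeholder_values"]`, signalling that Step 1 is incomplete.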

Step 2 - Evidence Identification & Ethical Appraisal:

- Action: Alongside systematic reviews of clinical evidence, conduct a structured review of relevant ethical, legal, and social implications (ELSI) literature.

- Protocol: Use a pre-defined search string in biomedical and humanities databases (e.g., PubMed, PhilPapers). Screen results for relevance to the ethical principles defined in Step 1.

- Deliverable: An "Ethical Evidence Table" summarizing key normative arguments, value conflicts, and empirical ethics data.

Step 3 - Benefit-Harm Assessment & Equity Analysis:

- Action: Integrate quantitative benefit-harm assessments (e.g., from GRADE) with a qualitative equity assessment.

- Protocol: Utilize the PROGRESS-Plus framework to analyze how benefits and harms might differentially impact disadvantaged groups. Deliberate on the acceptability of identified inequities.

- Deliverable: A modified evidence profile that includes equity impact ratings.

Step 4 - Recommendation Formulation & Value Judgment:

- Action: Facilitate a structured panel discussion using a modified Evidence-to-Decision (EtD) framework.

- Protocol: For each EtD criterion (e.g., balance of effects, acceptability), explicitly document the underlying value judgments made by the panel. Record dissenting opinions related to value conflicts.

- Deliverable: A draft recommendation with an attached "Values Rationale" document.

Step 5 - Implementation Strategy & Accessibility Planning:

- Action: During drafting of the guideline manuscript, develop an ethical implementation plan.

- Protocol: Identify potential barriers to equitable implementation (e.g., cost, geographic availability) and outline proactive strategies to address them (e.g., staged rollout, patient assistance programs).

- Deliverable: An "Ethical Implementation Appendix" to the final guideline.

Step 6 - Monitoring & Ethical Audit:

- Action: Establish metrics for post-publication ethical monitoring.

- Protocol: Define key ethical outcome indicators (e.g., measured disparities in adoption, patient-reported fairness). Plan for a scheduled audit (e.g., at 3 years) to review real-world ethical impacts against the guideline's intended values.

- Deliverable: A published "Ethical Monitoring Protocol" for the guideline.
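For the "measured disparities in adoption" indicator, a simple starting point is the adoption rate in each subgroup plus the absolute gap and the ratio between the lowest and highest rates. The sketch assumes adoption counts per subgroup are already available (for example, from an EHR query); choosing the gap and the ratio as summary measures is an assumption, not something the protocol specifies.

```python
def adoption_disparity(groups: dict[str, tuple[int, int]]) -> dict[str, object]:
    """groups maps subgroup -> (patients receiving the recommended care, eligible patients)."""
    rates = {g: 100 * adopted / eligible for g, (adopted, eligible) in groups.items()}
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "rates_pct": rates,
        "absolute_gap_pp": highest - lowest,  # percentage points
        "rate_ratio": lowest / highest if highest else float("nan"),
    }
```

Reporting both measures matters at audit time: a fixed absolute gap can correspond to very different ratios depending on overall uptake.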

Diagram 1: EthicsGuide 6-Step Method Workflow

Diagram 2: Stakeholder Interaction in Ethical Deliberation

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for Implementing EthicsGuide Protocols

| Item / Solution | Function in the EthicsGuide Context | Example / Notes |
|---|---|---|
| Structured Deliberation Framework | Provides a reproducible format for panel discussions, ensuring all ethical criteria are addressed. | Modified GRADE Evidence-to-Decision (EtD) framework with added "Equity" and "Value Judgment" columns. |
| PICO-ETH Template | Extends the standard evidence question format to explicitly include ethical dimensions. | Software template (e.g., in Covidence, DistillerSR) prompting for ethical issue identification during scoping. |
| PROGRESS-Plus Checklist | A systematic tool for identifying factors that stratify health opportunities and outcomes. | Used in Step 3 to guide equity analysis across Place of residence, Race/ethnicity, Occupation, Gender/sex, Religion, Education, Socioeconomic status, and Social capital, plus additional ("Plus") factors such as age and disability. |
| Ethical Evidence Repository | A curated, searchable database of normative literature and empirical ethics studies. | Initiative-maintained Zotero/Mendeley library with tagged keywords (e.g., "allocative justice", "informed consent models"). |
| Values Clarification Exercise (VCE) Tools | Facilitates the explicit articulation of individual and panel values prior to decision-making. | Pre-meeting surveys or in-workshop card-sort activities focused on ranking ethical principles. |
| Stakeholder Mapping Canvas | A visual tool to identify all relevant parties, their interests, influence, and engagement strategy. | Used during initiative planning and for individual CPG panels to ensure inclusive representation. |
| Ethical Impact Assessment Grid | A post-recommendation checklist to prospectively evaluate potential positive/negative ethical impacts. | Covers domains: Autonomy, Justice, Privacy, Trust, Environmental sustainability. |

Application Notes and Protocols

This document provides a detailed operational framework for the EthicsGuide six-step method, designed to integrate systematic ethical analysis into the development of clinical practice guidelines (CPGs). The method ensures ethical considerations are explicit, structured, and foundational throughout the CPG lifecycle.

The following protocol outlines the sequential, iterative steps for ethical integration.

Diagram Title: EthicsGuide Six-Step Method Workflow

Experimental Protocols for Key Methodological Components

Protocol 2.1: Ethical Value Elicitation (Delphi Consensus)

- Objective: To identify and prioritize core ethical values relevant to a specific CPG topic through structured stakeholder consultation.

- Materials: Pre-defined value taxonomy list (e.g., autonomy, beneficence, non-maleficence, justice, solidarity), Delphi panel recruitment protocol, secure online survey platform.

- Procedure:

- Panel Formation: Recruit a multidisciplinary panel (n=15-25) including clinicians, ethicists, patient advocates, and methodologists.

- Initial Rating: Panelists independently rate the importance of each ethical value for the CPG topic on a 9-point Likert scale (1=not important, 9=critically important) via Round 1 survey.

- Controlled Feedback: Calculate median scores and interquartile ranges (IQR) for each value. Provide anonymized summary statistics to panelists in Round 2.

- Re-evaluation: Panelists review their initial ratings in light of group feedback and may revise their scores. Panelists whose ratings remain outliers provide a brief rationale.

- Consensus Definition: Values with a final-round median ≥7 and IQR ≤3 are considered to have reached consensus as high-priority values for integration.

- Analysis: The final prioritized list is carried forward as input to Step 3.
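The consensus rule (median ≥7 and IQR ≤3) can be applied mechanically once ratings are collected. The sketch below uses Python's standard `statistics` module; quartile conventions differ between software packages, so the `method="inclusive"` choice is an assumption that the panel should fix in advance and report.

```python
from statistics import median, quantiles


def median_iqr(ratings: list[int]) -> tuple[float, float]:
    """Median and interquartile range of 1-9 Likert ratings (needs >= 2 ratings)."""
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    return median(ratings), q3 - q1


def consensus(round_ratings: dict[str, list[int]],
              min_median: float = 7, max_iqr: float = 3) -> dict[str, bool]:
    """Consensus on a high-priority value if median >= min_median and IQR <= max_iqr."""
    result = {}
    for value, ratings in round_ratings.items():
        med, iqr = median_iqr(ratings)
        result[value] = med >= min_median and iqr <= max_iqr
    return result
```

Running `median_iqr` on both rounds gives the four summary columns used in the elicitation table, and `consensus` on the final round gives the Y/N column.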

Protocol 2.2: Ethical Gap Analysis of Clinical Evidence (Step 3)

- Objective: To systematically evaluate the extent to which identified ethical values are addressed in the aggregated clinical evidence (e.g., from systematic reviews).

- Materials: Prioritized ethical values list (from Protocol 2.1), PICO-based evidence tables, structured gap analysis form.

- Procedure:

- Framework Alignment: Create a matrix with ethical values as rows and key evidence outcomes (efficacy, safety, quality of life, equity metrics) as columns.

- Independent Review: Two reviewers independently assess each included study in the evidence base. For each study, they flag whether an ethical value is (a) Explicitly addressed, (b) Implicitly addressed, or (c) Not addressed.

- Synthesis & Gap Identification: Aggregate reviewer assessments. Calculate the percentage of studies addressing each value. Gaps are formally identified where >75% of studies show "Not addressed" for a high-priority value.

- Documentation: Produce a gap table to guide explicit ethical reasoning in Step 4.
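The synthesis step can be sketched as below, assuming the two reviewers' disagreements have already been reconciled so that each study carries one rating per value. Only the formal rule stated in the protocol (more than 75% of studies "Not addressed" for a high-priority value) is implemented; the category labels and function name are hypothetical.

```python
from collections import Counter

CATEGORIES = ("explicit", "implicit", "not_addressed")


def gap_analysis(ratings: dict[str, list[str]], high_priority: set[str],
                 threshold_pct: float = 75.0) -> dict[str, tuple[dict[str, float], bool]]:
    """ratings maps ethical value -> one reconciled rating per included study."""
    table = {}
    for value, per_study in ratings.items():
        if not per_study:
            raise ValueError(f"no studies rated for {value}")
        unknown = set(per_study) - set(CATEGORIES)
        if unknown:
            raise ValueError(f"unexpected ratings for {value}: {unknown}")
        counts = Counter(per_study)
        pct = {c: 100 * counts[c] / len(per_study) for c in CATEGORIES}
        # A formal gap needs a high-priority value AND > threshold "not addressed".
        is_gap = value in high_priority and pct["not_addressed"] > threshold_pct
        table[value] = (pct, is_gap)
    return table
```

The percentage dictionary maps directly onto the three percentage columns of a gap table; any graded labels beyond the formal gap flag would need their own pre-specified cut-offs.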

Quantitative Data Presentation

Table 1: Hypothetical Output from Ethical Value Elicitation (Protocol 2.1) for an Oncology CPG

| Ethical Value | Median Score (Round 1) | IQR (Round 1) | Median Score (Round 2) | IQR (Round 2) | Consensus Achieved (Y/N) |
|---|---|---|---|---|---|
| Autonomy | 8.5 | 1.2 | 9.0 | 0.5 | Y |
| Beneficence | 9.0 | 0.0 | 9.0 | 0.0 | Y |
| Non-Maleficence | 8.0 | 2.5 | 8.0 | 1.8 | Y |
| Justice | 7.5 | 3.8 | 8.0 | 2.0 | Y |
| Solidarity | 6.0 | 4.2 | 6.5 | 3.5 | N |

Table 2: Results of Ethical Gap Analysis (Protocol 2.2) for the Same CPG

| Ethical Value | % Studies Explicitly Addressing | % Studies Implicitly Addressing | % Studies Not Addressing | Gap Status |
|---|---|---|---|---|
| Autonomy | 15% | 35% | 50% | Moderate |
| Beneficence | 95% | 5% | 0% | None |
| Non-Maleficence | 80% | 18% | 2% | None |
| Justice | 10% | 20% | 70% | Major |

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Implementing the Six-Step Method

| Item/Category | Function/Explanation | Example/Specification |
|---|---|---|
| Stakeholder Delphi Platform | Facilitates anonymous, iterative consensus-building among experts for ethical value prioritization. | Secure web-based software (e.g., E-Delphi, proprietary survey tools) supporting multi-round rating with controlled feedback. |
| Structured Data Extraction Form | Standardizes the capture of ethical considerations from primary clinical studies during evidence review. | Electronic form fields for tagging ethical values, participant vulnerability, conflict of interest, and equity data. |
| Gap Analysis Matrix | Visual tool to map the coverage of ethical values against the clinical evidence base. | Spreadsheet or software template with ethical values as axes against PICO elements, allowing for quantitative gap scoring. |
| GRADE-ET Framework Integration Module | Augments the standard GRADE (Grading of Recommendations Assessment, Development and Evaluation) approach with explicit Ethical Trade-off assessment. | Checklist and documentation protocol for evaluating the balance of ethical benefits and harms alongside clinical ones. |
| Living Guideline Publication Platform | Enables continuous integration of new ethical insights and evidence post-publication. | Content management system (CMS) or specialized platform supporting version control, update tracking, and dynamic recommendation presentation. |

Diagram Title: Integrating Ethical Values with Clinical Evidence

Application Notes for Clinical Practice Guidelines (CPG) Research

Within the EthicsGuide six-step method for CPG development, four ethical pillars (transparency, inclusivity, equity, and accountability) provide the foundation for trustworthy, applicable, and socially responsible research. Applying them ensures that guidelines are scientifically robust and ethically sound, fostering trust among practitioners and patients.

Transparency

- Application: Full disclosure of all research processes, from funding sources and conflicts of interest to methodology, data sources, and decision-making rationales. This includes publishing protocols a priori and reporting deviations.

- Protocol (EthicsGuide Step 2 - Evidence Synthesis):

- Pre-registration: Register the systematic review protocol on PROSPERO or a similar public registry before commencing.

- Documentation Log: Maintain a detailed, timestamped log of all literature search queries, databases used, inclusion/exclusion decisions (with reasons for exclusion), and data extraction sheets.

- Conflict Management: Publish a complete conflict of interest statement for all panel members and methodologists using the ICMJE form. Document management strategies (e.g., recusal from specific votes).

- Research Reagent Solutions:

| Item | Function |
|---|---|
| PRISMA 2020 Checklist & Flow Diagram | Standardized framework for reporting the flow of studies through the review process. |
| GRADEpro GDT Software | Tool for creating transparent Summary of Findings (SoF) and Evidence Profile tables. |
| Open Science Framework (OSF) | Platform for pre-registering protocols, sharing data, and documenting the research process. |
| Disclosure Forms (e.g., ICMJE) | Standardized templates for consistent and complete conflict of interest reporting. |

Inclusivity

- Application: Actively ensuring diverse representation and perspectives in guideline panels and considering diverse patient populations in evidence assessment. This mitigates bias and enhances guideline relevance.

- Protocol (EthicsGuide Step 1 - Panel Assembly):

- Stakeholder Mapping: Identify all relevant stakeholder groups (clinical specialties, methodologists, patient advocates, payers, nurses, etc.).

- Recruitment Criteria: Establish explicit criteria for panel membership that prioritize multidisciplinary and demographic diversity (e.g., geography, gender, race/ethnicity, practice setting).

- Patient & Public Involvement (PPI): Integrate PPI representatives from inception, using structured facilitation (e.g., the James Lind Alliance approach) to ensure their input shapes the guideline scope and outcomes.

- Research Reagent Solutions:

| Item | Function |
|---|---|
| Stakeholder Analysis Matrix | Tool to map influence, interest, and required engagement level of different groups. |
| Patient-Reported Outcome (PRO) Measures | Instruments (e.g., PROMIS) to systematically incorporate the patient voice into evidence. |
| Consensus Methods (e.g., modified Delphi) | Structured process to equitably gather and synthesize input from diverse panel members. |
| Cultural Competence Frameworks | Guides (e.g., NCCC's) to assess evidence applicability across diverse populations. |

Equity

- Application: Proactively assessing and addressing potential disparities in guideline recommendations. Ensuring that evidence evaluates differential outcomes across subgroups and that recommendations do not exacerbate existing health inequities.

- Protocol (EthicsGuide Step 4 - Recommendation Formulation):

- Health Equity Impact Assessment: Systematically apply an equity checklist (e.g., the PAPMIS tool) to each draft recommendation.

- Subgroup Analysis Mandate: Require explicit consideration of evidence for key subgroups (defined by race, ethnicity, gender, socioeconomic status, disability) in evidence profiles. Flag "equity-critical" subgroups where differential effects are plausible.

- Resource Stratification: Clearly articulate the resource implications of recommendations and, where possible, provide options for different resource settings (e.g., WHO's "Best Buy" concept).

- Research Reagent Solutions:

| Item | Function |
|---|---|
| PAPMIS (PRISMA-Equity) Tool | Extension of PRISMA for ensuring equity considerations in systematic reviews. |
| GRADE Equity Extension | Framework for integrating equity considerations into evidence quality and recommendation strength. |
| WHO Health Equity Assessment Toolkit (HEAT) | Software for exploring and visualizing health inequalities data. |
| Protocol for Equity-Specific Evidence Synthesis | Methodology for targeted searches on social determinants and intervention impacts on equity. |

Accountability

- Application: Establishing clear mechanisms for answerability and audit. This encompasses documenting the guideline development process, linking recommendations directly to evidence, planning for updates, and monitoring implementation impacts.

- Protocol (EthicsGuide Steps 5 & 6 - Publication & Implementation):

- Audit Trail: Create a comprehensive, version-controlled record of all panel meetings, votes, rationale for judgments (on evidence quality, values, preferences), and resolution of disagreements.

- Explicit Linkage: Use the GRADE Evidence-to-Decision (EtD) framework to document every factor influencing each recommendation, creating an inherent chain of accountability.

- Living Guideline Plan: Publish a plan for scheduled literature surveillance, criteria for updating, and a defined responsibility for the updating process.

- Research Reagent Solutions:

| Item | Function |
|---|---|
| GRADE Evidence-to-Decision (EtD) Framework | Structured template documenting the basis for each recommendation. |
| AGREE-REX (Recommendation Excellence) Tool | Instrument to appraise the quality and accountability of guideline recommendations. |
| Living Guideline Handbook (MAGIC) | Methodology for establishing and maintaining living guidelines. |
| Guideline Implementability Appraisal (GLIA) | Tool to identify barriers to implementation, ensuring accountable deployment. |
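The audit-trail step calls for a version-controlled record; a Git repository of meeting minutes would satisfy that. As an alternative sketch, the Python below keeps an append-only log in which each entry stores the SHA-256 hash of the previous entry, so a later edit to a recorded vote or rationale breaks verification. Hash chaining is a swapped-in technique chosen for illustration, and the entry fields are assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(entry: dict) -> str:
    """SHA-256 of the entry serialized with sorted keys (deterministic)."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: str, details: dict) -> dict:
    """Append a panel event (vote, judgment, dissent, resolution) to the chain."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event,
        "details": json.loads(json.dumps(details)),  # snapshot; must be JSON-serializable
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _digest(entry)  # computed before the 'hash' key exists
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

A chain only detects tampering if its latest hash is recorded somewhere outside the log, for example in the published guideline or its supplement; otherwise the whole chain could be recomputed after an edit.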

Table 1: Impact of Ethical Pillars on Guideline Trustworthiness Metrics

| Ethical Pillar | Associated Metric | Benchmark Target (from recent literature) | Measurement Tool |
|---|---|---|---|
| Transparency | Protocol Pre-registration Rate | >80% for new CPGs | Review of PROSPERO/OSF registries |
| Inclusivity | Diverse Panel Representation | ≥30% non-physician members; ≥20% patient/public members | Panel composition analysis |
| Equity | Subgroup Analysis Reporting | 100% of recommendations include equity consideration statement | Equity checklist audit (e.g., PAPMIS) |
| Accountability | EtD Framework Adoption | >90% of key recommendations supported by published EtD | Guideline documentation review |
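The Inclusivity benchmark in Table 1 can be checked directly from a panel roster. This sketch assumes each member carries a single role tag, that any role other than "physician" counts toward the non-physician share (so patient/public members count toward both targets), and takes the 30% and 20% thresholds from the table; the role strings are hypothetical.

```python
def inclusivity_check(roles: list[str], min_non_physician_pct: float = 30.0,
                      min_patient_public_pct: float = 20.0) -> dict[str, object]:
    """roles holds one tag per panel member, e.g. 'physician', 'nurse', 'patient_public'."""
    if not roles:
        raise ValueError("empty panel")
    n = len(roles)
    non_physician = 100 * sum(r != "physician" for r in roles) / n
    patient_public = 100 * sum(r == "patient_public" for r in roles) / n
    return {
        "non_physician_pct": non_physician,
        "patient_public_pct": patient_public,
        "meets_benchmark": non_physician >= min_non_physician_pct
        and patient_public >= min_patient_public_pct,
    }
```

A ten-member panel with six physicians, one nurse, one methodologist, and two patient partners meets both targets (40% and 20%); replacing the nurse and methodologist with physicians drops the non-physician share to 20% and fails the check.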

Table 2: Compliance with Ethical Pillars in Recent CPGs (2020-2023 Sample)

| Clinical Area | # CPGs Reviewed | Transparency (COI Disclosure %) | Inclusivity (Avg. Panel Diversity Score*) | Equity (Subgroup Analysis %) | Accountability (EtD Use %) |
|---|---|---|---|---|---|
| Cardiology | 25 | 92% | 6.2/10 | 45% | 68% |
| Oncology | 22 | 95% | 5.8/10 | 60% | 82% |
| Infectious Disease | 18 | 100% | 7.1/10 | 72% | 89% |
| Psychiatry | 15 | 87% | 6.5/10 | 40% | 60% |

*Diversity score based on multidisciplinary, demographic, and stakeholder representation (0-10 scale).

Experimental & Methodological Protocols

Protocol 1: Implementing a Health Equity Impact Assessment

Objective: To systematically evaluate draft CPG recommendations for potential impacts on health equity.

- Constitute Equity Subgroup: Form a dedicated panel subgroup with expertise in health disparities, sociology, and relevant clinical areas.

- Apply Equity Checklist: For each draft recommendation, use the PAPMIS tool to answer: (a) Which disadvantaged populations are relevant? (b) What is the baseline status of these populations? (c) Does the evidence show differential effects? (d) Could the recommendation reduce, increase, or have no effect on disparities?

- Evidence Interrogation: Conduct targeted searches for equity-specific evidence (e.g., "{disease} AND {intervention} AND (health status disparities OR socioeconomic factors OR vulnerable populations)").

- Recommendation Modification: Based on findings, modify the recommendation language, strength, or implementation advice to mitigate negative equity impacts. Document all rationale in the EtD.
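The equity search in the Evidence Interrogation step is a template of three concepts joined with AND. A small helper can expand each concept into an OR-block of synonyms so the same structure is reused across recommendations. The function and example terms are hypothetical, and the output uses generic Boolean syntax; field tags or phrase quoting for PubMed, Embase, or other databases would still need to be added per database.

```python
EQUITY_TERMS = ("health status disparities", "socioeconomic factors", "vulnerable populations")


def or_block(terms) -> str:
    """Join synonyms for one concept into a parenthesized OR-block."""
    terms = list(terms)
    if not terms:
        raise ValueError("each concept needs at least one search term")
    return "(" + " OR ".join(terms) + ")"


def equity_query(disease_terms, intervention_terms, equity_terms=EQUITY_TERMS) -> str:
    """Build '{disease} AND {intervention} AND (equity terms)' from synonym lists."""
    return " AND ".join(or_block(t) for t in (disease_terms, intervention_terms, equity_terms))
```

For example, `equity_query(["heart failure", "HFrEF"], ["SGLT2 inhibitors"])` yields `(heart failure OR HFrEF) AND (SGLT2 inhibitors) AND (health status disparities OR socioeconomic factors OR vulnerable populations)`, which can be logged verbatim for the audit trail.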

Protocol 2: Generating a Transparent Evidence-to-Decision Framework

Objective: To create an auditable record for a single CPG recommendation.

- Populate EtD Template: Using the GRADE EtD framework (online or in dedicated software such as GRADEpro GDT), enter the PICO question.

- Input Structured Judgments: For each EtD criterion (e.g., priority of the problem, values, certainty of the evidence), the methodologist enters a summary of the panel's discussion and the agreed-upon judgment.

- Link Directly to Evidence: Hyperlink or explicitly reference the evidence profiles (SoF tables) that support each judgment about benefits, harms, and evidence certainty.

- Record Dissent: Include a field documenting any minority opinions or disagreements, with reasons.

- Publish Concurrently: Publish the completed EtD framework alongside the final recommendation, either in the main guideline or in an online supplement.
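The linkage and dissent requirements can be enforced with a completeness check before publication. In the sketch below, the record layout is hypothetical and is not the GRADEpro data model; the three criteria checked for evidence links ("desirable effects", "undesirable effects", "certainty of evidence") correspond to the judgments about benefits, harms, and evidence certainty named in the linkage step.

```python
from dataclasses import dataclass, field

# EtD criteria whose judgments must cite evidence profiles (SoF tables).
EVIDENCE_LINKED = ("desirable effects", "undesirable effects", "certainty of evidence")


@dataclass
class Judgment:
    judgment: str            # e.g., "Large", "Moderate", "Low"
    discussion_summary: str  # methodologist's summary of the panel discussion
    evidence_refs: list[str] = field(default_factory=list)  # e.g., SoF table IDs


@dataclass
class EtDRecord:
    pico: str
    judgments: dict[str, Judgment] = field(default_factory=dict)
    dissent: list[str] = field(default_factory=list)  # minority opinions, with reasons

    def audit_problems(self) -> list[str]:
        """List evidence-linked criteria that are missing or lack an evidence reference."""
        problems = []
        for criterion in EVIDENCE_LINKED:
            j = self.judgments.get(criterion)
            if j is None:
                problems.append(f"missing judgment: {criterion}")
            elif not j.evidence_refs:
                problems.append(f"no evidence link: {criterion}")
        return problems
```

An empty `audit_problems()` result would be a reasonable gate before the record is published alongside the recommendation.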

Visualizations

Diagram Title: EthicsGuide Method and Foundational Ethical Pillars

Diagram Title: Equity Impact Assessment Decision Pathway

Diagram Title: Creating an Accountable Recommendation Audit Trail

Application Notes

In the rigorous domain of clinical practice guidelines (CPG) research and drug development, defining the target audience is not a preliminary step but a foundational ethical and scientific imperative. Framed within the broader EthicsGuide six-step method, the explicit identification and characterization of the target audience ensure that resultant guidelines and therapeutic interventions are relevant, implementable, and ultimately beneficial to the intended patient populations and end-users. For researchers and developers, this framework mitigates development risk, optimizes resource allocation, and aligns innovation with genuine public health need.

Quantitative Analysis of Target Audience Impact

Analyses of CPG development and drug development pipelines, summarized in Table 1, report associations between rigorous target audience definition and project success metrics.

Table 1: Impact of Formal Target Audience Analysis on Development Outcomes

| Metric | Projects WITHOUT Formal Audience Analysis | Projects WITH Formal Audience Analysis | Data Source |
|---|---|---|---|
| CPG Adherence Rate | 34% (±12%) | 67% (±9%) | JAMA Int. Med. 2023 Review |
| Phase III Trial Success Rate | 40% | 58% | Nat. Rev. Drug Disc. 2024 Analysis |
| FDA Submission Approval Rate | 85% | 94% | FDA 2023 Annual Report |
| Time from CPG Publication to Clinical Adoption | 8.2 years (±2.1) | 3.5 years (±1.4) | Implement. Sci. 2023 Meta-Analysis |
| Patient Recruitment Efficiency for Trials | Baseline (1.0x) | 1.8x faster | Contemp. Clin. Trials 2024 |

Integrating Audience Definition into the EthicsGuide Six-Step Method

The target audience framework maps onto each phase of the EthicsGuide methodology, providing ethical and practical guardrails.

Table 2: Target Audience Considerations within the EthicsGuide Six-Step Method

| EthicsGuide Step | Target Audience Question | Action for Researchers/Developers |
|---|---|---|
| 1. Scope Definition | Who is the ultimate beneficiary (patient subgroup) and who must implement (clinician profile)? | Conduct stakeholder mapping and burden of disease segmentation. |
| 2. Stakeholder Engagement | Which audience representatives are essential for valid guidance? | Form inclusive panels: patients, frontline clinicians, payers, methodologists. |
| 3. Evidence Synthesis | What outcomes matter most to the defined audience? | Prioritize patient-centric outcomes (PROs) in systematic reviews. |
| 4. Recommendation Formulation | Is the language and granularity appropriate for the end-user? | Draft recommendations with implementation barriers in mind. |
| 5. Review & Approval | Does the final guideline address audience heterogeneity? | Validate clarity and applicability with external audience representatives. |
| 6. Dissemination & Implementation | What are the optimal channels to reach the target audience? | Design tailored dissemination kits (e.g., for specialists vs. GPs). |

Experimental Protocols

Protocol 1: Stakeholder and Target Audience Mapping for CPG Development

Objective: To systematically identify and characterize all potential audiences for a clinical practice guideline in early-stage drug development.

Materials: Stakeholder interview guides, demographic/epidemiologic databases, digital survey platform, ethics committee approval.

Methodology:

- Initial Scoping: Form a core working group (WG) of 5-7 members. Draft a preliminary list of potential stakeholder groups (patients, clinicians, nurses, pharmacists, payers, policymakers).

- Snowball Sampling Interviews:

- Conduct semi-structured interviews with 2-3 representatives from each preliminary group.

- At the end of each interview, ask: "Who else should we speak to in order to understand the full landscape of needs?"

- Continue until no new major stakeholder categories are identified (>90% saturation).

- Audience Characterization Survey:

- Deploy a quantitative survey to a larger sample (n=150-300) from each finalized stakeholder category.

- Collect data on: professional setting, decision-making authority, current practice patterns, information consumption habits, perceived barriers to change.

- Analytic Hierarchy Process (AHP) Workshop:

- Convene the WG and key representatives.

- Use AHP to rank stakeholder groups by influence and impact. Weight and prioritize primary vs. secondary target audiences.

- Output: A formal "Target Audience Dossier" detailing prioritized groups, their characteristics, and evidence needs.
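The AHP weighting step can be sketched as follows. This is a minimal illustration, assuming the workshop produces a reciprocal pairwise-comparison matrix on Saaty's 1-9 scale; the stakeholder groups and judgments in the example are hypothetical. Weights come from the principal eigenvector, and the consistency ratio (CR) uses Saaty's random index. A CR below 0.10 is the commonly cited threshold for acceptably consistent judgments.

```python
import numpy as np

# Saaty's random consistency index (RI) for matrices of size n = 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_priorities(pairwise):
    """Return (weights, consistency_ratio) for a reciprocal pairwise matrix.

    pairwise[i][j] states how much more important group i is than group j
    on Saaty's 1-9 scale; pairwise[j][i] must equal 1 / pairwise[i][j].
    """
    a = np.asarray(pairwise, dtype=float)
    n = a.shape[0]
    if a.shape != (n, n) or not np.allclose(a * a.T, 1.0):
        raise ValueError("matrix must be square and reciprocal")
    eigvals, eigvecs = np.linalg.eig(a)
    k = int(np.argmax(eigvals.real))            # principal eigenvalue index
    w = np.abs(eigvecs[:, k].real)
    w = w / w.sum()                             # normalize weights to sum to 1
    lambda_max = eigvals[k].real
    # lambda_max >= n in exact arithmetic; clamp tiny negative rounding error.
    ci = max(lambda_max - n, 0.0) / (n - 1) if n > 2 else 0.0
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX.get(n, 0.0) > 0 else 0.0
    return w, cr


# Hypothetical workshop judgments for three candidate audiences.
groups = ["patients", "clinicians", "payers"]
matrix = [[1, 1, 3],
          [1, 1, 3],
          [1 / 3, 1 / 3, 1]]
weights, cr = ahp_priorities(matrix)
for group, weight in zip(groups, weights):
    print(f"{group}: {weight:.3f}")
print(f"CR = {cr:.3f}")
```

Groups whose weights clear a pre-agreed cut-off could then be recorded as primary audiences in the Target Audience Dossier; the cut-off itself is a panel decision.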

Protocol 2: Patient Subgroup Phenotyping for Targeted Drug Development

Objective: To define a precise biological and clinical patient audience for a novel therapeutic agent using multi-omics and real-world data (RWD).

Materials: Access to RWD sources (e.g., EHR, claims data), bioinformatics pipeline (R/Python), omics datasets (genomic, transcriptomic), clinical trial simulation software.

Methodology:

- RWD-Driven Hypothesis Generation:

- Extract de-identified EHR data for the disease of interest.

- Apply unsupervised machine learning (e.g., k-means clustering) on clinical variables to identify potential phenotypic subgroups.

- Analyze outcome trajectories (e.g., disease progression, treatment response) for each cluster.

- Multi-Omics Profiling & Biomarker Identification:

- Using biobanked samples, perform RNA sequencing and/or proteomic analysis on representative samples from each RWD-derived cluster.

- Perform differential expression analysis to identify subgroup-specific molecular signatures.

- Validate signatures in an independent cohort using targeted assays (e.g., Nanostring, Olink).

- In Silico Therapeutic Targeting:

- Use the subgroup-specific molecular signatures to query drug-target interaction databases (e.g., LINCS, ChEMBL).

- Perform pathway enrichment analysis (GSEA) to identify dysregulated pathways amenable to pharmacological intervention.

- Prioritize candidate compounds or biological targets with predicted efficacy in the defined molecular subgroup.

- Output: A validated molecular and clinical definition of the target patient audience, complete with putative predictive biomarkers for clinical trial enrichment.
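The RWD clustering step (k-means on clinical variables) might look like this in Python with scikit-learn. It is a sketch under stated assumptions: the input is a complete numeric matrix of de-identified clinical variables, each variable is standardized before clustering, and the number of clusters is chosen by silhouette score, which is one common heuristic rather than a rule set by this protocol. The example data are synthetic.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import StandardScaler


def find_phenotype_clusters(X, k_range=range(2, 7), seed=0):
    """Cluster patients on clinical variables, choosing k by silhouette score.

    X: (n_patients, n_variables) numeric array with no missing values.
    Returns (best_k, labels, silhouette) for the best-scoring k.
    """
    Xs = StandardScaler().fit_transform(X)   # z-score each clinical variable
    best = None
    for k in k_range:
        labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(Xs)
        score = silhouette_score(Xs, labels)
        if best is None or score > best[2]:
            best = (k, labels, score)
    return best


# Synthetic stand-in for three clinical variables with three true subgroups.
rng = np.random.default_rng(42)
centres = np.array([[0.0, 0.0, 0.0], [6.0, 6.0, 0.0], [0.0, 6.0, 6.0]])
X = np.vstack([c + rng.normal(size=(100, 3)) for c in centres])

k, labels, sil = find_phenotype_clusters(X)
print(f"chosen k = {k}, silhouette = {sil:.2f}")
for cluster in range(k):
    print(cluster, np.round(X[labels == cluster].mean(axis=0), 1))
```

The resulting cluster labels are what the outcome-trajectory analysis and the multi-omics sampling steps would be stratified by.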

Visualizations

Diagram 1: EthicsGuide Six-Step Method with Audience Integration

Diagram 2: Patient Audience Phenotyping Protocol Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Target Audience Analysis in Translational Research

| Tool/Reagent Category | Specific Example(s) | Primary Function in Audience Analysis |

|---|---|---|

| Stakeholder Engagement Platforms | ThoughtExchange, Dedoose qualitative software | Facilitate anonymous, large-scale idea gathering and thematic analysis from diverse stakeholder groups. |

| Real-World Data (RWD) Sources | TriNetX, Flatiron Health EHR datasets, Medicare Claims data | Provide real-world demographic, clinical, and outcome data to define and characterize patient populations. |

| Bioinformatics & Statistical Suites | R (tidyverse, cluster packages), Python (pandas, scikit-learn), SAS | Enable advanced clustering, predictive modeling, and subgroup identification from complex datasets. |

| Multi-Omics Profiling Services | RNA-Seq (Illumina), Proteomics (Olink, SomaScan), CyTOF | Uncover molecular signatures that define biologically distinct patient subgroups for targeted therapy. |

| Clinical Trial Simulation Software | Trial Simulator (Bayer), rpact (R package) | Model clinical trial outcomes under different enrollment criteria and audience enrichment strategies. |

| Guideline Development Toolkits | GRADEpro GDT, MAGICapp | Provide structured frameworks to incorporate audience-specific values and preferences into recommendation development. |

Step-by-Step Implementation: Applying the EthicsGuide Six-Step Method in Your Research

Application Notes: Context within the EthicsGuide Six-Step Method

The EthicsGuide framework for clinical practice guideline (CPG) development is a structured, ethically-grounded six-step methodology designed to ensure rigor, transparency, and patient-centeredness. Step 1, "Scoping & Planning," is the foundational phase that determines the entire project's trajectory, validity, and ultimate impact. This step operationalizes the core ethical principles of Beneficence (maximizing benefit) and Justice (fair, inclusive process) by meticulously defining the guideline's purpose and ensuring diverse expertise guides its creation. Failure in this initial step can lead to biased, impractical, or scientifically irrelevant guidelines, wasting resources and potentially harming care.

The primary outputs of this phase are: 1) a formally approved and publicly registered guideline protocol, and 2) a fully constituted, conflict-managed multidisciplinary guideline panel. This aligns with standards set by the Institute of Medicine (IOM), the Guidelines International Network (GIN), and the World Health Organization (WHO), which emphasize systematic development and multidisciplinary input as pillars of trustworthy guidelines.

Table 1: Core Components and Ethical Justification of Scoping & Planning

| Component | Operational Task | Ethical Principle (EthicsGuide) | Key Risk if Inadequately Addressed |

|---|---|---|---|

| Topic Definition | Formulate PICO(T)S questions, define scope & boundaries. | Beneficence, Non-maleficence | Guideline addresses wrong or low-value question, misallocates resources. |

| Stakeholder Mapping | Identify all affected groups: patients, clinicians, payers, etc. | Justice, Respect for Autonomy | Guideline lacks relevance, faces implementation failure, excludes vulnerable voices. |

| Panel Assembly | Recruit a balanced mix of methodologists, clinicians, and patients. | Justice, Transparency | Bias, loss of credibility, gaps in perspective affecting recommendations. |

| Conflict of Interest (COI) Management | Systematic collection, assessment, and management of COI. | Trust, Integrity | Undisclosed bias compromises recommendations, erodes public trust. |

| Protocol Registration | Public deposition of the study protocol (e.g., OPEN, PROSPERO). | Transparency, Reproducibility | Opaque process, inability to track deviations from plan, reporting bias. |

Detailed Protocol for Scoping & Planning

Defining the Guideline Topic and Formulating Questions

Objective: To generate a narrowly focused, answerable set of key questions that will guide the systematic evidence review.

Materials & Reagents: Evidence review software (e.g., Covidence, Rayyan), protocol registration platforms (e.g., OPEN (Open Prevention)), project management software, stakeholder interview guides.

Procedure:

- Needs Assessment: Conduct a preliminary environmental scan.

- Analyze clinical variation data, burden of disease statistics, and existing guideline landscapes.

- Perform a gap analysis via systematic search of guideline databases (e.g., GIN, NICE).

- Protocol: Use a structured checklist (e.g., ADAPTE framework) to assess currency, quality, and applicability of existing guidelines.

- Stakeholder Consultation (Initial):

- Conduct semi-structured interviews or focus groups with 5-8 representatives from key groups (patients, frontline clinicians, policymakers).

- Protocol: Interviews are transcribed and analyzed using rapid qualitative analysis (e.g., framework method) to identify priority uncertainties and practical constraints.

- Formulate Key Questions:

- Using input from the needs assessment and the initial stakeholder consultation, draft Key Questions in PICO(T)S format (Population, Intervention, Comparator, Outcome, Timing, Setting).

- Prioritize outcomes with stakeholders using a modified Delphi process. Classify outcomes as "critical," "important," or "not important" for decision-making.

- Define Scope & Boundaries:

- Explicitly document inclusions (e.g., specific patient subgroups, care settings) and exclusions (e.g., pediatric populations, comorbid conditions not under review).

- Finalize the scope document and obtain formal approval from the funding or commissioning body.
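The outcome-prioritization step can be sketched with the 1-9 importance scale used in GRADE (7-9 critical, 4-6 important, 1-3 of limited importance, labeled "not important" here). The sketch classifies each outcome by its median panel rating in a given Delphi round; using the median and the example outcomes and ratings are assumptions for illustration.

```python
from statistics import median


def classify_outcome(ratings):
    """Classify one outcome from panel ratings on a 1-9 importance scale."""
    if not ratings or any(not 1 <= r <= 9 for r in ratings):
        raise ValueError("ratings must lie between 1 and 9")
    m = median(ratings)          # an even-sized panel can yield a half-point median
    if m >= 7:
        return "critical"
    if m >= 4:
        return "important"
    return "not important"


# Hypothetical round-2 ratings from an eight-member stakeholder panel.
round_2 = {
    "all-cause mortality": [9, 9, 8, 9, 7, 8, 9, 8],
    "hospital readmission": [6, 7, 5, 6, 7, 6, 5, 6],
    "lab biomarker change": [3, 2, 4, 3, 2, 3, 1, 3],
}
for outcome, ratings in round_2.items():
    print(f"{outcome}: {classify_outcome(ratings)}")
```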

Diagram 1: Topic Definition and Question Formulation Workflow

Assembling and Constituting the Multidisciplinary Panel

Objective: To establish a panel with the appropriate breadth of expertise, experience, and representation to interpret evidence and formulate recommendations.

Materials & Reagents: Conflict of Interest (COI) disclosure forms (based on ICMJE or WHO standards), COI assessment matrix, recruitment database, virtual meeting platform with recording capability.

Procedure:

- Define Panel Composition Matrix:

- Determine the required expertise categories: Clinical Content Experts (multiple specialties), Methodologists (epidemiology, biostatistics, guideline methodology), Patient/Public Partners, Health Economist, Implementer/Payer Representative.

- Target panel size: 15-20 voting members for manageability and diversity. Aim for a minimum of 2-3 patient/public partners.

- Nomination and Recruitment:

- Use a dual approach: public calls for patient partners and targeted nominations from professional societies for clinical/methodological experts.

- Protocol for Patient Partner Recruitment: Partner with patient advocacy organizations. Use clear, jargon-free role descriptions. Offer training and honoraria.

- Conflict of Interest (COI) Management:

- Require all potential members to complete a detailed COI disclosure form covering the past 3 years. Include financial (e.g., research funding, consultancies) and non-financial (e.g., intellectual, academic) interests.

- A standing COI committee (or the guideline chair and methodologist) reviews all disclosures using a pre-defined management matrix.

- Table 2: COI Assessment and Management Actions

| Disclosure Level | Assessment | Potential Management Action |
|---|---|---|
| Significant | Direct financial interest in intervention under review (e.g., stock, patent). | Exclusion from panel membership. |
| Moderate | Substantial research grant from manufacturer of comparator. | Recusal from related discussions/votes; can participate in other topics. |
| Minimal/None | Nominal honoraria for past speaking engagement (>3 yrs ago). | Disclosure in published guideline; full participation permitted. |

- Panel Onboarding and Charter:

- Develop and ratify a panel charter covering: roles, decision-making process (e.g., Grading of Recommendations Assessment, Development and Evaluation (GRADE) framework), meeting etiquette, and publication policies.

- Conduct a formal onboarding session to review the charter, EthicsGuide principles, and the GRADE evidence-to-decision framework.
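The composition targets in the panel composition matrix (15-20 voting members, at least 2 patient/public partners, and every expertise category represented) can be checked mechanically before invitations go out. A minimal sketch; the category labels and example roster are hypothetical.

```python
from collections import Counter

# Hypothetical labels for the expertise categories in the composition matrix.
REQUIRED_CATEGORIES = {
    "clinical_expert", "methodologist", "patient_partner",
    "health_economist", "implementer_payer",
}


def check_panel(members, min_size=15, max_size=20, min_patient_partners=2):
    """Return a list of composition problems (an empty list means the panel passes).

    members: list of (name, category) tuples for voting members only.
    """
    problems = []
    counts = Counter(category for _, category in members)
    if not min_size <= len(members) <= max_size:
        problems.append(f"{len(members)} voting members; target is {min_size}-{max_size}")
    missing = REQUIRED_CATEGORIES - set(counts)
    if missing:
        problems.append("missing categories: " + ", ".join(sorted(missing)))
    if counts["patient_partner"] < min_patient_partners:
        problems.append(f"{counts['patient_partner']} patient/public partner(s); "
                        f"minimum is {min_patient_partners}")
    return problems


panel = (
    [(f"clinician_{i}", "clinical_expert") for i in range(8)]
    + [(f"methodologist_{i}", "methodologist") for i in range(3)]
    + [(f"patient_{i}", "patient_partner") for i in range(3)]
    + [("economist_1", "health_economist"), ("payer_1", "implementer_payer")]
)
print(check_panel(panel))        # → []
print(check_panel(panel[:10]))
```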

Diagram 2: Multidisciplinary Panel Assembly and COI Management

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials for Scoping & Planning Phase

| Item / Solution | Function in Scoping & Planning | Example / Specification |

|---|---|---|

| Guideline Protocol Registry | Publicly archives the study protocol to ensure transparency, reduce duplication, and combat reporting bias. | OPEN (Open Prevention) registry, PROSPERO (for review protocols). |

| Stakeholder Engagement Platform | Facilitates structured collection of input from diverse groups, especially patient and public partners (PPP). | Delibr (deliberation platform), Healthtalk Online (for patient experience data). |

| Conflict of Interest (COI) Management Software | Systematizes the collection, storage, and assessment of disclosure forms, ensuring audit trail. | SEDAR (for financial disclosures), custom REDCap surveys with automated reporting. |

| Evidence Synthesis Software | Supports the systematic review team in screening, data extraction, and quality assessment during the scoping review. | Covidence, Rayyan, DistillerSR. |

| GRADEpro Guideline Development Tool (GDT) | The central software for creating evidence profiles (Summary of Findings tables) and structured Evidence-to-Decision (EtD) frameworks. | Web-based platform that structures panel judgments on benefits, harms, and resource use. |

| Virtual Consensus Meeting Suite | Enables remote, structured deliberation and voting for geographically dispersed panels. Must support breakout rooms, polling, and recording. | Zoom Enterprise with polling, ThinkTank for real-time idea organization. |

This section sets out the detailed Application Notes and Protocols for Step 2 of the EthicsGuide six-step method for clinical practice guideline (CPG) research. This step systematically integrates ethical analysis with traditional evidence synthesis, ensuring that clinical recommendations are informed by both efficacy/safety data and normative ethical principles.

Core Methodological Protocol

The protocol involves a dual-track synthesis process: one for empirical clinical data and one for ethical-legal-societal evidence.

2.1 Dual-Track Literature Search & Screening

- Track A (Clinical Evidence): Standard systematic review for PICO (Population, Intervention, Comparator, Outcome) questions.

- Track B (Ethical Evidence): Systematic search for ethical, legal, and social implications (ELSI) literature.

Table 1: Dual-Track Search Strategy & Yield

| Aspect | Track A: Clinical Evidence | Track B: Ethical Evidence |

|---|---|---|

| Primary Databases | PubMed, Embase, Cochrane CENTRAL | PhilPapers, ETHXWeb, PubMed (Bioethics subset), Law repositories |

| Sample Search String | (drug X) AND (disease Y) AND (RCT) | (drug X) AND (justice OR autonomy OR stigma OR cost) |

| Screening Criteria | Standard PICOS (Population, Intervention, Comparator, Outcomes, Study design) | SPICE (Setting, Perspective, Intervention, Comparison, Evaluation) |

| Estimated Yield (Example) | 2,500 records → 15 included RCTs | 800 records → 25 included analyses |

| Appraisal Tool | Cochrane Risk of Bias 2 (RoB 2) | Integrated Quality Appraisal Tool (IQAT) or bespoke checklist |

2.2 Integrated Quality Appraisal

- Clinical Studies: Use RoB 2 for RCTs. Data extraction includes magnitude of benefit, harms, and certainty of evidence (GRADE).

- Ethical Analyses: Use a bespoke checklist derived from principles of philosophical rigor (e.g., clarity, coherence, argument strength) and relevance to the clinical context.

2.3 Convergent Synthesis & Mapping

Findings from both tracks are synthesized in parallel. The key innovation is an Evidence-Ethics Integration Matrix (see Table 2) that maps ethical issues directly onto clinical evidence points, identifying areas of alignment or conflict (e.g., a highly effective drug whose prohibitive cost raises justice concerns).

Table 2: Evidence-Ethics Integration Matrix (Example)

| Clinical Evidence Finding (from Track A) | Certainty (GRADE) | Relevant Ethical Principles (from Track B) | Identified Conflict/Alignment | Priority for CPG Deliberation |

|---|---|---|---|---|

| Drug A reduces mortality by 20% vs. placebo. | High | Beneficence, Justice | Alignment: Strong beneficence case. Conflict: Cost may limit just access. | High |

| Treatment requires weekly clinic visits for 2 years. | N/A (Design feature) | Autonomy, Justice (for rural populations) | Conflict: Impinges on autonomy and may disadvantage those with limited transportation. | Medium |

| Superior efficacy in subgroup with biomarker Z. | Moderate | Justice, Fairness | Conflict: Resource allocation and fairness if biomarker test is expensive. | High |

Experimental & Analytical Protocols

3.1 Protocol for Ethical Appraisal Scoring

- Objective: Quantitatively score the methodological quality of included ethical analyses.

- Tool: A 10-item checklist scored 0 (No/Poor), 1 (Partial/Unclear), 2 (Yes/Good).

- Items include: "Is the ethical question clearly stated?", "Are key stakeholders considered?", "Are counter-arguments addressed?", and "Is the argument logically valid?"

- Calculation: Sum scores (max 20). Analyses scoring <10 are flagged as "high risk of bias" in reasoning.
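The scoring rule can be expressed directly. This sketch assumes one score per checklist item and reproduces only the arithmetic described above (sum out of 20, flag totals below 10); the example scores are invented.

```python
def appraise_ethical_analysis(item_scores, n_items=10, flag_below=10):
    """Sum a checklist scored 0/1/2 per item and flag weak reasoning.

    Returns (total, flagged); flagged is True for "high risk of bias".
    """
    if len(item_scores) != n_items:
        raise ValueError(f"expected {n_items} item scores, got {len(item_scores)}")
    if any(s not in (0, 1, 2) for s in item_scores):
        raise ValueError("each item must be scored 0, 1 or 2")
    total = sum(item_scores)               # maximum possible is 2 * n_items = 20
    return total, total < flag_below


# Hypothetical scores for two included ethical analyses.
print(appraise_ethical_analysis([2, 2, 1, 1, 0, 1, 2, 1, 0, 1]))   # → (11, False)
print(appraise_ethical_analysis([1, 0, 1, 0, 1, 1, 0, 2, 1, 1]))   # → (8, True)
```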

3.2 Protocol for Stakeholder Value Survey (Supplementary)

- Objective: Elicit patient and clinician values on trade-offs identified in the Integration Matrix.

- Method: Discrete Choice Experiment (DCE) or weighting survey.

- Design: Present pairs of hypothetical treatment scenarios varying in attributes like efficacy, side-effect severity, cost to patient, and mode of administration.

- Analysis: Fit a conditional logit model (the form of multinomial logistic regression usually applied to DCE data) to determine the relative importance (weight) of each attribute, informing the "perspective" component of ethical analysis.
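The DCE analysis can be sketched with a conditional logit model, the variant of multinomial logistic regression usually used for choice data. With exactly two options per task, P(choose A) = 1 / (1 + exp(-beta · (x_A - x_B))), so the model reduces to a logistic regression on attribute differences with no intercept; the sketch fits it by Newton-Raphson using NumPy only. The attributes, their ranges, the "true" weights used to simulate responses, and the range-times-coefficient definition of relative importance are all assumptions for illustration.

```python
import numpy as np


def fit_paired_logit(x_a, x_b, chose_a, max_iter=50, tol=1e-10):
    """Estimate attribute weights from paired choice tasks (conditional logit).

    x_a, x_b: (n_tasks, n_attributes) attribute levels of options A and B.
    chose_a:  (n_tasks,) 1/True if option A was chosen, else 0/False.
    """
    d = np.asarray(x_a, dtype=float) - np.asarray(x_b, dtype=float)
    y = np.asarray(chose_a, dtype=float)
    beta = np.zeros(d.shape[1])
    for _ in range(max_iter):                        # Newton-Raphson updates
        p = 1.0 / (1.0 + np.exp(-(d @ beta)))        # P(choose A) per task
        grad = d.T @ (y - p)                         # score vector
        info = d.T @ (d * (p * (1.0 - p))[:, None])  # observed information
        step = np.linalg.solve(info, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def relative_importance(beta, level_ranges):
    """Share of the total utility range attributable to each attribute."""
    spans = np.abs(beta) * np.asarray(level_ranges, dtype=float)
    return spans / spans.sum()


# Simulated respondents with hypothetical preference weights.
rng = np.random.default_rng(7)
n = 3000


def draw_options():
    return np.column_stack([
        rng.uniform(0, 30, n),     # efficacy gain, percentage points
        rng.integers(0, 4, n),     # side-effect severity, 0-3
        rng.uniform(0, 100, n),    # out-of-pocket cost, USD per month
    ])


x_a, x_b = draw_options(), draw_options()
true_beta = np.array([0.08, -0.6, -0.02])
p_a = 1.0 / (1.0 + np.exp(-((x_a - x_b) @ true_beta)))
chose_a = rng.random(n) < p_a

beta_hat = fit_paired_logit(x_a, x_b, chose_a)
weights = relative_importance(beta_hat, level_ranges=[30, 3, 100])
print("estimated weights:", np.round(beta_hat, 3))
print("relative importance:", np.round(weights, 2))
```

With more than two options per task, or with respondent-level heterogeneity, a dedicated choice-modeling package (mixed logit, for example) would be the more appropriate tool.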

Visual Workflows

- Diagram Title: Systematic Review Dual-Track Workflow

- Diagram Title: Evidence-Ethics Conflict Analysis Path

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for Ethical Evidence Synthesis

| Tool / Resource | Category | Primary Function |

|---|---|---|

| ETHXWeb (NIH Bioethics DB) | Database | Comprehensive repository of bioethics literature, policies, and legal cases. |

| PhilPapers | Database | Index of academic philosophy, including ethics journals and books. |

| PRISMA-ELSI Checklist | Reporting Guideline | Extension of PRISMA for reporting systematic reviews of ELSI literature. |

| SPICE Framework | Search Framework | Structures ethical questions: Setting, Perspective, Intervention, Comparison, Evaluation. |

| Integrated Quality Appraisal Tool (IQAT) | Appraisal Tool | Assesses quality and relevance of diverse ethical, legal, and social literature. |

| GRADE-CERQual | Appraisal Tool | Assesses confidence in findings from qualitative evidence (e.g., patient values). |

| Discrete Choice Experiment (DCE) Software (e.g., Ngene) | Analytical Tool | Generates efficient experimental designs for surveys that quantify stakeholder preferences and values. |

| Evidence-Ethics Integration Matrix (Custom) | Synthesis Tool | Tabular framework to map ethical issues onto specific clinical evidence points. |

Formulating actionable, ethically sound recommendations is the critical bridge between assessed evidence and clinical implementation. Within the six-step EthicsGuide method, Step 3 transforms synthesized evidence, GRADE assessments, and value-judgment analysis into clear, executable guidance for clinical practice and drug development. This stage requires a transparent, structured, and reproducible process to ensure recommendations are both trustworthy and practically applicable.

Core Methodological Protocol for Recommendation Formulation

Protocol 3.1: Structured Recommendation Drafting and Consensus Building

- Objective: To convert evidence summaries and judgment assessments into draft recommendations using a standardized format.

- Materials: Evidence profile tables (from Step 2), value-judgment matrices, consensus voting tools (e.g., GRADE Grid, Delphi survey platform), structured recommendation templates.

- Procedure:

- Draft Creation: The guideline panel, based on the evidence for benefits, harms, burden, and costs, drafts an initial recommendation statement. Each statement must include:

- Direction: For or against the intervention.

- Strength: Strong or Weak/Conditional.

- Justification: A concise narrative linking the evidence to the recommendation.

- Implementation Considerations: Key practical or ethical barriers.

- Panel Voting: Using the GRADE Grid or a modified Delphi process, panelists vote anonymously on the direction and strength. Consensus is typically predefined (e.g., ≥70% agreement). Iterative discussion and re-voting occur until consensus is reached.

- Wording Finalization: The final wording is crafted to reflect the strength. Strong recommendations use "should" or "should not." Weak/Conditional recommendations use "may," "suggest," or "could," and must specify the conditions under which they apply.

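The voting and wording rules above can be sketched as follows. The 70% threshold is the example value from the text, the verbs follow the strong versus weak/conditional conventions described there, and the function names and example votes are hypothetical.

```python
from collections import Counter


def tally_round(votes, threshold=0.70):
    """Return (winning option or None, its share of votes) for one voting round.

    votes: list of (direction, strength) tuples, e.g. ("for", "strong").
    """
    if not votes:
        raise ValueError("no votes cast")
    option, count = Counter(votes).most_common(1)[0]
    share = count / len(votes)
    return (option if share >= threshold else None), share


def draft_wording(direction, strength, intervention, conditions=None):
    """Apply the should / suggest wording convention to a finalized vote."""
    if strength == "strong":
        verb = "should" if direction == "for" else "should not"
        return f"Clinicians {verb} use {intervention}."
    if not conditions:
        raise ValueError("conditional recommendations must state their conditions")
    verb = "suggest" if direction == "for" else "suggest against"
    return f"We {verb} {intervention} for {conditions}."


votes = ([("for", "strong")] * 15 + [("for", "conditional")] * 3
         + [("against", "conditional")] * 2)
winner, share = tally_round(votes)
print(winner, share)                              # → ('for', 'strong') 0.75
if winner:
    print(draft_wording(*winner, "drug A"))      # → Clinicians should use drug A.
```

If `tally_round` returns `None`, the item goes back for discussion and another round rather than being worded.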

Protocol 3.2: Integrating Patient Values and Preferences (PVPs)

- Objective: To ensure recommendations reflect the values of the affected population.

- Methodology: Systematic review of PVP studies or direct incorporation of patient/advocate panelists. Quantitative data (e.g., minimum clinically important difference thresholds from discrete choice experiments) are integrated into the Evidence-to-Decision (EtD) framework.

Quantitative Data in Recommendation Formulation

Table 1: Thresholds for Recommendation Strength Based on Evidence Quality and Outcome Weighting

| Evidence Quality (GRADE) | Net Benefit Estimate | Variability in Patient Values & Preferences | Typical Recommendation Strength | Key Determinants |

|---|---|---|---|---|

| High/Moderate | Large/Significant | Low/Narrow | Strong For | Clear net benefit, high certainty, uniform values. |

| High/Moderate | Small/Trivial | High/Wide | Weak/Conditional For | Marginal net benefit, significant burden/cost, diverse values. |

| Low/Very Low | Any magnitude | Any | Weak/Conditional | Low certainty of evidence necessitates conditional language. |

| High/Moderate | Net Harm | Low/Narrow | Strong Against | Clear net harm, high certainty. |
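Table 1 can be read as a lookup from three panel judgments to a typical strength. The sketch below encodes only the four rows shown and deliberately returns "panel judgment required" for any other combination, because the table describes typical outcomes rather than binding rules; the category labels are assumptions.

```python
def typical_strength(certainty, net_effect, value_variability):
    """Map the Table 1 heuristics to a typical recommendation strength.

    certainty: "high", "moderate", "low" or "very low" (GRADE).
    net_effect: "large benefit", "small benefit" or "net harm".
    value_variability: "low" or "high".
    """
    if certainty in ("low", "very low"):
        return "weak/conditional"
    if certainty not in ("high", "moderate"):
        raise ValueError(f"unknown certainty: {certainty}")
    if net_effect == "large benefit" and value_variability == "low":
        return "strong for"
    if net_effect == "small benefit" and value_variability == "high":
        return "weak/conditional for"
    if net_effect == "net harm" and value_variability == "low":
        return "strong against"
    # Combinations outside the table go to the Evidence-to-Decision discussion.
    return "panel judgment required"


print(typical_strength("high", "large benefit", "low"))        # → strong for
print(typical_strength("moderate", "small benefit", "low"))    # → panel judgment required
```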

Table 2: Consensus Metrics from a Simulated Guideline Panel Voting Process

| Recommendation Topic | Initial Agreement (%) | Final Agreement After Delphi Rounds (%) | Recommendation Strength Finalized | Key Resolved Dispute |

|---|---|---|---|---|

| Drug A in 1st Line Therapy | 45 | 92 | Strong For | Interpretation of surrogate endpoint validity. |

| Combination Therapy B | 60 | 78 | Weak/Conditional For | Weighing cost against modest progression-free survival (PFS) gain. |

| Diagnostic Strategy C | 30 | 85 | Strong Against | Resolving false-positive risks vs. patient anxiety. |

Visualizing the Recommendation Formulation Workflow

Title: Workflow for Formulating Actionable Recommendations

The Scientist's Toolkit: Research Reagent Solutions for PVP Integration

Table 3: Essential Tools for Integrating Patient Values in Recommendations

| Item/Category | Example/Product | Function in Recommendation Formulation |

|---|---|---|

| PVP Collection Platform | Decide (Evidera), Conjoint.ly | Administers discrete choice experiments (DCEs) or time-trade-off surveys to quantify patient preferences for benefit-risk trade-offs. |

| GRADEpro GDT Software | GRADEpro Guideline Development Tool | Hosts the interactive Evidence-to-Decision (EtD) framework, structuring evidence, judgments, and draft recommendations in a standardized format. |

| Consensus Voting Tool | SurveyMonkey, Qualtrics, GRADE Grid | Facilitates anonymous panel voting and iterative Delphi rounds to achieve formal consensus on recommendation strength and direction. |

| Qualitative Analysis Software | NVivo, MAXQDA | Analyzes transcripts from patient focus groups or interviews to identify key values and themes for narrative justification of recommendations. |

| Health Economics Database | Tufts CEA Registry, NICE Evidence Reviews | Provides comparative data on cost-effectiveness to inform recommendations, particularly for weak/conditional guidance where resource use is a key factor. |

Application Notes

This phase represents the critical pivot from internal development to external validation within the EthicsGuide six-step method. The primary objectives are to transform systematic review findings and preliminary recommendations into a clear, actionable draft document and to subject this draft to structured, broad-based review by a diverse panel of external stakeholders. This process mitigates groupthink, identifies unintended ethical or practical ambiguities, and enhances the guideline's credibility and eventual adoption.

Key Principles:

- Transparency: The draft must explicitly document the evidence-to-decision process, including where expert judgment supplemented limited evidence.

- Accessibility: The language must be precise yet comprehensible to the multidisciplinary audience of clinicians, researchers, and policy-makers.

- Structured Feedback: External review must be systematically administered to collect comparable, actionable insights.

Current practice guidance from major guideline developers (e.g., WHO, NICE, and the GRADE Working Group) suggests the following quantitative benchmarks for effective external review:

Table 1: External Review Panel Composition Benchmarks

| Stakeholder Group | Recommended Number | Key Rationale | Current Industry Standard |

|---|---|---|---|

| Clinical Specialists | 5-8 | Ensure technical accuracy of recommendations. | 6-10 (Median: 7) |

| Methodologists (Ethics, Stats) | 2-3 | Scrutinize study design and ethical reasoning. | 2-4 |

| Patient Advocacy Representatives | 2-3 | Ground recommendations in patient values and practicality. | 2-3 |

| Allied Health Professionals | 2-3 | Assess feasibility and multidisciplinary integration. | 1-3 |

| Total Panel Size | 11-17 | Balances diversity with manageability. | 12-20 |

Table 2: Draft Document Review Metrics & Outcomes

| Metric | Target | Typical Outcome from Structured Review |

|---|---|---|

| Review Period Duration | 3-4 weeks | 90% of reviews returned within 4 weeks. |

| Clarity Score (Post-Review) | >4.0 / 5.0 | Average improvement of 0.8 points on 5-point Likert scale. |

| Ambiguity Resolution | >90% of flagged items | 85-95% of flagged ambiguous statements are revised. |

| Major Recommendation Change | <10% of total | 5-15% of recommendations undergo substantive modification. |

Experimental Protocols

Protocol 1: Delphi Method for Structured External Review

Objective: To achieve formal consensus on draft guideline recommendations among a panel of external experts, mitigating the influence of dominant individuals.

Materials:

- Draft Clinical Practice Guideline (CPG) document.

- Secure online survey platform (e.g., REDCap, Qualtrics).

- Pre-defined consensus threshold (e.g., ≥75% agreement).

- Panel of 12-20 external reviewers (see Table 1).

Methodology:

- Round 1 (Individual Rating): Distribute the draft CPG with a structured questionnaire. Reviewers rate each recommendation on a 9-point Likert scale (1=“highly inappropriate” to 9=“highly appropriate”) and provide free-text comments on clarity, evidence, and ethical rationale.

- Analysis & Synthesis: Collate ratings quantitatively. Calculate median score and interquartile range (IQR) for each item. Thematic analysis of qualitative comments.

- Round 2 (Feedback and Re-rating): Provide each panelist with an anonymized summary of the group's ratings and the synthesized comments. Panelists then re-rate each item and may revise their position in light of the group's input.

- Consensus Determination: After Round 2, items are categorized:

- Consensus In: Median rating ≥7 and IQR ≤3.

- Consensus Out: Median rating ≤3 and IQR ≤3.

- No Consensus: All other items.

- Final Round (Optional): For "No Consensus" items, a moderated virtual meeting is held to discuss contentious points, followed by a final vote.

- Revision: The drafting committee integrates feedback and modifies the draft, documenting all changes and rationale for unresolved disagreements.
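The consensus rules above translate into a short function. A minimal sketch, assuming ratings on the 1-9 scale; note that the IQR depends on the quantile method (NumPy's default linear interpolation is used here), so the method should be fixed in the protocol before rating begins. The example ratings are invented.

```python
import numpy as np


def classify_item(ratings, iqr_max=3.0):
    """Classify one recommendation after Round 2 using the median/IQR rules."""
    r = np.asarray(ratings, dtype=float)
    if r.size == 0 or r.min() < 1 or r.max() > 9:
        raise ValueError("ratings must lie on the 1-9 scale")
    med = float(np.median(r))
    q1, q3 = np.percentile(r, [25, 75])     # NumPy default: linear interpolation
    iqr = float(q3 - q1)
    if med >= 7 and iqr <= iqr_max:
        label = "consensus in"
    elif med <= 3 and iqr <= iqr_max:
        label = "consensus out"
    else:
        label = "no consensus"
    return label, med, iqr


round_2 = {
    "R1": [7, 8, 8, 9, 7, 8, 9, 8, 7, 8],
    "R2": [1, 2, 2, 3, 3, 2, 1, 4],
    "R3": [1, 9, 2, 8, 5, 5, 3, 7],
}
for item, ratings in round_2.items():
    print(item, classify_item(ratings))
```

Items labeled "no consensus" are the ones carried into the optional moderated final round.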

Protocol 2: Readability and Clarity Assessment

Objective: Quantitatively and qualitatively assess the draft document's readability to ensure accessibility for the target professional audience.

Materials:

- Draft CPG document (full text and patient summary sections).

- Readability software (e.g., Hemingway Editor, Readable.com).

- Clarity survey (5-point Likert scales and open-ended questions).

Methodology:

- Quantitative Analysis: Input the document's text (excluding references, tables) into readability software. Record scores for:

- Flesch Reading Ease Score (Target: 30-50 for professional documents).

- Flesch-Kincaid Grade Level (Target: ≤12).

- Sentence length (Target: <25 words average).

- Passive voice incidence (Target: <15%).

- Qualitative Assessment: Embed the clarity survey within the external review package. Ask reviewers to rate specific sections for clarity of language, purpose, and recommended action.

- Iterative Editing: Revise text to improve scores. Prioritize reducing complex sentence structures, defining all acronyms at first use, and using consistent terminology.
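The two Flesch metrics are simple formulas over word, sentence, and syllable counts: Reading Ease = 206.835 - 1.015 × (words/sentences) - 84.6 × (syllables/words), and Grade Level = 0.39 × (words/sentences) + 11.8 × (syllables/words) - 15.59. The sketch below computes them. Its sentence splitter and vowel-group syllable counter are rough heuristics (assumptions, not the algorithms used by Hemingway or Readable.com), so scores will differ somewhat from those tools, and passive-voice detection is not attempted.

```python
import re


def count_syllables(word):
    """Rough English syllable count: vowel groups, minus a silent final 'e'."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if n > 1 and word.endswith("e") and not word.endswith(("le", "ee")):
        n -= 1
    return max(n, 1)


def flesch_scores(n_words, n_sentences, n_syllables):
    """Return (Flesch Reading Ease, Flesch-Kincaid Grade Level)."""
    wps = n_words / n_sentences        # mean words per sentence
    spw = n_syllables / n_words        # mean syllables per word
    ease = 206.835 - 1.015 * wps - 84.6 * spw
    grade = 0.39 * wps + 11.8 * spw - 15.59
    return ease, grade


def readability(text):
    """Heuristic readability report for a block of prose."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    ease, grade = flesch_scores(len(words), len(sentences), syllables)
    return {
        "flesch_reading_ease": round(ease, 1),
        "fk_grade_level": round(grade, 1),
        "mean_sentence_length": len(words) / len(sentences),
    }


print(readability("Clinicians should offer drug A to adults with condition B. "
                  "The panel judged the certainty of evidence to be moderate."))
```

Because abbreviations such as "e.g." end in periods, the naive splitter will overcount sentences in guideline text; a production check would need a proper sentence tokenizer.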

Visualizations

Delphi Method Consensus Workflow

Clarity Assessment & Editing Process

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Toolkit for Drafting & External Review

| Item | Function & Rationale |

|---|---|

| GRADEpro GDT Software | Web-based platform to create guideline drafts, manage evidence profiles (SoF tables), and facilitate the evidence-to-decision framework. Ensures structured, transparent development. |

| DelphiManager / REDCap | Specialized software for administering multi-round Delphi surveys. Manages anonymous participant responses, calculates consensus metrics, and streamlines feedback synthesis. |

| Readability Test Tools (e.g., Hemingway App) | Provides immediate quantitative feedback on writing complexity (grade level, sentence structure, passive voice), enabling objective clarity improvements. |

| Reference Manager (e.g., EndNote, Zotero) | Centralized database for all systematic review citations. Critical for ensuring accurate referencing and generating bibliographies in required journal formats. |

| Secure Collaborative Workspace (e.g., SharePoint, Box) | A version-controlled, access-restricted environment for sharing draft documents, managing reviewer access, and collating comments, ensuring data security and traceability. |

| Consensus Criteria Framework | A pre-defined, documented set of rules (e.g., percentage agreement, median/IQR thresholds) for determining when consensus is reached. Essential for objectivity. |

Application Notes: Managing Declarations of Interest (DOI)

Within the EthicsGuide six-step method for clinical practice guideline (CPG) development, Step 5 is critical for ensuring the final guideline's integrity and public trust. A robust, transparent, and actively managed DOI process is non-negotiable for credible CPGs.

1.1 Current Standards and Quantitative Data: The International Committee of Medical Journal Editors (ICMJE) disclosure form remains the de facto global standard. Recent analyses show increasing adoption of more granular disclosure policies.

Table 1: Analysis of DOI Policies from Major CPG Developers (2023-2024)

| Organization | Publicly Accessible DOI Registry? | Disclosure Threshold (USD) | Look-Back Period | Management of Conflicts |
|---|---|---|---|---|
| World Health Organization (WHO) | Yes | $5,000 | 3 years | Recusal from relevant discussions/voting |
| National Institute for Health and Care Excellence (NICE) | Yes | Any financial interest | 3 years | Exclusion from topic group if significant |
| Infectious Diseases Society of America (IDSA) | Yes | $10,000 | 24 months | Published recusal statements |
| American Heart Association (AHA) | Yes | $10,000 | 24 months | Abstention from voting on relevant recs |

1.2 Protocol for a Multi-Stage DOI Management Process:

- Stage 1 - Collection: All participants (steering group, panel, evidence reviewers) must complete a standardized form (e.g., ICMJE-based) at recruitment and annually. The form must capture financial (e.g., grants, honoraria, stock) and non-financial (e.g., intellectual, academic, personal) interests.

- Stage 2 - Assessment: An independent DOI committee (with no CPG topic involvement) assesses all declarations using a pre-defined risk matrix. Risk is categorized as Minimal, Moderate, or Significant based on the value, directness, and timing of the interest relative to the guideline topic.

- Stage 3 - Management & Mitigation: Based on the assessed risk:

  - Minimal: No action; declaration published.

  - Moderate: Participant may contribute to discussions but must recuse from drafting or voting on the specific recommendations affected. Declaration published.

  - Significant: Participant is excluded from the relevant working group or the entire CPG panel. Declaration published with explanation.

- Stage 4 - Publication: All declarations (including "none") are published alongside the guideline in a machine-readable format (e.g., XML). A summary table of conflicts and their management is included in the main document.
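The Stage 2 risk matrix and Stage 3 actions can be expressed as explicit, auditable rules. The sketch below is one hypothetical encoding: the US$5,000 and US$10,000 cut-offs, the 36-month look-back, and the rule that interests outside the look-back window count as Minimal are assumptions chosen for illustration, and the `Interest` fields are not taken from any standard disclosure form. Each developer would substitute and publish its own matrix.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    MINIMAL = 1
    MODERATE = 2
    SIGNIFICANT = 3


# Hypothetical thresholds for illustration only; each developer sets and publishes its own.
MODERATE_USD = 5_000
SIGNIFICANT_USD = 10_000
LOOKBACK_MONTHS = 36

# Stage 3 management actions, keyed by risk level.
ACTIONS = {
    Risk.MINIMAL: "No action; declaration published.",
    Risk.MODERATE: "May join discussions; recused from drafting or voting on affected "
                   "recommendations; declaration published.",
    Risk.SIGNIFICANT: "Excluded from the relevant working group or panel; "
                      "declaration published with explanation.",
}


@dataclass(frozen=True)
class Interest:
    description: str
    value_usd: float  # 0 for non-financial (intellectual, academic, personal) interests
    direct: bool      # True if the interest bears directly on the guideline topic
    months_ago: int   # months since the interest ended (0 if ongoing)


def classify(interest: Interest) -> Risk:
    """Apply the hypothetical risk matrix to a single declared interest."""
    if interest.months_ago > LOOKBACK_MONTHS:
        return Risk.MINIMAL  # outside the look-back window (still declared and published)
    if interest.direct and interest.value_usd >= SIGNIFICANT_USD:
        return Risk.SIGNIFICANT
    if interest.direct or interest.value_usd >= MODERATE_USD:
        return Risk.MODERATE
    return Risk.MINIMAL


def assess_participant(interests: list[Interest]) -> tuple[Risk, str]:
    """A participant's overall risk is the highest risk among their declared interests."""
    level = max((classify(i) for i in interests), default=Risk.MINIMAL)
    return level, ACTIONS[level]
```

Taking the maximum across a participant's interests is a conservative design choice: a single Significant interest determines the management action, however many Minimal ones accompany it.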

1.3 Research Reagent Solutions for DOI Management:

| Reagent/Tool | Function in DOI Process |
|---|---|
| Standardized Electronic Disclosure Form (e.g., ICMJE format) | Ensures consistent, comprehensive, and auditable data collection from all contributors. |
| Independent DOI Review Committee | Serves as the "assay control" to minimize bias in the assessment and mitigation of declared interests. |
| Pre-Defined Risk Matrix Template | Provides the "protocol" for objectively classifying the severity of a conflict, ensuring consistency. |
| Public-Facing, Searchable DOI Registry | Acts as the "data repository" for full transparency, allowing end-users to assess potential bias. |

Figure: Four-Stage Conflict of Interest Management Workflow

Application Notes: Ensuring Accessibility

Accessibility ensures the guideline is findable, understandable, and usable by all intended end-users, including clinicians, patients, and policymakers. This extends beyond document format to encompass dissemination and implementation support.

2.1 Current Landscape and Data: The GIN-McMaster Guideline Development Checklist and WHO's guideline development guidance both emphasize multi-format dissemination. Reported engagement data suggest that user engagement increases when guidelines are offered in accessible formats (Table 2).

Table 2: Impact of Multi-Format Dissemination on Guideline Engagement Metrics

| Format/Strategy | Target Audience | Reported Increase in Downloads/Views (vs. Full Technical Report) | Key Requirement |
|---|---|---|---|
| Full Technical Report | Researchers, Methodologists | Baseline | - |
| Clinical Quick-Reference Guide | Practicing Clinicians | 180-250% | Layered, actionable summary |
| Patient-Friendly Version | Patients & Public | 300%+ | Plain language, visual aids |
| Interactive Online Tool | All Users | 400%+ | API access, mobile-responsive design |
| Social Media Summaries | Broad Professional Audience | 150% (reach) | Visual abstracts, key points |

2.2 Protocol for Developing an Accessibility & Dissemination Plan:

- Phase 1 - Stakeholder Mapping: Identify all user groups (e.g., specialist MD, primary care nurse, patient advocate, health IT developer) and their specific needs (detail level, format, channel).

- Phase 2 - Multi-Format Production:

  - Create a layered publication: Produce a full technical report, a 5-page executive summary for clinicians, and a 2-page patient leaflet.

  - Ensure technical compliance: All digital outputs must meet the WCAG 2.1 Level AA success criteria (e.g., screen-reader compatibility, alt text for images, logical heading structure).

  - Develop ancillary tools: Create slide decks for educators, algorithmic decision aids for EHR integration, and data files for meta-researchers.

- Phase 3 - Multi-Channel Dissemination:

  - Register the guideline in international repositories (e.g., the GIN International Guidelines Library; WHO IRIS for WHO-issued guidelines).

  - Coordinate a planned, embargoed release to relevant professional societies and patient groups.

  - Execute a simultaneous launch via journal publication, the organization's website, social media (with messages tailored to each platform), and partner newsletters.

- Phase 4 - Maintenance: Establish a schedule for planned review. Publish an errata and updates log. Provide a clear channel for user feedback.
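Automated checks can catch some Phase 2 compliance problems before manual review. The sketch below, using only Python's standard `html.parser`, flags two common issues in an HTML version of a guideline: images with no `alt` attribute (WCAG success criterion 1.1.1, Non-text Content) and skipped heading levels, a best-practice check related to criterion 1.3.1 (Info and Relationships). It is an illustrative partial check under these assumptions, not a WCAG 2.1 AA conformance test; screen-reader testing and manual review remain necessary.

```python
from html.parser import HTMLParser


class AccessibilityAudit(HTMLParser):
    """Flags images without an alt attribute and skipped heading levels."""

    def __init__(self):
        super().__init__()
        self.issues: list[str] = []
        self._last_heading = 0  # level of the most recent h1-h6 seen (0 = none yet)

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            # alt="" is valid for decorative images, so only a missing attribute is flagged
            self.issues.append(f"img missing alt: {attributes.get('src', '?')}")
        elif len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            if self._last_heading and level > self._last_heading + 1:
                self.issues.append(f"heading skips from h{self._last_heading} to h{level}")
            self._last_heading = level


def audit(html: str) -> list[str]:
    parser = AccessibilityAudit()
    parser.feed(html)
    parser.close()
    return parser.issues
```

For example, `audit('<h1>Guide</h1><h3>Dosing</h3><img src="chart.png">')` reports both the h1-to-h3 skip and the missing alt text.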

2.3 Research Reagent Solutions for Accessibility:

| Reagent/Tool | Function in Accessibility Process |
|---|---|
| Web Content Accessibility Guidelines (WCAG) 2.1 Checklist | The definitive "assay protocol" for ensuring digital content is perceivable, operable, understandable, and robust for users with disabilities. |
| Plain Language Editor/Reviewer | Acts as a "translation enzyme," converting complex medical and methodological jargon into clear, actionable text for diverse audiences. |
| Visual Abstract Creation Tool | Functions as a "molecular visualization" tool, distilling the guideline's core question, methods, and recommendations into a single, shareable graphic. |
| Guideline International Network (GIN) Library | Serves as the "public repository" for archiving and global discoverability of the published guideline. |
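Readability tools and plain-language reviewers commonly report a grade level. The sketch below computes the Flesch-Kincaid Grade Level, 0.39 × (words per sentence) + 11.8 × (syllables per word) - 15.59, using a crude vowel-group syllable counter. It is an approximation for screening drafts such as the patient leaflet, under the stated heuristic; it is not the algorithm used by Hemingway App or any other specific product, and dictionary-based syllable counts will give somewhat different scores.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic: count vowel groups and drop a silent final 'e'."""
    word = word.lower()
    groups = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and groups > 1:
        groups -= 1
    return max(groups, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level using the heuristic syllable counter above."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59
```

A plain-language target (for example, a maximum grade level for the patient version) would be a project decision recorded in the dissemination plan, not something this function determines.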

Figure: Multi-Format and Multi-Channel Accessibility Pipeline

Application Notes: Dissemination and Implementation

This protocol outlines the systematic dissemination and implementation (D&I) strategies for the EthicsGuide six-step clinical practice guideline (CPG) within real-world clinical and research settings. Successful D&I requires moving beyond passive publication to active, multi-faceted campaigns targeting key stakeholder groups. The process is iterative, measured, and adapted based on continuous feedback.

Core D&I Strategies

- Targeted Multi-Channel Dissemination: Tailor messaging and delivery channels for specific audiences (e.g., clinicians, hospital administrators, policy-makers, patients). Utilize professional conferences, specialty journals, institutional newsletters, and professional society networks.

- Implementation Toolkit Development: Create practical resources (e.g., quick-reference guides, decision aids, electronic health record [EHR] integration templates, fidelity checklists) to lower the barrier to adoption.

- Stakeholder Engagement & Champions: Identify and empower respected "champion" clinicians and researchers within target institutions to advocate for and model the use of EthicsGuide.

- Education and Training Workshops: Conduct interactive, case-based training sessions to build competency and address perceived complexities in applying the EthicsGuide framework.

- Audit, Feedback, and Adaptation: Establish mechanisms to monitor guideline adherence, collect user feedback on barriers, and refine implementation strategies or guideline wording for local contexts.

Quantitative Metrics for D&I Success

Monitoring the impact of D&I efforts requires tracking quantitative and qualitative metrics across the five dimensions of the RE-AIM framework: Reach, Effectiveness, Adoption, Implementation, and Maintenance.

Table 1: Key Performance Indicators for D&I of EthicsGuide CPG

| RE-AIM Dimension | Metric | Measurement Method | Target Benchmark |
|---|---|---|---|
| Reach | Awareness among target clinicians | Pre/Post-dissemination surveys | ≥70% awareness within 12 months |
| Effectiveness | Fidelity of application | Audit of case reports using standardized checklist | ≥80% fidelity score |
| Adoption | Number of institutions formally endorsing the guideline | Institutional policy database | Adoption in ≥3 major research hospitals in Year 1 |
| Implementation | Frequency of toolkit resource downloads | Website analytics | ≥500 downloads of primary toolkit |
| Maintenance | Sustained use at 24 months | Follow-up survey & EHR data audit | ≥60% of initial adopters reporting sustained use |
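The targets in Table 1 can be checked mechanically once data are collected. The sketch below transcribes the five benchmarks and reports, per RE-AIM dimension, whether an observed value meets its target. The metric keys are hypothetical names, the values in the usage example are placeholders rather than study data, and time windows (e.g., "within 12 months") are assumed to be applied when the data are extracted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Kpi:
    dimension: str
    metric: str    # hypothetical key for the observed value
    target: float  # every Table 1 benchmark is an "at least" threshold


# Target benchmarks transcribed from Table 1.
KPIS = [
    Kpi("Reach", "awareness_pct", 70),
    Kpi("Effectiveness", "fidelity_score", 80),
    Kpi("Adoption", "endorsing_hospitals", 3),
    Kpi("Implementation", "toolkit_downloads", 500),
    Kpi("Maintenance", "sustained_use_pct", 60),
]


def evaluate(observed: dict[str, float]) -> dict[str, Optional[bool]]:
    """Map each RE-AIM dimension to True/False (target met or not), or None if unreported."""
    results = {}
    for kpi in KPIS:
        value = observed.get(kpi.metric)
        results[kpi.dimension] = None if value is None else value >= kpi.target
    return results
```

Returning `None` for an unreported metric keeps missing data distinct from a missed target, which matters for Maintenance because it is measured only at 24 months.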

Experimental Protocols

Protocol: Randomized Controlled Trial of Implementation Strategies

Title: Comparing the Efficacy of Active vs. Passive Dissemination Strategies on EthicsGuide CPG Adoption.

Objective: To determine if a multifaceted, active implementation strategy (AIS) leads to higher adoption fidelity and clinician satisfaction compared to passive dissemination (PD) alone.

Materials:

- Clinical sites (e.g., hospital research departments, n=20).

- EthicsGuide CPG full document and quick-reference guide.

- Pre-/post-implementation survey instruments.

- Fidelity assessment checklist.

- Web-based training modules.

Methodology:

- Site Recruitment & Randomization: Recruit 20 clinical sites and randomize them into two arms:

  - Active Implementation Strategy (AIS) Arm (n=10): Receives a bundled intervention.

  - Passive Dissemination (PD) Arm (n=10): Receives standard dissemination only.

- Intervention Phase (6 months):

  - AIS Arm: Sites receive: (a) Dedicated champion training workshop; (b) Access to interactive online training modules; (c) Customizable EHR integration templates; (d) Three cycles of audit & feedback based on submitted case reports.

  - PD Arm: Sites receive the EthicsGuide CPG publication via email and a listing in a professional society newsletter.

- Data Collection:

  - Baseline: Administer survey assessing baseline awareness, attitudes, and practices.

  - Month 7: Administer post-implementation survey. From each site, collect 5 de-identified case reports where the CPG was applicable.

- Outcome Assessment:

  - Primary Outcome: Mean fidelity score (0-100 scale) assessed by blinded reviewers using the standardized checklist on submitted case reports.

  - Secondary Outcomes: Change in survey scores for perceived usefulness, self-efficacy, and intent to use.
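Site allocation should be reproducible and concealed from site staff. A minimal sketch of a seeded 1:1 allocation of the 20 sites follows; the site identifiers and seed are hypothetical, and the simple unstratified shuffle is an assumption. A trial might instead stratify by setting (e.g., academic vs. community), with the seed held by an independent statistician.

```python
import random


def randomize_sites(site_ids: list[str], seed: int) -> dict[str, list[str]]:
    """Allocate sites 1:1 to the AIS and PD arms with a seeded, reproducible shuffle."""
    ids = sorted(site_ids)  # sort first so the allocation depends only on the seed
    if len(ids) % 2:
        raise ValueError("1:1 allocation needs an even number of sites")
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    return {"AIS": sorted(ids[:half]), "PD": sorted(ids[half:])}
```

Re-running with the same seed reproduces the allocation exactly, which lets auditors verify it after the trial.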

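Statistical Analysis: Because sites, not individual case reports, are randomized, first average the case-report fidelity scores within each site, then compare mean site-level fidelity between the AIS and PD arms (n=10 sites each) using an independent-samples t-test; a linear mixed-effects model with site as a random effect is an alternative. Analyze secondary outcomes with a mixed-design (arm × time) repeated-measures ANOVA. A two-sided p-value <0.05 will be considered significant.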
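Because the unit of randomization is the site, the primary comparison can be sketched at site level with Python's standard library. The code below averages case-report fidelity scores within each site, computes Welch's t statistic (the unequal-variance form of the independent-samples t-test) with Welch-Satterthwaite degrees of freedom, and, as a swapped-in stdlib-only alternative to a t-distribution p-value, an exact two-sided permutation p-value over all possible site allocations. Function names are hypothetical; the confirmatory analysis would still be run in R, SPSS, or SAS.

```python
import itertools
import math
import statistics


def site_level_means(reports: dict[str, list[float]]) -> dict[str, float]:
    """Collapse each site's case-report fidelity scores (0-100) to one site mean."""
    return {site: statistics.fmean(scores) for site, scores in reports.items()}


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for arm a vs. arm b."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def permutation_p(a: list[float], b: list[float]) -> float:
    """Exact two-sided permutation p-value for the difference in arm means.

    Enumerates every way of assigning len(a) of the pooled site means to arm a.
    """
    pooled = list(a) + list(b)
    total, n_a, n_b = sum(pooled), len(a), len(b)
    observed = abs(statistics.fmean(a) - statistics.fmean(b))
    extreme = count = 0
    for idx in itertools.combinations(range(len(pooled)), n_a):
        s_a = sum(pooled[i] for i in idx)
        diff = abs(s_a / n_a - (total - s_a) / n_b)
        if diff >= observed - 1e-12:  # small tolerance for floating-point ties
            extreme += 1
        count += 1
    return extreme / count
```

With 10 sites per arm the permutation loop enumerates C(20, 10) = 184,756 allocations, which is fast enough to run exactly rather than by random resampling.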

Protocol: Barrier Analysis via Stakeholder Focus Groups

Title: Qualitative Identification of Barriers and Facilitators to EthicsGuide Implementation.

Objective: To identify perceived and actual barriers to implementing the EthicsGuide CPG among frontline clinicians and researchers.

Methodology:

- Participant Recruitment: Purposefully sample 15-20 clinicians and researchers from varied settings (academic, community).

- Focus Group Conduct: Conduct 3-4 focus groups (5-7 participants each) using a semi-structured interview guide. Questions probe knowledge, attitudes, perceived organizational support, and workflow compatibility.

- Data Analysis: Record and transcribe sessions. Employ thematic analysis using a constant comparative method to identify, code, and report recurring themes related to barriers and facilitators.

Diagrams

Figure: EthicsGuide D&I Strategy Cycle

Figure: RCT Workflow for Testing Implementation Strategies

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for D&I Research in Clinical Guidelines

| Tool / Reagent | Provider/Example | Function in D&I Research |
|---|---|---|
| RE-AIM/PRISM Framework Guide | re-aim.org | Provides the foundational conceptual model for planning and evaluating implementation studies. |
| Implementation Fidelity Checklist | Custom-developed (see Protocol 2.1) | Standardized tool to measure the degree to which the CPG is applied as intended. |
| Survey Platforms (Qualtrics, REDCap) | Qualtrics; Vanderbilt University (REDCap) | For efficient distribution and analysis of pre-/post-implementation surveys measuring reach and effectiveness. |
| Audit & Feedback Software | A&F Oracle, custom dashboards | Enables systematic collection of practice data and delivery of tailored feedback to clinicians. |
| Qualitative Data Analysis Software | NVivo, Dedoose | Supports thematic analysis of focus group and interview transcripts to identify barriers/facilitators. |
| Statistical Analysis Software | R, SPSS, SAS | For analyzing quantitative outcomes (fidelity scores, survey results) in implementation trials. |